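Chapter 2 - Sockets and Patterns #

In Chapter 1 - Basics we took ZeroMQ for a drive, with some basic examples of the main ZeroMQ patterns: request-reply, pub-sub, and pipeline. In this chapter, we're going to get our hands dirty and start to learn how to use these tools in real programs.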

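We'll cover:

- How to create and work with ZeroMQ sockets.

- How to send and receive messages on sockets.

- How to build your apps around ZeroMQ's asynchronous I/O model.

- How to handle multiple sockets in one thread.

- How to handle fatal and nonfatal errors properly.

- How to handle interrupt signals like Ctrl-C.

- How to shut down a ZeroMQ application cleanly.

- How to check a ZeroMQ application for memory leaks.

- How to send and receive multipart messages.

- How to forward messages across networks.

- How to build a simple message queuing broker.

- How to write multithreaded applications with ZeroMQ.

- How to use ZeroMQ to signal between threads.

- How to use ZeroMQ to coordinate a network of nodes.

- How to create and use message envelopes for pub-sub.

- How to use the high-water mark (HWM) to protect against memory overflows.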

Socket API #

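To be perfectly honest, ZeroMQ does a kind of switch-and-bait on you, for which we don't apologize. It's for your own good and it hurts us more than it hurts you. ZeroMQ presents a familiar socket-based API, which takes great effort on our part to hide a bunch of message-processing engines. However, the result will slowly fix your world view about how to design and write distributed software.

Sockets are the de facto standard API for network programming, as well as being useful for stopping your eyes from falling onto your cheeks. One thing that makes ZeroMQ especially tasty to developers is that it uses sockets and messages instead of some other arbitrary set of concepts. Kudos to Martin Sustrik for pulling this off. It turns "Message Oriented Middleware", a phrase guaranteed to send the whole room off to Catatonia, into "Extra Spicy Sockets!", which leaves us with a strange craving for pizza and a desire to know more.

Like a favorite dish, ZeroMQ sockets are easy to digest. Sockets have a life in four parts, just like BSD sockets: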

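- Creating and destroying sockets, which go together to form a karmic circle of socket life (see `zmq_socket()`, `zmq_close()`).

- Configuring sockets by setting options on them and checking them if necessary (see `zmq_setsockopt()`, `zmq_getsockopt()`).

- Plugging sockets into the network topology by creating ZeroMQ connections to and from them (see `zmq_bind()`, `zmq_connect()`).

- Using the sockets to carry data by writing and receiving messages on them (see `zmq_msg_send()`, `zmq_msg_recv()`).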

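Note that sockets are always void pointers, and messages (which we'll come to very soon) are structures. So in C you pass sockets as such, but you pass the addresses of messages to all functions that work with messages, like `zmq_msg_send()` and `zmq_msg_recv()`. As a mnemonic, realize that "in ZeroMQ, all your sockets are belong to us", but messages are things you actually own in your code.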

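Creating, destroying, and configuring sockets works as you'd expect for any object. But remember that ZeroMQ is an asynchronous, elastic fabric. This has some impact on how we plug sockets into the network topology and how we use the sockets after that.

Plugging Sockets into the Topology #

To create a connection between two nodes, you use `zmq_bind()` in one node and `zmq_connect()` in the other. As a general rule of thumb, the node that does `zmq_bind()` is a "server", sitting on a well-known network address, and the node that does `zmq_connect()` is a "client", with unknown or arbitrary network addresses. Thus we say that we "bind a socket to an endpoint" and "connect a socket to an endpoint", the endpoint being that well-known network address.

ZeroMQ connections are somewhat different from classic TCP connections. The main notable differences are: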

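- They go across an arbitrary transport (`inproc`, `ipc`, `tcp`, `pgm`, or `epgm`). See `zmq_inproc()`, `zmq_ipc()`, `zmq_tcp()`, `zmq_pgm()`, and `zmq_epgm()`.

- One socket may have many outgoing and many incoming connections.

- There is no `zmq_accept()` method. When a socket is bound to an endpoint, it automatically starts accepting connections.

- The network connection itself happens in the background, and ZeroMQ will automatically reconnect if the network connection is broken (e.g., if the peer disappears and then comes back).

- Your application code cannot work with these connections directly; they are encapsulated under the socket.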

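Many architectures follow some kind of client/server model, where the server is the component that is most static, and the clients are the components that are most dynamic, i.e., they come and go the most. There are sometimes issues of addressing: servers will be visible to clients, but not necessarily vice versa. So mostly it's obvious which node should be doing `zmq_bind()` (the server) and which should be doing `zmq_connect()` (the client). It also depends on the kind of sockets you're using, with some exceptions for unusual network architectures. We'll look at socket types later.

Now, imagine we start the client before we start the server. In traditional networking, we get a big red Fail flag. But ZeroMQ lets us start and stop pieces arbitrarily. As soon as the client node does `zmq_connect()`, the connection exists and that node can start to write messages to the socket. At some stage (hopefully before messages queue up so much that they start to get discarded, or the client blocks), the server comes alive, does a `zmq_bind()`, and ZeroMQ starts to deliver messages.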

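A server node can bind to many endpoints (that is, a combination of protocol and address) and it can do this using a single socket. This means it will accept connections across different transports: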

zmq_bind (socket, "tcp://*:5555");

zmq_bind (socket, "tcp://*:9999");

zmq_bind (socket, "inproc://somename");

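For most transports, you cannot bind to the same endpoint twice, unlike for example in UDP. The `ipc` transport does, however, let one process bind to an endpoint already used by a first process. It's meant to allow a process to recover after a crash.

Although ZeroMQ tries to be neutral about which side binds and which side connects, there are differences. We'll see these in more detail later. The upshot is that you should usually think in terms of "servers" as static parts of your topology that bind to more or less fixed endpoints, and "clients" as dynamic parts that come and go and connect to these endpoints. Then, design your application around this model. The chances that it will "just work" are much better like that.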

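Sockets have types. The socket type defines the semantics of the socket, its policies for routing messages inwards and outwards, queuing, etc. You can connect certain types of socket together, e.g., a publisher socket and a subscriber socket. Sockets work together in "messaging patterns". We'll look at this in more detail later.

It's the ability to connect sockets in these different ways that gives ZeroMQ its basic power as a message queuing system. There are layers on top of this, such as proxies, which we'll get to later. But essentially, with ZeroMQ you define your network architecture by plugging pieces together like a child's construction toy.

Sending and Receiving Messages #

To send and receive messages, you use the `zmq_msg_send()` and `zmq_msg_recv()` methods.

Figure 9 - TCP sockets are 1 to 1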

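Let's look at the main differences between TCP sockets and ZeroMQ sockets when it comes to working with data:

- ZeroMQ sockets carry messages, like UDP, rather than a stream of bytes as TCP does. A ZeroMQ message is length-specified binary data. We'll come to messages shortly; their design is optimized for performance and so a little tricky.

- ZeroMQ sockets do their I/O in a background thread. This means that messages arrive in local input queues and are sent from local output queues, no matter what your application is busy doing.

- ZeroMQ sockets have one-to-N routing behavior built in, according to the socket type.

The `zmq_send()` method does not actually send the message to the socket connection(s). It queues the message so that the I/O thread can send it asynchronously. It does not block except in some exception cases. So the message is not necessarily sent when `zmq_send()` returns to your application.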

Unicast Transports #

ZeroMQ provides a set of unicast transports (`inproc`, `ipc`, and `tcp`) and multicast transports (`epgm`, `pgm`). Multicast is an advanced technique that we'll come to later. Don't even start using it unless you know that your fan-out ratios will make 1-to-N unicast impossible.

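For most common cases, use `tcp`, which is a disconnected TCP transport. It is elastic, portable, and fast enough for most cases. We call this disconnected because ZeroMQ's `tcp` transport doesn't require that the endpoint exists before you connect to it. Clients and servers can connect and bind at any time, can go and come back, and it remains transparent to applications.

The inter-process `ipc` transport is disconnected, like `tcp`. It has one limitation: it does not yet work on Windows. By convention we use endpoint names with an ".ipc" extension to avoid potential conflict with other file names. On UNIX systems, if you use `ipc` endpoints you need to create these with appropriate permissions, otherwise they may not be shareable between processes running under different user IDs. You must also make sure all processes can access the files, e.g., by running in the same working directory.

The inter-thread transport, `inproc`, is a connected signaling transport. It is much faster than `tcp` or `ipc`. This transport has a specific limitation compared to `tcp` and `ipc`: the server must issue a bind before any client issues a connect. This was fixed in ZeroMQ v4.0 and later versions.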

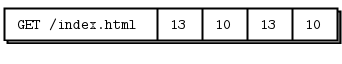

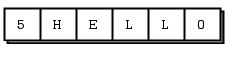

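Newcomers to ZeroMQ often ask (it's a question I've asked myself): "How do I write an XYZ server in ZeroMQ?" For example, "How do I write an HTTP server in ZeroMQ?" The implication is that if we use normal sockets to carry HTTP requests and responses, we should be able to use ZeroMQ sockets to do the same, only much faster and better. The answer used to be "this is not how it works". ZeroMQ is not a neutral carrier: it imposes a framing on the transport protocols it uses. This framing is not compatible with existing protocols, which tend to use their own framing. For example, compare an HTTP request and a ZeroMQ request, both over TCP/IP.

Figure 10 - HTTP on the Wire

The HTTP request uses CR-LF as its simplest framing delimiter, whereas ZeroMQ uses a length-specified frame. So you could write an HTTP-like protocol using ZeroMQ, using for example the request-reply socket pattern. But it would not be HTTP.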

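Figure 11 - ZeroMQ on the Wire

Since v3.3, however, ZeroMQ has had a socket option called `ZMQ_ROUTER_RAW` that lets you read and write data without the ZeroMQ framing. You could use this to read and write proper HTTP requests and responses. Hardeep Singh contributed this change so that he could connect to Telnet servers from his ZeroMQ application. At the time of writing this is still somewhat experimental, but it shows how ZeroMQ keeps evolving to solve new problems. Maybe the next patch will be yours. (In libzmq 4.x this raw-TCP role is filled by the `ZMQ_STREAM` socket type.)

I/O Threads #

We said that ZeroMQ does I/O in a background thread. One I/O thread (for all sockets) is sufficient for all but the most extreme applications. When you create a new context, it starts with one I/O thread. The general rule of thumb is to allow one I/O thread per gigabyte of data in or out per second. To raise the number of I/O threads, use the `zmq_ctx_set()` call before creating any sockets:

int io_threads = 4;

void *context = zmq_ctx_new ();

zmq_ctx_set (context, ZMQ_IO_THREADS, io_threads);

assert (zmq_ctx_get (context, ZMQ_IO_THREADS) == io_threads);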

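We've seen that one socket can handle dozens, even thousands of connections at once. This has a fundamental impact on how you write applications. A traditional networked application has one process or one thread per remote connection, and that process or thread handles one socket. ZeroMQ lets you collapse this entire structure into a single process and then break it up as necessary for scaling.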

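If you are using ZeroMQ for inter-thread communications only (i.e., a multithreaded application that does no external socket I/O), you can set the I/O threads to zero. It's not a significant optimization though, more of a curiosity.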

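Messaging Patterns #

Underneath the brown paper wrapping of ZeroMQ's socket API lies the world of messaging patterns. If you have a background in enterprise messaging, or know UDP well, these will be vaguely familiar. But to most ZeroMQ newcomers, they are a surprise. We're so used to the TCP paradigm where a socket maps one-to-one to another node.

Let's recap briefly what ZeroMQ does for you. It delivers blobs of data (messages) to nodes, quickly and efficiently. You can map nodes to threads, processes, or machines. ZeroMQ gives your applications a single socket API to work with, no matter what the actual transport (like in-process, inter-process, TCP, or multicast). It automatically reconnects to peers as they come and go. It queues messages at both sender and receiver, as needed. It limits these queues to guard processes against running out of memory. It handles socket errors. It does all I/O in background threads. It uses lock-free techniques for talking between nodes, so there are never locks, waits, semaphores, or deadlocks.

But cutting through that, it routes and queues messages according to precise recipes called patterns. It is these patterns that provide ZeroMQ's intelligence. They encapsulate our hard-earned experience of the best ways to distribute data and work. ZeroMQ's patterns are hard-coded, but future versions may allow user-definable patterns.

ZeroMQ patterns are implemented by pairs of sockets with matching types. In other words, to understand ZeroMQ patterns you need to understand socket types and how they work together. Mostly, this just takes study; there is little that is obvious at this level.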

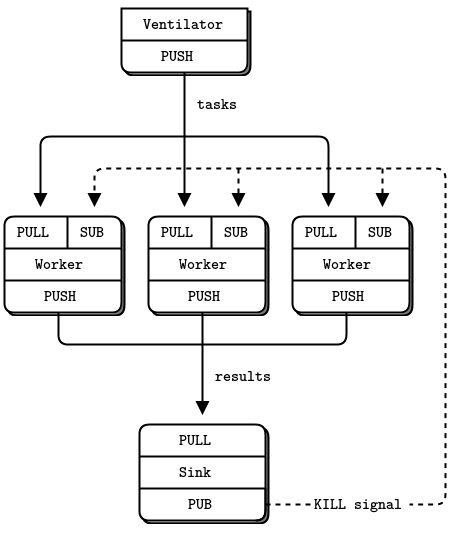

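The built-in core ZeroMQ patterns are:

- Request-reply, which connects a set of clients to a set of services. This is a remote procedure call and task distribution pattern.

- Pub-sub, which connects a set of publishers to a set of subscribers. This is a data distribution pattern.

- Pipeline, which connects nodes in a fan-out/fan-in pattern that can have multiple steps and loops. This is a parallel task distribution and collection pattern.

- Exclusive pair, which connects two sockets exclusively. This is a pattern for connecting two threads in a process, not to be confused with "normal" pairs of sockets.

We looked at the first three of these in Chapter 1 - Basics, and we'll see the exclusive pair pattern later in this chapter. The `zmq_socket(3)` man page is fairly clear about the patterns; it's worth reading several times until it starts to make sense. These are the socket combinations that are valid for a connect-bind pair (either side can bind):

- PUB and SUB

- REQ and REP

- REQ and ROUTER (take care, REQ inserts an extra null frame)

- DEALER and REP (take care, REP assumes a null frame)

- DEALER and ROUTER

- DEALER and DEALER

- ROUTER and ROUTER

- PUSH and PULL

- PAIR and PAIR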

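You'll also see references to XPUB and XSUB sockets, which we'll come to later (they're like raw versions of PUB and SUB). Any other combination will produce undocumented and unreliable results, and future versions of ZeroMQ will probably return errors if you try them. You can and will, of course, bridge other socket types via code, i.e., read from one socket type and write to another.

High-Level Messaging Patterns #

These four core patterns are cooked into ZeroMQ. They are part of the ZeroMQ API, implemented in the core C++ library, and are guaranteed to be available in all fine retail stores.

On top of those, we add high-level messaging patterns. We build these high-level patterns on top of ZeroMQ and implement them in whatever language we're using for our application. They are not part of the core library, do not come with the ZeroMQ package, and exist in their own space as part of the ZeroMQ community. For example, the Majordomo pattern, which we explore in Chapter 4 - Reliable Request-Reply Patterns, sits in the GitHub Majordomo project in the ZeroMQ organization.

One of the things we aim to provide you with in this book is a set of such high-level patterns, both small (how to handle messages sanely) and large (how to make a reliable pub-sub architecture).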

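Working with Messages #

The `libzmq` core library has in fact two APIs to send and receive messages. The `zmq_send()` and `zmq_recv()` methods that we've already seen and used are simple one-liners. We will use these often, but `zmq_recv()` is bad at dealing with arbitrary message sizes: it truncates messages to whatever buffer size you provide. So there's a second API that works with `zmq_msg_t` structures, with a richer but more difficult API:

- Initialise a message: `zmq_msg_init()`, `zmq_msg_init_size()`, `zmq_msg_init_data()`.

- Sending and receiving a message: `zmq_msg_send()`, `zmq_msg_recv()`.

- Release a message: `zmq_msg_close()`.

- Access message content: `zmq_msg_data()`, `zmq_msg_size()`, `zmq_msg_more()`.

- Work with message properties: `zmq_msg_get()`, `zmq_msg_set()`.

- Message manipulation: `zmq_msg_copy()`, `zmq_msg_move()`.

On the wire, ZeroMQ messages are blobs of any size from zero upwards that fit in memory. You do your own serialization using Protocol Buffers, MsgPack, JSON, or whatever else your applications need to speak. It's wise to choose a data representation that is portable, but you can make your own decisions about trade-offs.

In memory, ZeroMQ messages are `zmq_msg_t` structures (or classes, depending on your language). Here are the basic ground rules for using ZeroMQ messages in C:

- You create and pass around `zmq_msg_t` objects, not blocks of data.

- To read a message, you use `zmq_msg_init()` to create an empty message, and then you pass that to `zmq_msg_recv()`.

- To write a message from new data, you use `zmq_msg_init_size()` to create a message and at the same time allocate a block of data of some size. You then fill that data using `memcpy`, and pass the message to `zmq_msg_send()`.

- To release (not destroy) a message, you call `zmq_msg_close()`. This drops a reference, and eventually ZeroMQ will destroy the message.

- To access the message content, you use `zmq_msg_data()`. To know how much data the message contains, use `zmq_msg_size()`.

- Do not use `zmq_msg_move()`, `zmq_msg_copy()`, or `zmq_msg_init_data()` unless you read the man pages and know precisely why you need these.

- After you pass a message to `zmq_msg_send()`, ZeroMQ will clear the message, i.e., set the size to zero. You cannot send the same message twice, and you cannot access the message data after sending it.

- These rules don't apply if you use `zmq_send()` and `zmq_recv()`, to which you pass byte arrays, not message structures.

If you want to send the same message more than once, and it's sizable, create a second message, initialize it using `zmq_msg_init()`, and then use `zmq_msg_copy()` to create a copy of the first message. This does not copy the data but copies a reference. You can then send the message twice (or more, if you create more copies) and the message will only be finally destroyed when the last copy is sent or closed.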

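ZeroMQ also supports multipart messages, which let you send or receive a list of frames as a single on-the-wire message. This is widely used in real applications and we'll look at that later in this chapter and in Chapter 3 - Advanced Request-Reply Patterns.

Frames (also called "message parts" in the ZeroMQ reference manual pages) are the basic wire format for ZeroMQ messages. A frame is a length-specified block of data. The length can be zero upwards. If you've done any TCP programming you'll appreciate why frames are a useful answer to the question "how much data am I supposed to read of this network socket now?"

There is a wire-level protocol called ZMTP that defines how ZeroMQ reads and writes frames on a TCP connection. If you're interested in how this works, the spec is quite short.

Originally, a ZeroMQ message was one frame, like UDP. We later extended this with multipart messages, which are quite simply series of frames with a "more" bit set to one, followed by one with that bit set to zero. The ZeroMQ API then lets you write messages with a "more" flag, and when you read messages, it lets you check if there's "more".

In the low-level ZeroMQ API and the reference manual, therefore, there's some fuzziness about messages versus frames. So here's a useful lexicon: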

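- A message can be one or more parts.

- These parts are also called "frames".

- Each part is a `zmq_msg_t` object.

- You send and receive each part separately, in the low-level API.

- Higher-level APIs provide wrappers to send entire multipart messages.

Some other things that are worth knowing about messages:

- You may send zero-length messages, e.g., for sending a signal from one thread to another.

- ZeroMQ does not send the message (single or multipart) right away, but at some indeterminate later time. A multipart message must therefore fit in memory.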

// Reading from multiple sockets

// This version uses a simple recv loop

#include "zhelpers.h"

int main (void)

{

// Connect to task ventilator

void *context = zmq_ctx_new ();

void *receiver = zmq_socket (context, ZMQ_PULL);

zmq_connect (receiver, "tcp://localhost:5557");

// Connect to weather server

void *subscriber = zmq_socket (context, ZMQ_SUB);

zmq_connect (subscriber, "tcp://localhost:5556");

zmq_setsockopt (subscriber, ZMQ_SUBSCRIBE, "10001 ", 6);

// Process messages from both sockets

// We prioritize traffic from the task ventilator

while (1) {

char msg [256];

while (1) {

int size = zmq_recv (receiver, msg, 255, ZMQ_DONTWAIT);

if (size != -1) {

// Process task

}

else

break;

}

while (1) {

int size = zmq_recv (subscriber, msg, 255, ZMQ_DONTWAIT);

if (size != -1) {

// Process weather update

}

else

break;

}

// No activity, so sleep for 1 msec

s_sleep (1);

}

zmq_close (receiver);

zmq_close (subscriber);

zmq_ctx_destroy (context);

return 0;

}

You must call `zmq_msg_close()` when you have finished with a received message.

//

// Reading from multiple sockets in C++

// This version uses a simple recv loop

//

#include "zhelpers.hpp"

int main (int argc, char *argv[])

{

// Prepare our context and sockets

zmq::context_t context(1);

// Connect to task ventilator

zmq::socket_t receiver(context, ZMQ_PULL);

receiver.connect("tcp://localhost:5557");

// Connect to weather server

zmq::socket_t subscriber(context, ZMQ_SUB);

subscriber.connect("tcp://localhost:5556");

subscriber.set(zmq::sockopt::subscribe, "10001 ");

// Process messages from both sockets

// We prioritize traffic from the task ventilator

while (1) {

// Process any waiting tasks

zmq::recv_result_t rc;

do {

zmq::message_t task;

rc = receiver.recv(task, zmq::recv_flags::dontwait);

if (rc) {

// process task

}

} while (rc);

// Process any waiting weather updates

do {

zmq::message_t update;

rc = subscriber.recv(update, zmq::recv_flags::dontwait);

if (rc) {

// process weather update

}

} while (rc);

// No activity, so sleep for 1 msec

s_sleep(1);

}

return 0;

}


And to be repetitive: do not use `zmq_msg_init_data()` yet.

;;; -*- Mode:Lisp; Syntax:ANSI-Common-Lisp; -*-

;;;

;;; Reading from multiple sockets in Common Lisp

;;; This version uses a simple recv loop

;;;

;;; Kamil Shakirov <kamils80@gmail.com>

;;;

(defpackage #:zguide.msreader

(:nicknames #:msreader)

(:use #:cl #:zhelpers)

(:export #:main))

(in-package :zguide.msreader)

(defun main ()

;; Prepare our context and socket

(zmq:with-context (context 1)

;; Connect to task ventilator

(zmq:with-socket (receiver context zmq:pull)

(zmq:connect receiver "tcp://localhost:5557")

;; Connect to weather server

(zmq:with-socket (subscriber context zmq:sub)

(zmq:connect subscriber "tcp://localhost:5556")

(zmq:setsockopt subscriber zmq:subscribe "10001 ")

;; Process messages from both sockets

;; We prioritize traffic from the task ventilator

(loop

(handler-case

(loop

(let ((task (make-instance 'zmq:msg)))

(zmq:recv receiver task zmq:noblock)

;; process task

(dump-message task)

(finish-output)))

(zmq:error-again () nil))

;; Process any waiting weather updates

(handler-case

(loop

(let ((update (make-instance 'zmq:msg)))

(zmq:recv subscriber update zmq:noblock)

;; process weather update

(dump-message update)

(finish-output)))

(zmq:error-again () nil))

;; No activity, so sleep for 1 msec

(isys:usleep 1000)))))

(cleanup))


program msreader;

//

// Reading from multiple sockets

// This version uses a simple recv loop

// @author Varga Balazs <bb.varga@gmail.com>

//

{$APPTYPE CONSOLE}

uses

SysUtils

, zmqapi

;

var

context: TZMQContext;

receiver,

subscriber: TZMQSocket;

rc: Integer;

task,

update: TZMQFrame;

begin

// Prepare our context and sockets

context := TZMQContext.Create;

// Connect to task ventilator

receiver := Context.Socket( stPull );

receiver.RaiseEAgain := false;

receiver.connect( 'tcp://localhost:5557' );

// Connect to weather server

subscriber := Context.Socket( stSub );

subscriber.RaiseEAgain := false;

subscriber.connect( 'tcp://localhost:5556' );

subscriber.subscribe( '10001' );

// Process messages from both sockets

// We prioritize traffic from the task ventilator

while True do

begin

// Process any waiting tasks

repeat

task := TZMQFrame.create;

rc := receiver.recv( task, [rfDontWait] );

if rc <> -1 then

begin

// process task

end;

task.Free;

until rc = -1;

// Process any waiting weather updates

repeat

update := TZMQFrame.Create;

rc := subscriber.recv( update, [rfDontWait] );

if rc <> -1 then

begin

// process weather update

end;

update.Free;

until rc = -1;

// No activity, so sleep for 1 msec

sleep (1);

end;

// We never get here but clean up anyhow

receiver.Free;

subscriber.Free;

context.Free;

end.

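`zmq_msg_init_data()` is a zero-copy method and is guaranteed to create trouble for you. There are far more important things to learn about ZeroMQ before you start to worry about shaving off microseconds.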

#! /usr/bin/env escript

%%

%% Reading from multiple sockets

%% This version uses a simple recv loop

%%

main(_) ->

%% Prepare our context and sockets

{ok, Context} = erlzmq:context(),

%% Connect to task ventilator

{ok, Receiver} = erlzmq:socket(Context, pull),

ok = erlzmq:connect(Receiver, "tcp://localhost:5557"),

%% Connect to weather server

{ok, Subscriber} = erlzmq:socket(Context, sub),

ok = erlzmq:connect(Subscriber, "tcp://localhost:5556"),

ok = erlzmq:setsockopt(Subscriber, subscribe, <<"10001">>),

%% Process messages from both sockets

loop(Receiver, Subscriber),

%% We never get here but clean up anyhow

ok = erlzmq:close(Receiver),

ok = erlzmq:close(Subscriber),

ok = erlzmq:term(Context).

loop(Receiver, Subscriber) ->

%% We prioritize traffic from the task ventilator

process_tasks(Receiver),

process_weather(Subscriber),

timer:sleep(1000),

loop(Receiver, Subscriber).

process_tasks(S) ->

%% Process any waiting tasks

case erlzmq:recv(S, [noblock]) of

{error, eagain} -> ok;

{ok, Msg} ->

io:format("Processing task: ~s~n", [Msg]),

process_tasks(S)

end.

process_weather(S) ->

%% Process any waiting weather updates

case erlzmq:recv(S, [noblock]) of

{error, eagain} -> ok;

{ok, Msg} ->

io:format("Processing weather update: ~s~n", [Msg]),

process_weather(S)

end.

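This rich API can be tiresome to work with. The methods are optimized for performance, not simplicity. If you start using these you will almost definitely get them wrong until you've read the man pages with some care. So one of the main jobs of a good language binding is to wrap this API up in classes that are easier to use.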

defmodule Msreader do

@moduledoc """

Generated by erl2ex (http://github.com/dazuma/erl2ex)

From Erlang source: (Unknown source file)

At: 2019-12-20 13:57:27

"""

def main() do

{:ok, context} = :erlzmq.context()

{:ok, receiver} = :erlzmq.socket(context, :pull)

:ok = :erlzmq.connect(receiver, 'tcp://localhost:5557')

{:ok, subscriber} = :erlzmq.socket(context, :sub)

:ok = :erlzmq.connect(subscriber, 'tcp://localhost:5556')

:ok = :erlzmq.setsockopt(subscriber, :subscribe, "10001")

loop(receiver, subscriber)

:ok = :erlzmq.close(receiver)

:ok = :erlzmq.close(subscriber)

:ok = :erlzmq.term(context)

end

def loop(receiver, subscriber) do

process_tasks(receiver)

process_weather(subscriber)

:timer.sleep(1000)

loop(receiver, subscriber)

end

#case(:erlzmq.recv(s, [:noblock])) do

def process_tasks(s) do

case(:erlzmq.recv(s, [:dontwait])) do

{:error, :eagain} ->

:ok

{:ok, msg} ->

:io.format('Processing task: ~s~n', [msg])

process_tasks(s)

end

end

def process_weather(s) do

case(:erlzmq.recv(s, [:dontwait])) do

{:error, :eagain} ->

:ok

{:ok, msg} ->

:io.format('Processing weather update: ~s~n', [msg])

process_weather(s)

end

end

end

Msreader.main

Handling Multiple Sockets #

The main loop of most examples so far has been:

- Wait for a message on a socket.

//

// Reading from multiple sockets

// This version uses a simple recv loop

//

open ZMQ;

// Prepare our context and sockets

var context = zmq_init 1;

// Connect to task ventilator

var receiver = context.mk_socket ZMQ_PULL;

receiver.connect "tcp://localhost:5557";

// Connect to weather server

var subscriber = context.mk_socket ZMQ_SUB;

subscriber.connect "tcp://localhost:5556";

subscriber.set_opt$ zmq_subscribe "10001 ";

// Process messages from both sockets

// We prioritize traffic from the task ventilator

while true do

// Process any waiting tasks

var task = receiver.recv_string_dontwait;

while task != "" do

// process task

task = receiver.recv_string_dontwait;

done

// Process any waiting weather updates

var update = subscriber.recv_string_dontwait;

while update != "" do

// process update

update = subscriber.recv_string_dontwait;

done

Faio::sleep (sys_clock,0.001); // 1 ms

done

- Process the message.

//

// Reading from multiple sockets

// This version uses a simple recv loop

//

package main

import (

"fmt"

zmq "github.com/alecthomas/gozmq"

"time"

)

func main() {

context, _ := zmq.NewContext()

defer context.Close()

// Connect to task ventilator

receiver, _ := context.NewSocket(zmq.PULL)

defer receiver.Close()

receiver.Connect("tcp://localhost:5557")

// Connect to weather server

subscriber, _ := context.NewSocket(zmq.SUB)

defer subscriber.Close()

subscriber.Connect("tcp://localhost:5556")

subscriber.SetSubscribe("10001")

// Process messages from both sockets

// We prioritize traffic from the task ventilator

for {

// ventilator

for b, _ := receiver.Recv(zmq.NOBLOCK); b != nil; b, _ = receiver.Recv(zmq.NOBLOCK) {

// fake process task

}

// weather server

for b, _ := subscriber.Recv(zmq.NOBLOCK); b != nil; b, _ = subscriber.Recv(zmq.NOBLOCK) {

// process task

fmt.Printf("found weather =%s\n", string(b))

}

// No activity, so sleep for 1 msec

time.Sleep(1e6)

}

fmt.Println("done")

}

- Repeat.

To actually read from multiple sockets all at once, use `zmq_poll()`.


package guide;

import org.zeromq.SocketType;

import org.zeromq.ZMQ;

import org.zeromq.ZContext;

//

// Reading from multiple sockets in Java

// This version uses a simple recv loop

//

public class msreader

{

public static void main(String[] args) throws Exception

{

// Prepare our context and sockets

try (ZContext context = new ZContext()) {

// Connect to task ventilator

ZMQ.Socket receiver = context.createSocket(SocketType.PULL);

receiver.connect("tcp://localhost:5557");

// Connect to weather server

ZMQ.Socket subscriber = context.createSocket(SocketType.SUB);

subscriber.connect("tcp://localhost:5556");

subscriber.subscribe("10001 ".getBytes(ZMQ.CHARSET));

// Process messages from both sockets

// We prioritize traffic from the task ventilator

while (!Thread.currentThread().isInterrupted()) {

// Process any waiting tasks

byte[] task;

while ((task = receiver.recv(ZMQ.DONTWAIT)) != null) {

System.out.println("process task");

}

// Process any waiting weather updates

byte[] update;

while ((update = subscriber.recv(ZMQ.DONTWAIT)) != null) {

System.out.println("process weather update");

}

// No activity, so sleep for 1 msec

Thread.sleep(1);

}

}

}

}


#!/usr/bin/env julia

# Reading from multiple sockets

# The ZMQ.jl wrapper implements ZMQ.recv as a blocking function. Nonblocking i/o

# in Julia is typically done using coroutines (Tasks).

# The @async macro puts its enclosed expression in a Task. When the macro is

# executed, its Task gets scheduled and execution continues immediately to

# whatever follows the macro.

# Note: the msreader example in the zguide is presented as a "dirty hack"

# using the ZMQ_DONTWAIT and EAGAIN codes. Since the ZMQ.jl wrapper API

# does not expose DONTWAIT directly, this example skips the hack and instead

# provides an efficient solution.

using ZMQ

# Prepare our context and sockets

context = ZMQ.Context()

# Connect to task ventilator

receiver = Socket(context, ZMQ.PULL)

ZMQ.connect(receiver, "tcp://localhost:5557")

# Connect to weather server

subscriber = Socket(context,ZMQ.SUB)

ZMQ.connect(subscriber,"tcp://localhost:5556")

ZMQ.set_subscribe(subscriber, "10001")

while true

# Process any waiting tasks

@async begin

msg = unsafe_string(ZMQ.recv(receiver))

println(msg)

end

# Process any waiting weather updates

@async begin

msg = unsafe_string(ZMQ.recv(subscriber))

println(msg)

end

# Sleep for 1 msec

sleep(0.001)

end

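An even better way might be to wrap `zmq_poll()` in a framework that turns it into a nice event-driven reactor, but that is significantly more work than we want to cover here.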

--

-- Reading from multiple sockets

-- This version uses a simple recv loop

--

-- Author: Robert G. Jakabosky <bobby@sharedrealm.com>

--

require"zmq"

require"zhelpers"

-- Prepare our context and sockets

local context = zmq.init(1)

-- Connect to task ventilator

local receiver = context:socket(zmq.PULL)

receiver:connect("tcp://localhost:5557")

-- Connect to weather server

local subscriber = context:socket(zmq.SUB)

subscriber:connect("tcp://localhost:5556")

subscriber:setopt(zmq.SUBSCRIBE, "10001 ")

-- Process messages from both sockets

-- We prioritize traffic from the task ventilator

while true do

-- Process any waiting tasks

local msg

while true do

msg = receiver:recv(zmq.NOBLOCK)

if not msg then break end

-- process task

end

-- Process any waiting weather updates

while true do

msg = subscriber:recv(zmq.NOBLOCK)

if not msg then break end

-- process weather update

end

-- No activity, so sleep for 1 msec

s_sleep (1)

end

-- We never get here but clean up anyhow

receiver:close()

subscriber:close()

context:term()

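Let's start with a dirty hack, partly for the fun of not doing it right, but mainly because it lets me show you how to do nonblocking socket reads. Here is a simple example of reading from two sockets using nonblocking reads. This rather confused program acts both as a subscriber to weather updates and as a worker for parallel tasks.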


/* msreader.m: Reads from multiple sockets the hard way.

* *** DON'T DO THIS - see mspoller.m for a better example. *** */

#import "ZMQObjC.h"

static NSString *const kTaskVentEndpoint = @"tcp://localhost:5557";

static NSString *const kWeatherServerEndpoint = @"tcp://localhost:5556";

#define MSEC_PER_NSEC (1000000)

int

main(void)

{

NSAutoreleasePool *pool = [[NSAutoreleasePool alloc] init];

ZMQContext *ctx = [[[ZMQContext alloc] initWithIOThreads:1U] autorelease];

/* Connect to task ventilator. */

ZMQSocket *receiver = [ctx socketWithType:ZMQ_PULL];

[receiver connectToEndpoint:kTaskVentEndpoint];

/* Connect to weather server. */

ZMQSocket *subscriber = [ctx socketWithType:ZMQ_SUB];

[subscriber connectToEndpoint:kWeatherServerEndpoint];

NSData *subData = [@"10001" dataUsingEncoding:NSUTF8StringEncoding];

[subscriber setData:subData forOption:ZMQ_SUBSCRIBE];

/* Process messages from both sockets, prioritizing the task vent. */

/* Could fair queue by checking each socket for activity in turn, rather

* than continuing to service the current socket as long as it is busy. */

struct timespec msec = {0, MSEC_PER_NSEC};

for (;;) {

/* Worst case: a task is always pending and we never get to weather,

* or vice versa. In such a case, memory use would rise without

* limit if we did not ensure the objects autoreleased by a single loop

* will be autoreleased whether we leave or continue in the loop. */

NSAutoreleasePool *p;

/* Process any waiting tasks. */

for (p = [[NSAutoreleasePool alloc] init];

nil != [receiver receiveDataWithFlags:ZMQ_NOBLOCK];

[p drain], p = [[NSAutoreleasePool alloc] init]);

[p drain];

/* No waiting tasks - process any waiting weather updates. */

for (p = [[NSAutoreleasePool alloc] init];

nil != [subscriber receiveDataWithFlags:ZMQ_NOBLOCK];

[p drain], p = [[NSAutoreleasePool alloc] init]);

[p drain];

/* Nothing doing - sleep for a millisecond. */

(void)nanosleep(&msec, NULL);

}

/* NOT REACHED */

[ctx closeSockets];

[pool drain]; /* This finally releases the autoreleased context. */

return EXIT_SUCCESS;

}


# Reading from multiple sockets in Perl

# This version uses a simple recv loop

use strict;

use warnings;

use v5.10;

use ZMQ::FFI;

use ZMQ::FFI::Constants qw(ZMQ_PULL ZMQ_SUB ZMQ_DONTWAIT);

use TryCatch;

use Time::HiRes qw(usleep);

# Connect to task ventilator

my $context = ZMQ::FFI->new();

my $receiver = $context->socket(ZMQ_PULL);

$receiver->connect('tcp://localhost:5557');

# Connect to weather server

my $subscriber = $context->socket(ZMQ_SUB);

$subscriber->connect('tcp://localhost:5556');

$subscriber->subscribe('10001');

# Process messages from both sockets

# We prioritize traffic from the task ventilator

while (1) {

PROCESS_TASK:

while (1) {

try {

my $msg = $receiver->recv(ZMQ_DONTWAIT);

# Process task

}

catch {

last PROCESS_TASK;

}

}

PROCESS_UPDATE:

while (1) {

try {

my $msg = $subscriber->recv(ZMQ_DONTWAIT);

# Process weather update

}

catch {

last PROCESS_UPDATE;

}

}

# No activity, so sleep for 1 msec

usleep(1000);

}


<?php

/*

* Reading from multiple sockets

* This version uses a simple recv loop

* @author Ian Barber <ian(dot)barber(at)gmail(dot)com>

*/

// Prepare our context and sockets

$context = new ZMQContext();

// Connect to task ventilator

$receiver = new ZMQSocket($context, ZMQ::SOCKET_PULL);

$receiver->connect("tcp://localhost:5557");

// Connect to weather server

$subscriber = new ZMQSocket($context, ZMQ::SOCKET_SUB);

$subscriber->connect("tcp://localhost:5556");

$subscriber->setSockOpt(ZMQ::SOCKOPT_SUBSCRIBE, "10001");

// Process messages from both sockets

// We prioritize traffic from the task ventilator

while (true) {

// Process any waiting tasks

// With MODE_NOBLOCK, recv() returns false when no message is waiting

try {

while (($task = $receiver->recv(ZMQ::MODE_NOBLOCK)) !== false) {

// process task

}

} catch (ZMQSocketException $e) {

// do nothing

}

// Process any waiting weather updates

try {

while (($update = $subscriber->recv(ZMQ::MODE_NOBLOCK)) !== false) {

// process weather update

}

} catch (ZMQSocketException $e) {

// do nothing

}

// No activity, so sleep for 1 msec

usleep(1000);

}


# encoding: utf-8
#
# Reading from multiple sockets
# This version uses a simple recv loop
#
# Author: Jeremy Avnet (brainsik) <spork(dash)zmq(at)theory(dot)org>
#

import zmq
import time

# Prepare our context and sockets
context = zmq.Context()

# Connect to task ventilator
receiver = context.socket(zmq.PULL)
receiver.connect("tcp://localhost:5557")

# Connect to weather server
subscriber = context.socket(zmq.SUB)
subscriber.connect("tcp://localhost:5556")
subscriber.setsockopt(zmq.SUBSCRIBE, b"10001")

# Process messages from both sockets
# We prioritize traffic from the task ventilator
while True:

    # Process any waiting tasks
    while True:
        try:
            msg = receiver.recv(zmq.DONTWAIT)
        except zmq.Again:
            break
        # process task

    # Process any waiting weather updates
    while True:
        try:
            msg = subscriber.recv(zmq.DONTWAIT)
        except zmq.Again:
            break
        # process weather update

    # No activity, so sleep for 1 msec
    time.sleep(0.001)


#!/usr/bin/env ruby

# author: Oleg Sidorov <4pcbr> i4pcbr@gmail.com

# this code is licenced under the MIT/X11 licence.

#

# Reading from multiple sockets

# This version uses a simple recv loop

require 'rubygems'

require 'ffi-rzmq'

context = ZMQ::Context.new

# Connect to task ventilator

receiver = context.socket(ZMQ::PULL)

receiver.connect('tcp://localhost:5557')

# Connect to weather server

subscriber = context.socket(ZMQ::SUB)

subscriber.connect('tcp://localhost:5556')

subscriber.setsockopt(ZMQ::SUBSCRIBE, '10001')

while true

if receiver.recv_string(receiver_msg = '',ZMQ::NOBLOCK) && !receiver_msg.empty?

# process task

puts "receiver: #{receiver_msg}"

end

if subscriber.recv_string(subscriber_msg = '',ZMQ::NOBLOCK) && !subscriber_msg.empty?

# process weather update

puts "weather: #{subscriber_msg}"

end

# No activity, so sleep for 1 msec

sleep 0.001

end


use std::{thread, time};

fn main() {

let context = zmq::Context::new();

let receiver = context.socket(zmq::PULL).unwrap();

assert!(receiver.connect("tcp://localhost:5557").is_ok());

let subscriber = context.socket(zmq::SUB).unwrap();

assert!(subscriber.connect("tcp://localhost:5556").is_ok());

assert!(subscriber.set_subscribe(b"10001").is_ok());

loop {

loop {

if receiver.recv_msg(zmq::DONTWAIT).is_err() {

break;

}

}

loop {

if subscriber.recv_msg(zmq::DONTWAIT).is_err() {

break;

}

}

thread::sleep(time::Duration::from_millis(1));

}

}


/*

*

* Reading from multiple sockets in Scala

* This version uses a simple recv loop

*

* @author Giovanni Ruggiero

* @email giovanni.ruggiero@gmail.com

*/

import org.zeromq.ZMQ

object msreader {

def main(args : Array[String]) {

// Prepare our context and sockets

val context = ZMQ.context(1)

// Connect to task ventilator

val receiver = context.socket(ZMQ.PULL)

receiver.connect("tcp://localhost:5557")

// Connect to weather server

val subscriber = context.socket(ZMQ.SUB)

subscriber.connect("tcp://localhost:5556")

subscriber.subscribe("10001 ".getBytes())

// Process messages from both sockets

// We prioritize traffic from the task ventilator

while (true) {

// Process any waiting tasks

var task = receiver.recv(ZMQ.NOBLOCK)

while (task != null) {

// process task

task = receiver.recv(ZMQ.NOBLOCK)

}

// Process any waiting weather updates

var update = subscriber.recv(ZMQ.NOBLOCK)

while (update != null) {

// process weather update

update = subscriber.recv(ZMQ.NOBLOCK)

}

// No activity, so sleep for 1 msec

Thread.sleep(1)

}

}

}


#

# Reading from multiple sockets

# This version uses a simple recv loop

#

package require zmq

# Prepare our context and sockets

zmq context context

# Connect to task ventilator

zmq socket receiver context PULL

receiver connect "tcp://localhost:5557"

# Connect to weather server

zmq socket subscriber context SUB

subscriber connect "tcp://localhost:5556"

subscriber setsockopt SUBSCRIBE "10001"

# Socket to send messages to

zmq socket sender context PUSH

sender connect "tcp://localhost:5558"

# Process messages from both sockets

# We prioritize traffic from the task ventilator

while {1} {

# Process any waiting task

for {set rc 0} {!$rc} {} {

zmq message task

if {[set rc [receiver recv_msg task NOBLOCK]] == 0} {

# Do the work

set string [task data]

puts "Process task: $string"

after $string

# Send result to sink

sender send "$string"

}

task close

}

# Process any waiting weather update

for {set rc 0} {!$rc} {} {

zmq message msg

if {[set rc [subscriber recv_msg msg NOBLOCK]] == 0} {

puts "Weather update: [msg data]"

}

msg close

}

# No activity, sleep for 1 msec

after 1

}

# We never get here but clean up anyhow

sender close

receiver close

subscriber close

context term

Now let's see the same application done the right way, using `zmq_poll()`:

mspoller: Multiple socket poller in C

// Reading from multiple sockets

// This version uses zmq_poll()

#include "zhelpers.h"

int main (void)

{

// Connect to task ventilator

void *context = zmq_ctx_new ();

void *receiver = zmq_socket (context, ZMQ_PULL);

zmq_connect (receiver, "tcp://localhost:5557");

// Connect to weather server

void *subscriber = zmq_socket (context, ZMQ_SUB);

zmq_connect (subscriber, "tcp://localhost:5556");

zmq_setsockopt (subscriber, ZMQ_SUBSCRIBE, "10001 ", 6);

zmq_pollitem_t items [] = {

{ receiver, 0, ZMQ_POLLIN, 0 },

{ subscriber, 0, ZMQ_POLLIN, 0 }

};

// Process messages from both sockets

while (1) {

char msg [256];

zmq_poll (items, 2, -1);

if (items [0].revents & ZMQ_POLLIN) {

int size = zmq_recv (receiver, msg, 255, 0);

if (size != -1) {

// Process task

}

}

if (items [1].revents & ZMQ_POLLIN) {

int size = zmq_recv (subscriber, msg, 255, 0);

if (size != -1) {

// Process weather update

}

}

}

// We never get here

zmq_close (receiver);

zmq_close (subscriber);

zmq_ctx_destroy (context);

return 0;

}

mspoller: Multiple socket poller in C++

//

// Reading from multiple sockets in C++

// This version uses zmq_poll()

//

#include "zhelpers.hpp"

int main (int argc, char *argv[])

{

zmq::context_t context(1);

// Connect to task ventilator

zmq::socket_t receiver(context, ZMQ_PULL);

receiver.connect("tcp://localhost:5557");

// Connect to weather server

zmq::socket_t subscriber(context, ZMQ_SUB);

subscriber.connect("tcp://localhost:5556");

subscriber.set(zmq::sockopt::subscribe, "10001 ");

// Initialize poll set

zmq::pollitem_t items [] = {

{ receiver, 0, ZMQ_POLLIN, 0 },

{ subscriber, 0, ZMQ_POLLIN, 0 }

};

// Process messages from both sockets

while (1) {

zmq::message_t message;

zmq::poll (&items [0], 2, -1);

if (items [0].revents & ZMQ_POLLIN) {

receiver.recv(&message);

// Process task

}

if (items [1].revents & ZMQ_POLLIN) {

subscriber.recv(&message);

// Process weather update

}

}

return 0;

}

mspoller: Multiple socket poller in CL

;;; -*- Mode:Lisp; Syntax:ANSI-Common-Lisp; -*-

;;;

;;; Reading from multiple sockets in Common Lisp

;;; This version uses zmq_poll()

;;;

;;; Kamil Shakirov <kamils80@gmail.com>

;;;

(defpackage #:zguide.mspoller

(:nicknames #:mspoller)

(:use #:cl #:zhelpers)

(:export #:main))

(in-package :zguide.mspoller)

(defun main ()

(zmq:with-context (context 1)

;; Connect to task ventilator

(zmq:with-socket (receiver context zmq:pull)

(zmq:connect receiver "tcp://localhost:5557")

;; Connect to weather server

(zmq:with-socket (subscriber context zmq:sub)

(zmq:connect subscriber "tcp://localhost:5556")

(zmq:setsockopt subscriber zmq:subscribe "10001 ")

;; Initialize poll set

(zmq:with-polls ((items . ((receiver . zmq:pollin)

(subscriber . zmq:pollin))))

;; Process messages from both sockets

(loop

(let ((revents (zmq:poll items)))

(when (= (first revents) zmq:pollin)

(let ((message (make-instance 'zmq:msg)))

(zmq:recv receiver message)

;; Process task

(dump-message message)

(finish-output)))

(when (= (second revents) zmq:pollin)

(let ((message (make-instance 'zmq:msg)))

(zmq:recv subscriber message)

;; Process weather update

(dump-message message)

(finish-output)))))))))

(cleanup))

mspoller: Multiple socket poller in Delphi

program mspoller;

//

// Reading from multiple sockets

// This version uses zmq_poll()

// @author Varga Balazs <bb.varga@gmail.com>

//

{$APPTYPE CONSOLE}

uses

SysUtils

, zmqapi

;

var

context: TZMQContext;

receiver,

subscriber: TZMQSocket;

i,pc: Integer;

task: TZMQFrame;

poller: TZMQPoller;

pollResult: TZMQPollItem;

begin

// Prepare our context and sockets

context := TZMQContext.Create;

// Connect to task ventilator

receiver := Context.Socket( stPull );

receiver.connect( 'tcp://localhost:5557' );

// Connect to weather server

subscriber := Context.Socket( stSub );

subscriber.connect( 'tcp://localhost:5556' );

subscriber.subscribe( '10001' );

// Initialize poll set

poller := TZMQPoller.Create( true );

poller.Register( receiver, [pePollIn] );

poller.Register( subscriber, [pePollIn] );

task := nil;

// Process messages from both sockets

while True do

begin

pc := poller.poll;

if pePollIn in poller.PollItem[0].revents then

begin

receiver.recv( task );

// Process task

FreeAndNil( task );

end;

if pePollIn in poller.PollItem[1].revents then

begin

subscriber.recv( task );

// Process weather update

FreeAndNil( task );

end;

end;

// We never get here

poller.Free;

receiver.Free;

subscriber.Free;

context.Free;

end.

mspoller: Multiple socket poller in Erlang

#! /usr/bin/env escript

%%

%% Reading from multiple sockets

%% This version uses active sockets

%%

main(_) ->

{ok,Context} = erlzmq:context(),

%% Connect to task ventilator

{ok, Receiver} = erlzmq:socket(Context, [pull, {active, true}]),

ok = erlzmq:connect(Receiver, "tcp://localhost:5557"),

%% Connect to weather server

{ok, Subscriber} = erlzmq:socket(Context, [sub, {active, true}]),

ok = erlzmq:connect(Subscriber, "tcp://localhost:5556"),

ok = erlzmq:setsockopt(Subscriber, subscribe, <<"10001">>),

%% Process messages from both sockets

loop(Receiver, Subscriber),

%% We never get here

ok = erlzmq:close(Receiver),

ok = erlzmq:close(Subscriber),

ok = erlzmq:term(Context).

loop(Tasks, Weather) ->

receive

{zmq, Tasks, Msg, _Flags} ->

io:format("Processing task: ~s~n",[Msg]),

loop(Tasks, Weather);

{zmq, Weather, Msg, _Flags} ->

io:format("Processing weather update: ~s~n",[Msg]),

loop(Tasks, Weather)

end.

mspoller: Multiple socket poller in Elixir

defmodule Mspoller do

@moduledoc """

Generated by erl2ex (http://github.com/dazuma/erl2ex)

From Erlang source: (Unknown source file)

At: 2019-12-20 13:57:27

"""

def main() do

{:ok, context} = :erlzmq.context()

{:ok, receiver} = :erlzmq.socket(context, [:pull, {:active, true}])

:ok = :erlzmq.connect(receiver, 'tcp://localhost:5557')

{:ok, subscriber} = :erlzmq.socket(context, [:sub, {:active, true}])

:ok = :erlzmq.connect(subscriber, 'tcp://localhost:5556')

:ok = :erlzmq.setsockopt(subscriber, :subscribe, "10001")

loop(receiver, subscriber)

:ok = :erlzmq.close(receiver)

:ok = :erlzmq.close(subscriber)

:ok = :erlzmq.term(context)

end

def loop(tasks, weather) do

receive do

{:zmq, ^tasks, msg, _flags} ->

:io.format('Processing task: ~s~n', [msg])

loop(tasks, weather)

{:zmq, ^weather, msg, _flags} ->

:io.format('Processing weather update: ~s~n', [msg])

loop(tasks, weather)

end

end

end

Mspoller.main

mspoller: Multiple socket poller in Felix

//

// Reading from multiple sockets

// This version uses zmq_poll()

//

open ZMQ;

var context = zmq_init 1;

// Connect to task ventilator

var receiver = context.mk_socket ZMQ_PULL;

receiver.connect "tcp://localhost:5557";

// Connect to weather server

var subscriber = context.mk_socket ZMQ_SUB;

subscriber.connect "tcp://localhost:5556";

subscriber.set_opt$ zmq_subscribe "10001 ";

// Initialize poll set

var items = varray(

zmq_poll_item (receiver, ZMQ_POLLIN),

zmq_poll_item (subscriber, ZMQ_POLLIN))

;

// Process messages from both sockets

while true do

C_hack::ignore$ poll (items, -1.0);

if (items.[0].revents \& ZMQ_POLLIN).short != 0s do

var s = receiver.recv_string;

// Process task

done

if (items.[1].revents \& ZMQ_POLLIN).short != 0s do

s = subscriber.recv_string;

done

done

mspoller: Multiple socket poller in Go

//

// Reading from multiple sockets

// This version uses zmq.Poll()

//

package main

import (

"fmt"

zmq "github.com/alecthomas/gozmq"

)

func main() {

context, _ := zmq.NewContext()

defer context.Close()

// Connect to task ventilator

receiver, _ := context.NewSocket(zmq.PULL)

defer receiver.Close()

receiver.Connect("tcp://localhost:5557")

// Connect to weather server

subscriber, _ := context.NewSocket(zmq.SUB)

defer subscriber.Close()

subscriber.Connect("tcp://localhost:5556")

subscriber.SetSubscribe("10001")

pi := zmq.PollItems{

zmq.PollItem{Socket: receiver, Events: zmq.POLLIN},

zmq.PollItem{Socket: subscriber, Events: zmq.POLLIN},

}

// Process messages from both sockets

for {

_, _ = zmq.Poll(pi, -1)

switch {

case pi[0].REvents&zmq.POLLIN != 0:

// Process task

pi[0].Socket.Recv(0) // eat the incoming message

case pi[1].REvents&zmq.POLLIN != 0:

// Process weather update

pi[1].Socket.Recv(0) // eat the incoming message

}

}

fmt.Println("done")

}

mspoller: Multiple socket poller in Haskell

{-# LANGUAGE OverloadedStrings #-}

-- Reading from multiple sockets

-- This version uses zmq_poll()

module Main where

import Control.Monad

import System.ZMQ4.Monadic

main :: IO ()

main = runZMQ $ do

-- Connect to task ventilator

receiver <- socket Pull

connect receiver "tcp://localhost:5557"

-- Connect to weather server

subscriber <- socket Sub

connect subscriber "tcp://localhost:5556"

subscribe subscriber "10001 "

-- Process messages from both sockets

forever $

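poll (-1) [ Sock receiver [In] (Just (receiver_callback receiver))

, Sock subscriber [In] (Just (subscriber_callback subscriber))

]

where

-- Process task: read the waiting message so poll does not report it again

receiver_callback :: Receiver t => Socket z t -> [Event] -> ZMQ z ()

receiver_callback sock _ = void (receive sock)

-- Process weather update: likewise, consume the waiting message

subscriber_callback :: Receiver t => Socket z t -> [Event] -> ZMQ z ()

subscriber_callback sock _ = void (receive sock)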

mspoller: Multiple socket poller in Java

package guide;

import org.zeromq.SocketType;

import org.zeromq.ZMQ;

import org.zeromq.ZContext;

//

// Reading from multiple sockets in Java

// This version uses ZMQ.Poller

//

public class mspoller

{

public static void main(String[] args)

{

try (ZContext context = new ZContext()) {

// Connect to task ventilator

ZMQ.Socket receiver = context.createSocket(SocketType.PULL);

receiver.connect("tcp://localhost:5557");

// Connect to weather server

ZMQ.Socket subscriber = context.createSocket(SocketType.SUB);

subscriber.connect("tcp://localhost:5556");

subscriber.subscribe("10001 ".getBytes(ZMQ.CHARSET));

// Initialize poll set

ZMQ.Poller items = context.createPoller(2);

items.register(receiver, ZMQ.Poller.POLLIN);

items.register(subscriber, ZMQ.Poller.POLLIN);

// Process messages from both sockets

while (!Thread.currentThread().isInterrupted()) {

byte[] message;

items.poll();

if (items.pollin(0)) {

message = receiver.recv(0);

System.out.println("Process task");

}

if (items.pollin(1)) {

message = subscriber.recv(0);

System.out.println("Process weather update");

}

}

}

}

}

mspoller: Multiple socket poller in Lua

--

-- Reading from multiple sockets

-- This version uses :poll()

--

-- Author: Robert G. Jakabosky <bobby@sharedrealm.com>

--

require"zmq"

require"zmq.poller"

require"zhelpers"

local context = zmq.init(1)

-- Connect to task ventilator

local receiver = context:socket(zmq.PULL)

receiver:connect("tcp://localhost:5557")

-- Connect to weather server

local subscriber = context:socket(zmq.SUB)

subscriber:connect("tcp://localhost:5556")

subscriber:setopt(zmq.SUBSCRIBE, "10001 ", 6)

local poller = zmq.poller(2)

poller:add(receiver, zmq.POLLIN, function()

local msg = receiver:recv()

-- Process task

end)

poller:add(subscriber, zmq.POLLIN, function()

local msg = subscriber:recv()

-- Process weather update

end)

-- Process messages from both sockets

-- start poller's event loop

poller:start()

-- We never get here

receiver:close()

subscriber:close()

context:term()

mspoller: Multiple socket poller in Node.js

// Reading from multiple sockets.

// This version listens for emitted 'message' events.

var zmq = require('zeromq')

// Connect to task ventilator

var receiver = zmq.socket('pull')

receiver.on('message', function(msg) {

console.log("From Task Ventilator:", msg.toString())

})

// Connect to weather server.

var subscriber = zmq.socket('sub')

subscriber.subscribe('10001')

subscriber.on('message', function(msg) {

console.log("Weather Update:", msg.toString())

})

receiver.connect('tcp://localhost:5557')

subscriber.connect('tcp://localhost:5556')

mspoller: Multiple socket poller in Objective-C

/* msreader.m: Reads from multiple sockets the right way. */

#import "ZMQObjC.h"

static NSString *const kTaskVentEndpoint = @"tcp://localhost:5557";

static NSString *const kWeatherServerEndpoint = @"tcp://localhost:5556";

int

main(void)

{

NSAutoreleasePool *pool = [[NSAutoreleasePool alloc] init];

ZMQContext *ctx = [[[ZMQContext alloc] initWithIOThreads:1U] autorelease];

/* Connect to task ventilator. */

ZMQSocket *receiver = [ctx socketWithType:ZMQ_PULL];

[receiver connectToEndpoint:kTaskVentEndpoint];

/* Connect to weather server. */

ZMQSocket *subscriber = [ctx socketWithType:ZMQ_SUB];

[subscriber connectToEndpoint:kWeatherServerEndpoint];

NSData *subData = [@"10001" dataUsingEncoding:NSUTF8StringEncoding];

[subscriber setData:subData forOption:ZMQ_SUBSCRIBE];

/* Initialize poll set. */

zmq_pollitem_t items[2];

[receiver getPollItem:&items[0] forEvents:ZMQ_POLLIN];

[subscriber getPollItem:&items[1] forEvents:ZMQ_POLLIN];

/* Process messages from both sockets. */

for (;;) {

NSAutoreleasePool *p = [[NSAutoreleasePool alloc] init];

[ZMQContext pollWithItems:items count:2

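timeoutAfterUsec:ZMQPollTimeoutNever];

if (items[0].revents & ZMQ_POLLIN) {

NSData *task = [receiver receiveDataWithFlags:0];

/* Process task. */

}

if (items[1].revents & ZMQ_POLLIN) {

NSData *update = [subscriber receiveDataWithFlags:0];

/* Process weather update. */

}

[p drain];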

}

/* NOT REACHED */

[ctx closeSockets];

[pool drain]; /* This finally releases the autoreleased context. */

return EXIT_SUCCESS;

}

mspoller: Multiple socket poller in Perl

# Reading from multiple sockets in Perl

# This version uses AnyEvent to poll the sockets

use strict;

use warnings;

use v5.10;

use ZMQ::FFI;

use ZMQ::FFI::Constants qw(ZMQ_PULL ZMQ_SUB);

use AnyEvent;

use EV;

# Connect to the task ventilator

my $context = ZMQ::FFI->new();

my $receiver = $context->socket(ZMQ_PULL);

$receiver->connect('tcp://localhost:5557');

# Connect to weather server

my $subscriber = $context->socket(ZMQ_SUB);

$subscriber->connect('tcp://localhost:5556');

$subscriber->subscribe('10001');

my $pull_poller = AE::io $receiver->get_fd, 0, sub {

while ($receiver->has_pollin) {

my $msg = $receiver->recv();

# Process task

}

};

my $sub_poller = AE::io $subscriber->get_fd, 0, sub {

while ($subscriber->has_pollin) {

my $msg = $subscriber->recv();

# Process weather update

}

};

EV::run;

mspoller: Multiple socket poller in PHP

<?php

/*

* Reading from multiple sockets

* This version uses zmq_poll()

* @author Ian Barber <ian(dot)barber(at)gmail(dot)com>

*/

$context = new ZMQContext();

// Connect to task ventilator

$receiver = new ZMQSocket($context, ZMQ::SOCKET_PULL);

$receiver->connect("tcp://localhost:5557");

// Connect to weather server

$subscriber = new ZMQSocket($context, ZMQ::SOCKET_SUB);

$subscriber->connect("tcp://localhost:5556");

$subscriber->setSockOpt(ZMQ::SOCKOPT_SUBSCRIBE, "10001");

// Initialize poll set

$poll = new ZMQPoll();

$poll->add($receiver, ZMQ::POLL_IN);

$poll->add($subscriber, ZMQ::POLL_IN);

$readable = $writeable = array();

// Process messages from both sockets

while (true) {

$events = $poll->poll($readable, $writeable);

if ($events > 0) {

foreach ($readable as $socket) {

if ($socket === $receiver) {

$message = $socket->recv();

// Process task

} elseif ($socket === $subscriber) {

$message = $socket->recv();

// Process weather update

}

}

}

}

// We never get here

mspoller: Multiple socket poller in Python

# encoding: utf-8

#

# Reading from multiple sockets

# This version uses zmq.Poller()

#

# Author: Jeremy Avnet (brainsik) <spork(dash)zmq(at)theory(dot)org>

#

import zmq

# Prepare our context and sockets

context = zmq.Context()

# Connect to task ventilator

receiver = context.socket(zmq.PULL)

receiver.connect("tcp://localhost:5557")

# Connect to weather server

subscriber = context.socket(zmq.SUB)

subscriber.connect("tcp://localhost:5556")

subscriber.setsockopt(zmq.SUBSCRIBE, b"10001")

# Initialize poll set

poller = zmq.Poller()

poller.register(receiver, zmq.POLLIN)

poller.register(subscriber, zmq.POLLIN)

# Process messages from both sockets

while True:

try:

socks = dict(poller.poll())

except KeyboardInterrupt:

break

if receiver in socks:

message = receiver.recv()

# process task

if subscriber in socks:

message = subscriber.recv()

# process weather update

mspoller: Multiple socket poller in Ruby

#!/usr/bin/env ruby

# author: Oleg Sidorov <4pcbr> i4pcbr@gmail.com

# this code is licenced under the MIT/X11 licence.

#

# Reading from multiple sockets

# This version uses ZMQ::Poller

require 'rubygems'

require 'ffi-rzmq'

context = ZMQ::Context.new

# Connect to task ventilator

receiver = context.socket(ZMQ::PULL)

receiver.connect('tcp://localhost:5557')

# Connect to weather server

subscriber = context.socket(ZMQ::SUB)

subscriber.connect('tcp://localhost:5556')

subscriber.setsockopt(ZMQ::SUBSCRIBE, '10001')

# Initialize a poll set

poller = ZMQ::Poller.new

poller.register(receiver, ZMQ::POLLIN)

poller.register(subscriber, ZMQ::POLLIN)

while true

poller.poll(:blocking)

poller.readables.each do |socket|

if socket === receiver

socket.recv_string(message = '')

# process task

puts "task: #{message}"

elsif socket === subscriber

socket.recv_string(message = '')

# process weather update

puts "weather: #{message}"

end

end

end

mspoller: Multiple socket poller in Rust

fn main() {

let context = zmq::Context::new();

let receiver = context.socket(zmq::PULL).unwrap();

assert!(receiver.connect("tcp://localhost:5557").is_ok());

let subscriber = context.socket(zmq::SUB).unwrap();

assert!(subscriber.connect("tcp://localhost:5556").is_ok());

assert!(subscriber.set_subscribe(b"10001").is_ok());

let items = &mut [

receiver.as_poll_item(zmq::POLLIN),

subscriber.as_poll_item(zmq::POLLIN),

];

loop {

zmq::poll(items, -1).unwrap();

if items[0].is_readable() {

let _ = receiver.recv_msg(0);

}

if items[1].is_readable() {

let _ = subscriber.recv_msg(0);

}

}

}

mspoller: Multiple socket poller in Scala

/*

* Reading from multiple sockets in Scala

* This version uses ZMQ.Poller

*

* @author Giovanni Ruggiero

* @email giovanni.ruggiero@gmail.com

*/

import org.zeromq.ZMQ

object mspoller {

def main(args : Array[String]) {

val context = ZMQ.context(1)

// Connect to task ventilator

val receiver = context.socket(ZMQ.PULL)

receiver.connect("tcp://localhost:5557")

// Connect to weather server

val subscriber = context.socket(ZMQ.SUB)

subscriber.connect("tcp://localhost:5556")

subscriber.subscribe("10001 ".getBytes())

// Initialize poll set

val items = context.poller(2)

items.register(receiver, ZMQ.Poller.POLLIN)

items.register(subscriber, ZMQ.Poller.POLLIN)

// Process messages from both sockets

while (true) {

items.poll()

if (items.pollin(0)) {

val message0 = receiver.recv(0)

// Process task

}

if (items.pollin(1)) {

val message1 = subscriber.recv(0)

// Process weather update

}

}

}

}

mspoller: Multiple socket poller in Tcl

#

# Reading from multiple sockets

# This version uses zmq poll

#

package require zmq

# Prepare our context and sockets

zmq context context

# Connect to task ventilator

zmq socket receiver context PULL

receiver connect "tcp://localhost:5557"

# Connect to weather server

zmq socket subscriber context SUB

subscriber connect "tcp://localhost:5556"

subscriber setsockopt SUBSCRIBE "10001"

# Socket to send messages to

zmq socket sender context PUSH

sender connect "tcp://localhost:5558"

# Initialise poll set

set poll_set [list [list receiver [list POLLIN]] [list subscriber [list POLLIN]]]

# Process message from both sockets

while {1} {

set rpoll_set [zmq poll $poll_set -1]

foreach rpoll $rpoll_set {

switch [lindex $rpoll 0] {

receiver {

if {"POLLIN" in [lindex $rpoll 1]} {

set string [receiver recv]

# Do the work

puts "Process task: $string"

after $string

# Send result to sink

sender send "$string"

}

}

subscriber {

if {"POLLIN" in [lindex $rpoll 1]} {

set string [subscriber recv]

puts "Weather update: $string"

}

}

}

}

# No activity, sleep for 1 msec

after 1

}

# We never get here but clean up anyhow

sender close

receiver close

subscriber close

context term

The item structure has these four members:

typedef struct {

void *socket; // ZeroMQ socket to poll on

int fd; // OR, native file handle to poll on

short events; // Events to poll on

short revents; // Events returned after poll

} zmq_pollitem_t;
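Each item carries either a `socket` or an `fd`, so one poll set can watch ZeroMQ sockets and ordinary file handles together. The sketch below illustrates this under a few assumptions. It uses Python with the pyzmq binding, whose `zmq.Poller.register()` accepts a socket or a raw file descriptor. It expects a POSIX system where `os.pipe()` descriptors can be polled. The `inproc://tasks` endpoint name is made up.

```python
import os
import zmq


def poll_socket_and_fd():
    """Poll one ZeroMQ socket and one OS pipe in the same poll set."""
    ctx = zmq.Context()
    pull = ctx.socket(zmq.PULL)
    pull.bind("inproc://tasks")               # hypothetical endpoint name
    push = ctx.socket(zmq.PUSH)
    push.connect("inproc://tasks")
    read_fd, write_fd = os.pipe()             # a plain OS file handle

    poller = zmq.Poller()
    poller.register(pull, zmq.POLLIN)         # like filling in the item's `socket`
    poller.register(read_fd, zmq.POLLIN)      # like filling in the item's `fd`

    os.write(write_fd, b"x")
    first = dict(poller.poll(1000))           # only the pipe has data so far
    os.read(read_fd, 1)                       # drain the pipe again

    push.send(b"task")
    second = dict(poller.poll(1000))          # now only the ZeroMQ socket is readable
    message = pull.recv()

    result = (read_fd in first, pull in first, pull in second, read_fd in second, message)
    os.close(read_fd)
    os.close(write_fd)
    ctx.destroy(linger=0)
    return result
```

Each round records which handles `poll()` reported as readable: the pipe in the first round, the ZeroMQ socket in the second. In both rounds a single call watched both kinds of handle.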

Multipart Messages #

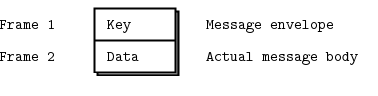

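ZeroMQ lets us compose a message out of several frames, giving us a “multipart message”. Realistic applications use multipart messages heavily, both for wrapping messages with address information and for simple serialization. We'll look at reply envelopes later.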

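What we'll learn now is simply how to blindly and safely read and write multipart messages in any application (such as a proxy) that needs to forward messages without inspecting them.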

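When you work with multipart messages, each part is a `zmq_msg` item. For example, if you are sending a message with five parts, you must construct, send, and destroy five `zmq_msg` items. You can do this in advance (and store the `zmq_msg` items in an array or other structure), or one by one as you send them.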

Here is how we send the frames in a multipart message (we put each frame into a message object):

zmq_msg_send (&message, socket, ZMQ_SNDMORE);

...

zmq_msg_send (&message, socket, ZMQ_SNDMORE);

...

zmq_msg_send (&message, socket, 0);

Here is how we receive and process all the parts in a message, be it single part or multipart:

while (1) {

zmq_msg_t message;

zmq_msg_init (&message);

zmq_msg_recv (&message, socket, 0);

// Process the message frame

...

// Read the "more" flag before closing the frame

int more = zmq_msg_more (&message);

zmq_msg_close (&message);

if (!more)

break; // Last message frame

}
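For readers who want something runnable, here is the same send-then-receive-every-frame logic as a small sketch. It assumes Python with pyzmq rather than the C API. Passing `zmq.SNDMORE` to `send()` plays the role of `ZMQ_SNDMORE`, and the `RCVMORE` socket option plays the role of `zmq_msg_more()`. The `inproc://frames` endpoint is a made-up name.

```python
import zmq


def send_and_receive_frames(frames):
    """Send `frames` as one multipart message, then read it back frame by frame."""
    ctx = zmq.Context()
    pull = ctx.socket(zmq.PULL)
    pull.bind("inproc://frames")              # hypothetical endpoint name
    push = ctx.socket(zmq.PUSH)
    push.connect("inproc://frames")

    # Every frame except the last carries the "more" flag (ZMQ_SNDMORE in C).
    for frame in frames[:-1]:
        push.send(frame, zmq.SNDMORE)
    push.send(frames[-1])

    received = []
    while True:
        received.append(pull.recv())          # one frame per call
        # RCVMORE plays the role of zmq_msg_more(): is another frame coming?
        if not pull.getsockopt(zmq.RCVMORE):
            break                             # that was the last frame

    ctx.destroy(linger=0)
    return received
```

In pyzmq, `send_multipart()` and `recv_multipart()` wrap these two loops. They are what a forwarding proxy would normally call.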

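Some things to know about multipart messages:

- When you send a multipart message, the first part (and all following parts) are only actually sent on the wire when you send the final part.

- If you are using `zmq_poll()`, when you receive the first part of a message, all the rest has also arrived.

- You will receive all parts of a message, or none at all.

- Each part of a message is a separate `zmq_msg` item.

- You will receive all parts of a message whether or not you check the more property.

- On sending, ZeroMQ queues message frames in memory until you hand it the last one, then sends them all.

- There is no way to cancel a partially sent message, except by closing the socket.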
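Two of these points can be observed directly: frames are held back until the last one is sent, and you receive all parts or none. The sketch below again assumes Python with pyzmq and uses a made-up `inproc://atomic` endpoint. It sends only the first frame with `SNDMORE` and checks that the receiving socket reports nothing readable. It then completes the message.

```python
import zmq


def partial_message_is_invisible():
    """Show that a half-sent multipart message cannot be seen by the receiver."""
    ctx = zmq.Context()
    pull = ctx.socket(zmq.PULL)
    pull.bind("inproc://atomic")              # hypothetical endpoint name
    push = ctx.socket(zmq.PUSH)
    push.connect("inproc://atomic")

    push.send(b"header", zmq.SNDMORE)         # first frame only
    early = pull.poll(timeout=200)            # 0 means nothing is readable yet

    push.send(b"body")                        # final frame completes the message
    late = pull.poll(timeout=1000)
    frames = pull.recv_multipart()

    ctx.destroy(linger=0)
    return early, bool(late), frames
```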

Intermediaries and Proxies #

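ZeroMQ aims for decentralized intelligence, but that doesn't mean your network is empty space in the middle. It's filled with message-aware infrastructure, and quite often we build that infrastructure with ZeroMQ. The ZeroMQ plumbing can range from tiny pipes to full-blown service-oriented brokers. The messaging industry calls this intermediation, meaning that the stuff in the middle deals with either side. In ZeroMQ, we call these proxies, queues, forwarders, devices, or brokers, depending on the context.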

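This pattern is extremely common in the real world. That is why our societies and economies are filled with intermediaries whose main function is to reduce the complexity and scaling costs of larger networks. Real-world intermediaries are typically called wholesalers, distributors, managers, and so on.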

The Dynamic Discovery Problem #

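One of the problems you will hit as you design larger distributed architectures is discovery. That is, how do pieces know about each other? It's especially difficult if pieces come and go, so we call this the “dynamic discovery problem”.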

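There are several solutions to dynamic discovery. The simplest is to avoid it entirely by hard-coding (or configuring) the network architecture, so that discovery is done by hand. That is, when you add a new piece, you reconfigure the network to know about it.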

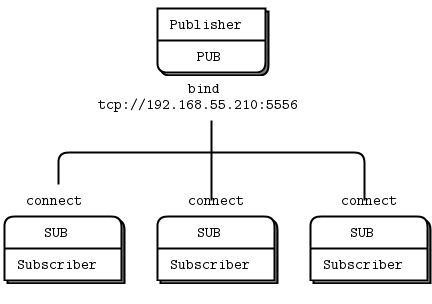

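In practice, this leads to increasingly fragile and unwieldy architectures. Let's say you have one publisher and a hundred subscribers. You connect each subscriber to the publisher by configuring a publisher endpoint in each subscriber. That's easy. Subscribers are dynamic; the publisher is static. Now say you add more publishers. Suddenly, it's not so easy any more. If you continue to connect each subscriber to each publisher, the cost of avoiding dynamic discovery gets higher and higher.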

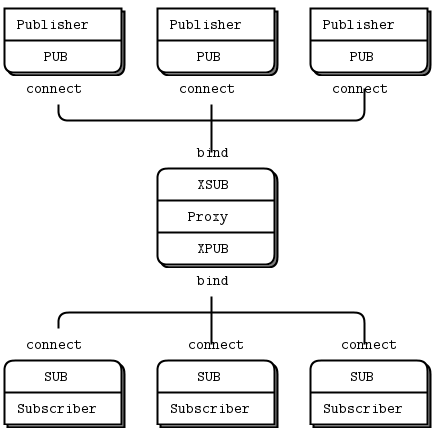

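There are quite a few answers to this, but the very simplest answer is to add an intermediary, that is, a static point in the network to which all other nodes connect. In classic messaging, this is the job of the message broker. ZeroMQ doesn't come with a message broker as such, but it lets us build intermediaries quite easily.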
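A rough count shows why the hard-coded approach stops scaling. As a simplifying assumption, count one connection (and one piece of endpoint configuration) per publisher-subscriber pair when every subscriber connects directly to every publisher. Count one per node when every node connects only to a single intermediary. With $P$ publishers and $S$ subscribers:

$$
C_{\text{direct}} = P \cdot S, \qquad C_{\text{intermediary}} = P + S
$$

With $P = 1$ and $S = 100$ this gives $100$ versus $101$, so the intermediary buys nothing. With $P = 10$, the direct design needs $10 \times 100 = 1000$ connections, while the intermediary needs only $10 + 100 = 110$.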

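You might wonder: if all networks eventually get large enough to need intermediaries, why don't we simply put a message broker in place for all applications? For beginners, it's a fair compromise. Just always use a star topology, forget about performance, and things will usually work. However, message brokers are greedy things. In their role as central intermediaries, they become too complex and too stateful, and eventually they become a problem.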

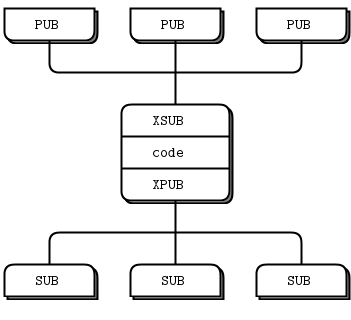

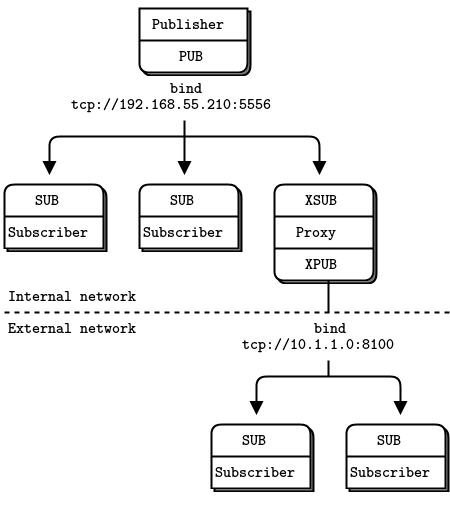

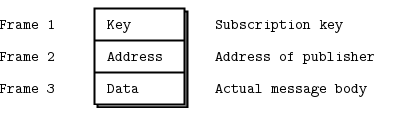

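It's better to think of intermediaries as simple stateless message switches. A good analogy is an HTTP proxy: it's there, but it doesn't have any special role. Adding a pub-sub proxy solves the dynamic discovery problem in our example. We set the proxy in the “middle” of the network. The proxy opens an XSUB socket and an XPUB socket, and binds each one to a well-known IP address and port. Then all other processes connect to the proxy instead of to each other. It becomes trivial to add more subscribers or publishers.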

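We need XPUB and XSUB sockets because ZeroMQ does subscription forwarding from subscribers to publishers. XSUB and XPUB are exactly like SUB and PUB, except that they expose subscriptions as special messages. The proxy has to forward these subscription messages from the subscriber side to the publisher side. It does this by reading them from the XPUB socket and writing them to the XSUB socket. This is the main use case for XSUB and XPUB.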
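A minimal pub-sub proxy along these lines can be sketched in Python with pyzmq. The sketch assumes pyzmq's `zmq.proxy()` wrapper around libzmq's built-in proxy loop. The `inproc://` endpoint names and the sample update `b"10001 72 45"` are invented for the example. The proxy thread binds XSUB for publishers and XPUB for subscribers. `zmq.proxy()` then forwards data in one direction and subscription messages in the other.

```python
import threading
import zmq


def run_proxy(ctx, xsub_endpoint, xpub_endpoint, ready):
    """Forward data XSUB -> XPUB and subscriptions XPUB -> XSUB until ctx.term()."""
    with ctx.socket(zmq.XSUB) as xsub, ctx.socket(zmq.XPUB) as xpub:
        xsub.bind(xsub_endpoint)      # publishers connect here
        xpub.bind(xpub_endpoint)      # subscribers connect here
        ready.set()
        try:
            zmq.proxy(xsub, xpub)     # blocks until the context is terminated
        except zmq.ContextTerminated:
            pass                      # leaving the with-block closes both sockets


def pubsub_through_proxy():
    ctx = zmq.Context()
    ctx.setsockopt(zmq.LINGER, 0)     # default for new sockets: don't wait on close
    front, back = "inproc://publishers", "inproc://subscribers"  # hypothetical names
    ready = threading.Event()
    proxy = threading.Thread(target=run_proxy, args=(ctx, front, back, ready), daemon=True)
    proxy.start()
    ready.wait()

    pub = ctx.socket(zmq.PUB)
    pub.connect(front)
    sub = ctx.socket(zmq.SUB)
    sub.connect(back)
    sub.setsockopt(zmq.SUBSCRIBE, b"10001")

    received = None
    # The subscription needs a moment to travel SUB -> XPUB -> XSUB -> PUB,
    # so keep publishing until the update gets through (or give up).
    for _ in range(100):
        pub.send(b"10001 72 45")
        if sub.poll(timeout=50):
            received = sub.recv()
            break

    pub.close()
    sub.close()
    ctx.term()                        # zmq.proxy() now raises in the proxy thread
    proxy.join()
    return received
```

Neither the publisher nor the subscriber knows about the other. Both know only the proxy's two endpoints, which is the point of the design.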

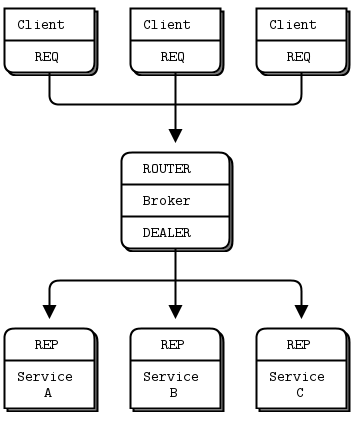

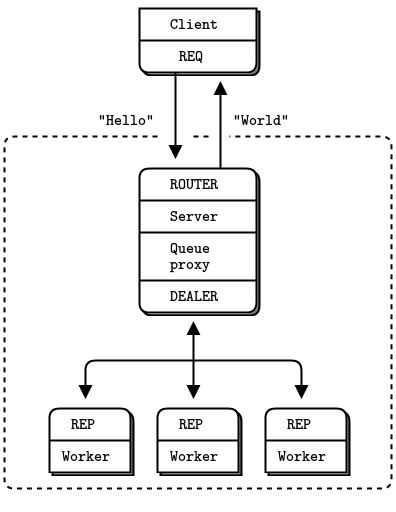

Shared Queue (DEALER and ROUTER sockets) #

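In the Hello World client/server application, we have one client that talks to one service. However, in real cases we usually need to allow multiple services as well as multiple clients. This lets us scale up the power of the service (many threads or processes or nodes rather than just one). The only constraint is that services must be stateless, with all state in the request or in some shared storage such as a database.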

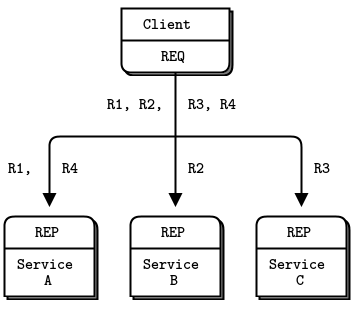

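There are two ways to connect multiple clients to multiple servers. The brute-force way is to connect each client socket to multiple service endpoints. One client socket can connect to multiple service sockets, and the REQ socket will then distribute requests among these services. Let's say you connect a client socket to three service endpoints: A, B, and C. The client makes requests R1, R2, R3, and R4. R1 and R4 go to service A, R2 goes to B, and R3 goes to service C.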
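You can observe the fan-out described above with a small sketch. It assumes Python with pyzmq and uses made-up `inproc://service-A`, `-B` and `-C` endpoints. One REQ socket connects to three REP sockets. Each REP simply echoes the request back, and the function records which service answered each one.

```python
import zmq


def route_requests(requests):
    """Send requests through one REQ socket connected to three REP services."""
    ctx = zmq.Context()
    services = {}
    for name in ("A", "B", "C"):
        rep = ctx.socket(zmq.REP)
        rep.bind(f"inproc://service-{name}")        # hypothetical endpoint names
        services[name] = rep

    client = ctx.socket(zmq.REQ)
    client.setsockopt(zmq.RCVTIMEO, 2000)           # fail instead of hanging forever
    for name in ("A", "B", "C"):
        client.connect(f"inproc://service-{name}")  # one REQ, three connections

    poller = zmq.Poller()
    for rep in services.values():
        poller.register(rep, zmq.POLLIN)

    routed_to = {}
    for request in requests:
        client.send_string(request)
        ready = dict(poller.poll(1000))
        for name, rep in services.items():
            if rep in ready:
                routed_to[request] = name
                rep.send_string(rep.recv_string())  # echo the request as the reply
        client.recv_string()                        # REQ must read a reply before sending again

    ctx.destroy(linger=0)
    return routed_to
```

With libzmq's round-robin you should see R1 and R4 land on the same service, and R1, R2 and R3 on three different ones, matching the A/B/C walk-through. Which service receives R1 is an implementation detail, so it is safer not to rely on it.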

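This design lets you add more clients cheaply. You can also add more services, and each client will distribute its requests to them. But each client has to know the service topology. If you have 100 clients and then decide to add three more services, you need to reconfigure and restart all 100 clients so they know about the three new services.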

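That's clearly not the kind of thing we want to be doing at 3 a.m., when our supercomputing cluster has run out of resources and we desperately need to add a couple of hundred new service nodes. Too many static pieces are like liquid concrete: knowledge is distributed, and the more static pieces you have, the more effort it takes to change the topology. What we want is something sitting between clients and services that centralizes all knowledge of the topology. Ideally, we should be able to add and remove services or clients at any time without touching any other part of the topology.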

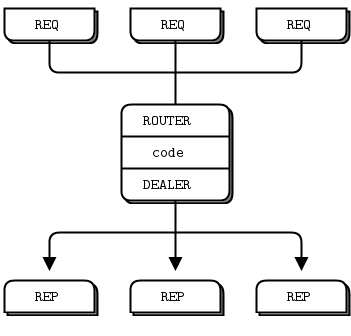

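So we'll write a little message queuing broker that gives us this flexibility. The broker binds to two endpoints, a frontend for clients and a backend for services. It then uses `zmq_poll()` to monitor these two sockets for activity, and when there is some, it shuttles messages between its two sockets. It doesn't actually manage any queues explicitly; ZeroMQ does that automatically on each socket.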

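When you use REQ to talk to REP, you get a strictly synchronous request-reply dialog. The client sends a request. The service reads the request and sends a reply. The client then reads the reply. If either the client or the service tries to do anything else (e.g., sending two requests in a row without waiting for a response), it will get an error.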
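The socket itself enforces this lockstep rule. The sketch below assumes Python with pyzmq and uses a made-up `inproc://lockstep` endpoint. A second `send()` on a REQ socket, made before the reply has been read, fails immediately. pyzmq reports it as a `zmq.ZMQError` whose `errno` is `zmq.EFSM` ("operation cannot be accomplished in current state").

```python
import zmq


def send_twice_errno():
    """Try to send two requests in a row on a REQ socket; return the error code."""
    ctx = zmq.Context()
    service = ctx.socket(zmq.REP)
    service.bind("inproc://lockstep")    # hypothetical endpoint name
    client = ctx.socket(zmq.REQ)
    client.connect("inproc://lockstep")

    client.send(b"R1")                   # first request: accepted
    try:
        client.send(b"R2")               # second request before reading a reply
        errno = None
    except zmq.ZMQError as exc:
        errno = exc.errno

    ctx.destroy(linger=0)
    return errno
```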

But our broker has to be nonblocking. We can obviously use `zmq_poll()` to wait for activity on either socket, but we can't use REP and REQ.

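Luckily, there are two sockets called DEALER and ROUTER that let you do nonblocking request-response. In Chapter 3 - Advanced Request-Reply Patterns, you'll see how DEALER and ROUTER sockets let you build all kinds of asynchronous request-reply flows. For now, we're just going to see how DEALER and ROUTER let us extend REQ-REP across an intermediary, that is, our little broker.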

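In this simple extended request-reply pattern, REQ talks to ROUTER and DEALER talks to REP. Between the DEALER and the ROUTER, we need code (like our broker) that pulls messages off one socket and shoves them onto the other.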
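The shape of such a broker can be sketched in Python with pyzmq. This is an illustrative sketch under stated assumptions, not the guide's own broker:

- The `inproc://frontend` and `inproc://backend` names are invented.
- The broker runs as a thread in the same process.
- A stop flag plus a 100 ms poll timeout stands in for a real shutdown path.

The ROUTER faces REQ clients and the DEALER faces REP workers. Whole multipart messages, including the envelope the ROUTER adds, are copied across unchanged.

```python
import threading
import zmq


def broker(ctx, frontend_endpoint, backend_endpoint, ready, stop):
    """Copy whole multipart messages between a ROUTER and a DEALER socket."""
    frontend = ctx.socket(zmq.ROUTER)    # REQ clients connect here
    backend = ctx.socket(zmq.DEALER)     # REP workers connect here
    frontend.bind(frontend_endpoint)
    backend.bind(backend_endpoint)
    ready.set()

    poller = zmq.Poller()
    poller.register(frontend, zmq.POLLIN)
    poller.register(backend, zmq.POLLIN)
    while not stop.is_set():
        socks = dict(poller.poll(100))   # short timeout so `stop` is noticed
        if frontend in socks:            # request frames: [client id, b"", body]
            backend.send_multipart(frontend.recv_multipart())
        if backend in socks:             # reply frames: [client id, b"", body]
            frontend.send_multipart(backend.recv_multipart())

    frontend.close(linger=0)
    backend.close(linger=0)


def one_round_trip():
    ctx = zmq.Context()
    front, back = "inproc://frontend", "inproc://backend"  # hypothetical names
    ready, stop = threading.Event(), threading.Event()
    thread = threading.Thread(target=broker, args=(ctx, front, back, ready, stop), daemon=True)
    thread.start()
    ready.wait()

    worker = ctx.socket(zmq.REP)
    worker.setsockopt(zmq.RCVTIMEO, 2000)   # fail instead of hanging forever
    worker.connect(back)
    client = ctx.socket(zmq.REQ)
    client.setsockopt(zmq.RCVTIMEO, 2000)
    client.connect(front)

    client.send(b"Hello")
    request = worker.recv()                 # REP strips the envelope, leaving the body
    worker.send(b"World")                   # REP puts the saved envelope back
    reply = client.recv()

    stop.set()
    thread.join()
    worker.close(linger=0)
    client.close(linger=0)
    ctx.term()
    return request, reply
```

pyzmq's `zmq.proxy(frontend, backend)` performs the same shuttling inside libzmq. The explicit poll loop is shown because it mirrors the poll-based broker described above.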

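The request-reply broker binds to two endpoints, one for clients to connect to (the frontend socket) and one for workers to connect to (the backend socket). To test this broker, you will want to change your workers so they connect to the backend socket. Here is a client that shows what I mean: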

rrclient: Request-reply client in C

// Hello World client

// Connects REQ socket to tcp://localhost:5559

// Sends "Hello" to server, expects "World" back

#include "zhelpers.h"

int main (void)

{

void *context = zmq_ctx_new ();

// Socket to talk to server

void *requester = zmq_socket (context, ZMQ_REQ);

zmq_connect (requester, "tcp://localhost:5559");

int request_nbr;

for (request_nbr = 0; request_nbr != 10; request_nbr++) {

s_send (requester, "Hello");

char *string = s_recv (requester);

printf ("Received reply %d [%s]\n", request_nbr, string);

free (string);

}

zmq_close (requester);

zmq_ctx_destroy (context);

return 0;

}

rrclient: Request-reply client in C++

// Request-reply client in C++

// Connects REQ socket to tcp://localhost:5559

// Sends "Hello" to server, expects "World" back

//

#include "zhelpers.hpp"

int main (int argc, char *argv[])

{

zmq::context_t context(1);

zmq::socket_t requester(context, ZMQ_REQ);

requester.connect("tcp://localhost:5559");

for( int request = 0 ; request < 10 ; request++) {

s_send (requester, std::string("Hello"));

std::string string = s_recv (requester);

std::cout << "Received reply " << request

<< " [" << string << "]" << std::endl;

}

}

rrclient: Request-reply client in CL

;;; -*- Mode:Lisp; Syntax:ANSI-Common-Lisp; -*-

;;;

;;; Hello World client in Common Lisp

;;; Connects REQ socket to tcp://localhost:5559

;;; Sends "Hello" to server, expects "World" back

;;;

;;; Kamil Shakirov <kamils80@gmail.com>

;;;

(defpackage #:zguide.rrclient

(:nicknames #:rrclient)

(:use #:cl #:zhelpers)

(:export #:main))

(in-package :zguide.rrclient)

(defun main ()

(zmq:with-context (context 1)

;; Socket to talk to server

(zmq:with-socket (requester context zmq:req)

(zmq:connect requester "tcp://localhost:5559")

(dotimes (request-nbr 10)

(let ((request (make-instance 'zmq:msg :data "Hello")))

(zmq:send requester request))

(let ((response (make-instance 'zmq:msg)))

(zmq:recv requester response)

(message "Received reply ~D: [~A]~%"

request-nbr (zmq:msg-data-as-string response))))))

(cleanup))

rrclient: Request-reply client in Delphi

program rrclient;

//

// Hello World client

// Connects REQ socket to tcp://localhost:5559

// Sends "Hello" to server, expects "World" back

// @author Varga Balazs <bb.varga@gmail.com>

//

{$APPTYPE CONSOLE}

uses

SysUtils

, zmqapi

;

var

context: TZMQContext;

requester: TZMQSocket;

i: Integer;

s: Utf8String;

begin

context := TZMQContext.Create;

// Socket to talk to server

requester := Context.Socket( stReq );

requester.connect( 'tcp://localhost:5559' );

for i := 0 to 9 do

begin

requester.send( 'Hello' );

requester.recv( s );

Writeln( Format( 'Received reply %d [%s]',[i, s] ) );

end;

requester.Free;

context.Free;

end.

rrclient: Request-reply client in Erlang

#! /usr/bin/env escript

%%

%% Hello World client

%% Connects REQ socket to tcp://localhost:5559

%% Sends "Hello" to server, expects "World" back

%%

main(_) ->

{ok, Context} = erlzmq:context(),

%% Socket to talk to server

{ok, Requester} = erlzmq:socket(Context, req),

ok = erlzmq:connect(Requester, "tcp://localhost:5559"),

lists:foreach(

fun(Num) ->

erlzmq:send(Requester, <<"Hello">>),

{ok, Reply} = erlzmq:recv(Requester),

io:format("Received reply ~b [~s]~n", [Num, Reply])

end, lists:seq(1, 10)),

ok = erlzmq:close(Requester),

ok = erlzmq:term(Context).

rrclient: Request-reply client in Elixir

defmodule Rrclient do

@moduledoc """

Generated by erl2ex (http://github.com/dazuma/erl2ex)

From Erlang source: (Unknown source file)

At: 2019-12-20 13:57:31

"""

def main() do

{:ok, context} = :erlzmq.context()

{:ok, requester} = :erlzmq.socket(context, :req)

:ok = :erlzmq.connect(requester, 'tcp://localhost:5559')

:lists.foreach(fn num ->

:erlzmq.send(requester, "Hello")

{:ok, reply} = :erlzmq.recv(requester)

:io.format('Received reply ~b [~s]~n', [num, reply])

end, :lists.seq(1, 10))

:ok = :erlzmq.close(requester)

:ok = :erlzmq.term(context)

end

end

Rrclient.main()

rrclient: Request-reply client in Go

// Hello World client

// Connects REQ socket to tcp://localhost:5559

// Sends "Hello" to server, expects "World" back

//

// Author: Brendan Mc.

// Requires: http://github.com/alecthomas/gozmq

package main

import (

"fmt"

zmq "github.com/alecthomas/gozmq"

)

func main() {

context, _ := zmq.NewContext()

defer context.Close()

// Socket to talk to server

requester, _ := context.NewSocket(zmq.REQ)

defer requester.Close()

requester.Connect("tcp://localhost:5559")

for i := 0; i < 10; i++ {

requester.Send([]byte("Hello"), 0)

reply, _ := requester.Recv(0)

fmt.Printf("Received reply %d [%s]\n", i, reply)

}

}

rrclient: Request-reply client in Haskell

{-# LANGUAGE OverloadedStrings #-}

-- |

-- Request/Reply Hello World with broker (p.50)

-- Connects REQ socket to tcp://localhost:5559

-- Sends "Hello" to server, expects "World" back

--

-- Use with `rrbroker.hs` and `rrworker.hs`

-- You need to start the broker first !

module Main where

import System.ZMQ4.Monadic

import Control.Monad (forM_)

import Data.ByteString.Char8 (unpack)

import Text.Printf

main :: IO ()

main =

runZMQ $ do

requester <- socket Req

connect requester "tcp://localhost:5559"

forM_ [1..10] $ \i -> do

send requester [] "Hello"

msg <- receive requester

liftIO $ printf "Received reply %d %s\n" (i ::Int) (unpack msg)

rrclient: Request-reply client in Haxe

package ;

import neko.Lib;

import haxe.io.Bytes;

import org.zeromq.ZMQ;

import org.zeromq.ZMQContext;

import org.zeromq.ZMQSocket;

/**

* Hello World Client

* Connects REQ socket to tcp://localhost:5559

* Sends "Hello" to server, expects "World" back

*

* See: https://zguide.zeromq.cn/page:all#A-Request-Reply-Broker

*

* Use with RrServer and RrBroker

*/

class RrClient

{

public static function main() {

var context:ZMQContext = ZMQContext.instance();

Lib.println("** RrClient (see: https://zguide.zeromq.cn/page:all#A-Request-Reply-Broker)");

var requester:ZMQSocket = context.socket(ZMQ_REQ);

requester.connect ("tcp://localhost:5559");

Lib.println ("Launch and connect client.");

// Do 10 requests, waiting each time for a response

for (i in 0...10) {

var requestString = "Hello ";

// Send the message

requester.sendMsg(Bytes.ofString(requestString));

// Wait for the reply

var msg:Bytes = requester.recvMsg();

Lib.println("Received reply " + i + ": [" + msg.toString() + "]");

}

// Shut down socket and context

requester.close();

context.term();

}

}

rrclient: Request-reply client in Java

package guide;

import org.zeromq.SocketType;

import org.zeromq.ZMQ;

import org.zeromq.ZMQ.Socket;

import org.zeromq.ZContext;

/**

* Hello World client

* Connects REQ socket to tcp://localhost:5559

* Sends "Hello" to server, expects "World" back

*/

public class rrclient

{

public static void main(String[] args)

{

try (ZContext context = new ZContext()) {

// Socket to talk to server

Socket requester = context.createSocket(SocketType.REQ);

requester.connect("tcp://localhost:5559");

System.out.println("launch and connect client.");

for (int request_nbr = 0; request_nbr < 10; request_nbr++) {

requester.send("Hello", 0);

String reply = requester.recvStr(0);

System.out.println(

"Received reply " + request_nbr + " [" + reply + "]"

);

}

}

}

}

rrclient: Request-reply client in Lua

--

-- Hello World client

-- Connects REQ socket to tcp://localhost:5559

-- Sends "Hello" to server, expects "World" back

--

-- Author: Robert G. Jakabosky <bobby@sharedrealm.com>

--

require"zmq"

require"zhelpers"

local context = zmq.init(1)

-- Socket to talk to server

local requester = context:socket(zmq.REQ)

requester:connect("tcp://localhost:5559")

for n=0,9 do

requester:send("Hello")

local msg = requester:recv()

printf ("Received reply %d [%s]\n", n, msg)

end

requester:close()

context:term()
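The Lua client, like the Haxe one ("Shut down socket and context"), closes its socket before terminating the context. The order matters: in libzmq, terminating a context blocks until every socket created in it has been closed. The Java client gets the same effect from try-with-resources, since closing a `ZContext` also destroys the sockets it created. A minimal sketch of enforcing that order automatically with Python's `contextlib.ExitStack`, which runs its callbacks last-in, first-out; `FakeContext` and `FakeSocket` are hypothetical stand-ins that only record calls, not a real binding.

```python
from __future__ import annotations

from contextlib import ExitStack


class FakeContext:
    """Hypothetical stand-in for a ZeroMQ context; records lifecycle calls."""

    def __init__(self, log: list[str]):
        self.log = log

    def socket(self, kind: str) -> FakeSocket:
        self.log.append(f"socket({kind})")
        return FakeSocket(kind, self.log)

    def term(self) -> None:
        self.log.append("context.term()")


class FakeSocket:
    """Hypothetical stand-in for a ZeroMQ socket."""

    def __init__(self, kind: str, log: list[str]):
        self.kind = kind
        self.log = log

    def close(self) -> None:
        self.log.append(f"{self.kind}.close()")


def run(log: list[str]) -> None:
    with ExitStack() as stack:
        context = FakeContext(log)
        stack.callback(context.term)       # registered first, so it runs last
        requester = context.socket("REQ")
        stack.callback(requester.close)    # registered last, so it runs first
        log.append("... 10 request/reply round-trips ...")
    # Leaving the with-block has run requester.close(), then context.term().


if __name__ == "__main__":
    events: list[str] = []
    run(events)
    print("\n".join(events))
```

Because `ExitStack` runs its callbacks even when the block raises, the socket is still closed before the context if a request fails partway through the loop.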

rrclient: Request-reply client in Node.js

// Hello World client in Node.js
// Connects REQ socket to tcp://localhost:5559
// Sends "Hello" to server, expects "World" back

var zmq = require('zeromq')
  , requester = zmq.socket('req');

requester.connect('tcp://localhost:5559');

var replyNbr = 0;
requester.on('message', function(msg) {
  console.log('got reply', replyNbr, msg.toString());
  replyNbr += 1;
});

for (var i = 0; i < 10; ++i) {
  requester.send("Hello");
}
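Unlike the other clients, the Node.js version does not wait inside its loop: it calls `send` ten times up front and handles replies in a `'message'` callback. This presumably works because the (legacy) zeromq.js callback API buffers outgoing messages and releases the next one only once the socket is writable again, while the REQ socket underneath still allows just one request in flight. The sketch below models that pattern with Python's `asyncio` rather than reproducing the binding's internals: requests are queued immediately, a single sender keeps one request outstanding, and replies go to a callback. `fake_server` and `run_client` are hypothetical.

```python
from __future__ import annotations

import asyncio


async def fake_server(request: bytes) -> bytes:
    """Hypothetical stand-in for the broker/server: always replies b"World"."""
    await asyncio.sleep(0)  # yield to the event loop, as a network hop would
    return b"World"


async def run_client(n_requests: int = 10) -> list[str]:
    outbox: asyncio.Queue[bytes] = asyncio.Queue()
    received: list[str] = []

    def on_message(reply: bytes) -> None:
        # Plays the role of requester.on('message', ...) in the Node.js client.
        received.append(f"got reply {len(received)} {reply.decode()}")

    # Plays the role of the Node.js for-loop: queue every request at once.
    for _ in range(n_requests):
        outbox.put_nowait(b"Hello")

    # A single sender keeps exactly one request in flight, like a REQ socket.
    while not outbox.empty():
        request = outbox.get_nowait()
        reply = await fake_server(request)
        on_message(reply)
    return received


if __name__ == "__main__":
    for line in asyncio.run(run_client()):
        print(line)
```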

rrclient: Request-reply client in Objective-C

rrclient: Request-reply client in ooc

rrclient: Request-reply client in Perl

# Hello world client in Perl
# Connects REQ socket to tcp://localhost:5559
# Sends "Hello" to server, expects "World" back

use strict;
use warnings;
use v5.10;

use ZMQ::FFI;
use ZMQ::FFI::Constants qw(ZMQ_REQ);

my $context = ZMQ::FFI->new();

# Socket to talk to server
my $requester = $context->socket(ZMQ_REQ);
$requester->connect('tcp://localhost:5559');

for my $request_nbr (1..10) {
    $requester->send("Hello");
    my $string = $requester->recv();
    say "Received reply $request_nbr [$string]";
}

rrclient: Request-reply client in PHP

<?php
/*
 *  Hello World client
 *  Connects REQ socket to tcp://localhost:5559
 *  Sends "Hello" to server, expects "World" back
 * @author Ian Barber <ian(dot)barber(at)gmail(dot)com>
 */

$context = new ZMQContext();

//  Socket to talk to server
$requester = new ZMQSocket($context, ZMQ::SOCKET_REQ);
$requester->connect("tcp://localhost:5559");

for ($request_nbr = 0; $request_nbr < 10; $request_nbr++) {
    $requester->send("Hello");
    $string = $requester->recv();
    printf ("Received reply %d [%s]%s", $request_nbr, $string, PHP_EOL);
}
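These clients are meant to run against rrbroker and rrserver (the Haxe header says "Use with RrServer and RrBroker"). A client only ever talks to the broker's port 5559; the broker passes requests on to whichever servers are attached behind it, so servers can be added without touching the client. The sketch below shows that fan-out under a simplifying assumption: plain round-robin over a fixed list of servers. The real broker uses ROUTER and DEALER sockets and leaves the distribution to ZeroMQ. `make_server` and `broker_dispatch` are hypothetical names, and the server names in the replies exist only to make the rotation visible.

```python
from __future__ import annotations

from itertools import cycle
from typing import Callable

Server = Callable[[bytes], bytes]


def make_server(name: str) -> Server:
    """Hypothetical rrserver stand-in that tags its replies with its name."""
    def handle(request: bytes) -> bytes:
        return f"World from {name}".encode()
    return handle


def broker_dispatch(requests: list[bytes], servers: list[Server]) -> list[bytes]:
    """Forward each request to the next server in turn and collect the replies.

    Replies come back in request order because a REQ client waits for each
    reply before sending its next request.
    """
    next_server = cycle(servers)
    return [next(next_server)(request) for request in requests]


if __name__ == "__main__":
    servers = [make_server("A"), make_server("B")]
    for reply in broker_dispatch([b"Hello"] * 4, servers):
        print(reply.decode())
```

With one server, every request goes to it; with two, requests alternate between them, and the client code stays exactly the same.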

rrclient: Request-reply client in Python

#
#   Request-reply client in Python
#   Connects REQ socket to tcp://localhost:5559
#   Sends "Hello" to server, expects "World" back
#
import zmq

#  Prepare our context and sockets
context = zmq.Context()
socket = context.socket(zmq.REQ)
socket.connect("tcp://localhost:5559")

#  Do 10 requests, waiting each time for a response
for request in range(1, 11):
    socket.send(b"Hello")
    message = socket.recv()
    print(f"Received reply {request} [{message}]")
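In Python 3, pyzmq's `socket.recv()` returns `bytes`, so the f-string in the Python client prints the reply as `[b'World']` rather than `[World]`. Decoding first matches the output of the Java and Perl clients; pyzmq's `recv_string()` also does the decoding for you. A small helper, assuming the server replies with UTF-8 text (`format_reply` is a hypothetical name):

```python
def format_reply(request: int, message: bytes, encoding: str = "utf-8") -> str:
    """Render a reply as '[World]' instead of "[b'World']"."""
    # errors="replace" keeps the client printing even if a peer sends bad bytes.
    text = message.decode(encoding, errors="replace")
    return f"Received reply {request} [{text}]"


if __name__ == "__main__":
    message = b"World"
    print(f"Received reply 1 [{message}]")  # what the original loop prints
    print(format_reply(1, message))         # the decoded version
```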

rrclient: Request-reply client in Q

rrclient: Request-reply client in Racket

#lang racket
#|
#   Request-reply client in Racket
#   Connects REQ socket to tcp://localhost:5559
#   Sends "Hello" to server, expects "World" back
|#
(require net/zmq)

; Prepare our context and sockets
(define ctxt (context 1))
(define sock (socket ctxt 'REQ))
(socket-connect! sock "tcp://localhost:5559")

; Do 10 requests, waiting each time for a response
(for ([request (in-range 10)])
  (printf "Sending request ~a...\n" request)
  (socket-send! sock #"Hello")

  ; Get the reply.
  (define message (socket-recv! sock))
  (printf "Received reply ~a [~a]\n" request message))

(context-close! ctxt)

rrclient: Request-reply client in Ruby

#!/usr/bin/env ruby
# author: Oleg Sidorov <4pcbr> i4pcbr@gmail.com
# this code is licenced under the MIT/X11 licence.

require 'rubygems'
require 'ffi-rzmq'

context = ZMQ::Context.new
socket = context.socket(ZMQ::REQ)
socket.connect('tcp://localhost:5559')

10.times do |request|
  string = "Hello #{request}"
  socket.send_string(string)
  puts "Sending string [#{string}]"
  socket.recv_string(message = '')
  puts "Received reply #{request}[#{message}]"
end

rrclient: Request-reply client in Rust

fn main() {
    let context = zmq::Context::new();
    let requester = context.socket(zmq::REQ).unwrap();

    assert!(requester.connect("tcp://localhost:5559").is_ok());

    for request_nbr in 0..10 {
        requester.send("Hello", 0).unwrap();
        let string = requester.recv_string(0).unwrap().unwrap();
        println!("Received reply {} {}", request_nbr, string);
    }
}

rrclient: Request-reply client in Scala

/*
 *  Hello World client in Scala
 *  Connects REQ socket to tcp://localhost:5559
 *  Sends "Hello" to server, expects "World" back
 *
 * @author Giovanni Ruggiero
 * @email giovanni.ruggiero@gmail.com
 */

import org.zeromq.ZMQ
import org.zeromq.ZMQ.{Context,Socket}

object rrclient{
  def main(args : Array[String]) {
    //  Prepare our context and socket
    val context = ZMQ.context(1)
    val requester = context.socket(ZMQ.REQ)
    requester.connect ("tcp://localhost:5559")

    for (request_nbr <- 1 to 10) {
      val request = "Hello ".getBytes()
      request(request.length-1)=0 //Sets the last byte to 0
      // Send the message
      println("Sending request " + request_nbr + "...")
      requester.send(request, 0)

      //  Get the reply.