第三章 - 高级请求-应答模式 #

在第二章 - 套接字和模式中,我们通过开发一系列小型应用程序,每次探索 ZeroMQ 的新方面,来学习 ZeroMQ 的基础知识。本章我们将继续采用这种方法,探索基于 ZeroMQ 核心请求-应答模式构建的高级模式。

我们将涵盖:

- 请求-应答机制如何工作

- 如何组合使用 REQ、REP、DEALER 和 ROUTER 套接字

- ROUTER 套接字的工作原理详解

- 负载均衡模式

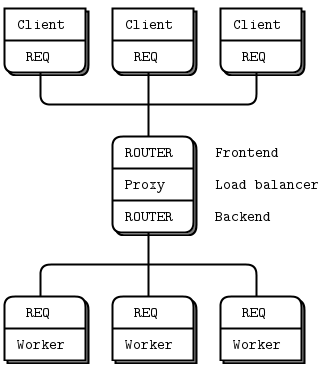

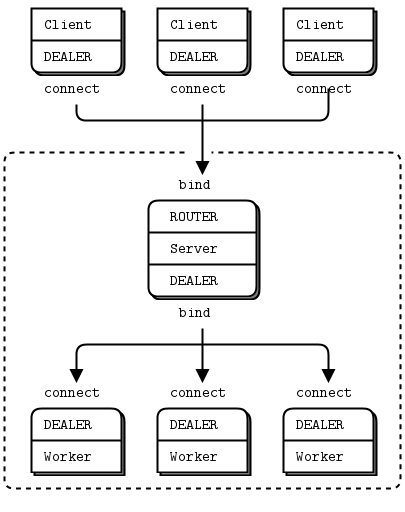

- 构建一个简单的负载均衡消息代理

- 设计 ZeroMQ 高级 API

- 构建异步请求-应答服务器

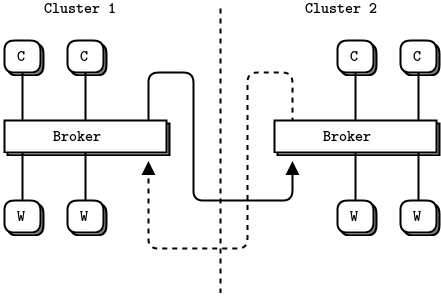

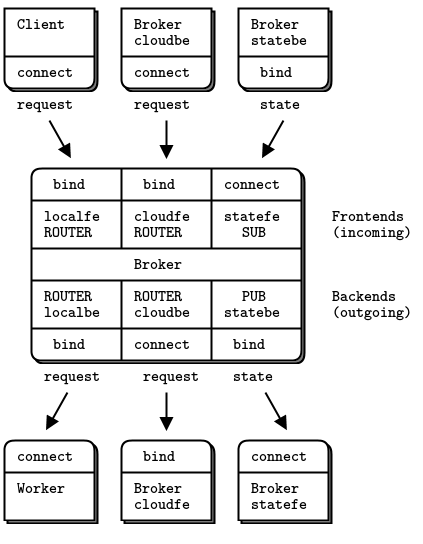

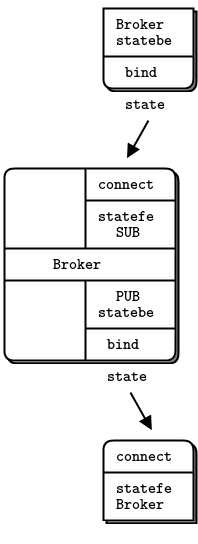

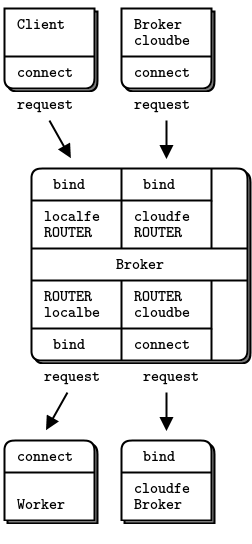

- 详细的代理间路由示例

请求-应答机制 #

我们已经简单了解了多部分消息。现在让我们看看一个主要的用例,即应答消息信封。信封是一种安全地将数据与地址打包在一起的方式,而无需触碰数据本身。通过将应答地址分离到信封中,我们可以编写通用的中介(如 API 和代理),它们无论消息载荷或结构如何,都能创建、读取和删除地址。

在请求-应答模式中,信封包含了应答的返回地址。这是无状态的 ZeroMQ 网络如何创建往返请求-应答对话的方式。

当你使用 REQ 和 REP 套接字时,你甚至看不到信封;这些套接字会自动处理它们。但对于大多数有趣的请求-应答模式,你会需要理解信封,特别是 ROUTER 套接字。我们将一步步进行。

简单应答信封 #

请求-应答交换包括一个请求消息和一个最终的应答消息。在简单请求-应答模式中,每个请求对应一个应答。在更高级的模式中,请求和应答可以异步流动。然而,应答信封的工作方式始终相同。

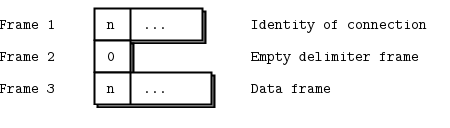

ZeroMQ 应答信封正式由零个或多个应答地址,后跟一个空帧(信封分隔符),再后跟消息体(零个或多个帧)组成。信封由链中协同工作的多个套接字创建。我们将详细分解这一点。

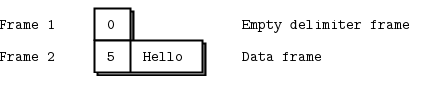

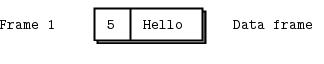

我们将从通过 REQ 套接字发送“Hello”开始。REQ 套接字创建了最简单的应答信封,它没有地址,只有一个空的分隔符帧和包含“Hello”字符串的消息帧。这是一个两帧消息。

REP 套接字完成匹配工作:它剥离信封,直到并包括分隔符帧,保存整个信封,并将“Hello”字符串传递给应用程序。因此,我们最初的 Hello World 示例在内部使用了请求-应答信封,但应用程序从未见过它们。

如果你窥探网络数据流,在hwclient和hwserver之间,你会看到:每个请求和每个应答实际上是两个帧,一个空帧,然后是消息体。对于简单的 REQ-REP 对话来说,这似乎没有多大意义。然而,当我们探索 ROUTER 和 DEALER 如何处理信封时,你就会明白其中的原因。

扩展应答信封 #

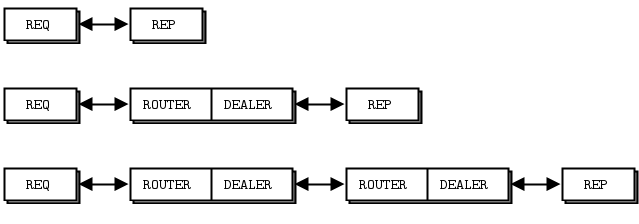

现在,让我们在 REQ-REP 对中间添加一个 ROUTER-DEALER 代理,看看这如何影响应答信封。这就是我们在第二章 - 套接字和模式中已经见过的扩展请求-应答模式。实际上,我们可以插入任意数量的代理步骤。其机制是相同的。

代理执行以下伪代码操作:

prepare context, frontend and backend sockets

while true:

poll on both sockets

if frontend had input:

read all frames from frontend

send to backend

if backend had input:

read all frames from backend

send to frontend

ROUTER 套接字与其他套接字不同,它会跟踪其所有连接,并将这些连接信息告知调用者。告知调用者的方式是将连接的身份放在接收到的每条消息前面。身份,有时也称为地址,只是一个二进制字符串,其含义仅为“这是连接的唯一句柄”。然后,当你通过 ROUTER 套接字发送消息时,你首先发送一个身份帧。

的 zmq_socket()手册页如此描述:

接收消息时,ZMQ_ROUTER 套接字应在将消息传递给应用程序之前,在消息前面加上包含发起对端身份的消息部分。接收到的消息会从所有连接的对端中公平地排队。发送消息时,ZMQ_ROUTER 套接字应移除消息的第一部分,并使用它来确定消息应路由到的对端的身份。

历史注:ZeroMQ v2.2 及更早版本使用 UUID 作为身份。ZeroMQ v3.0 及更高版本默认生成一个 5 字节的身份(0 + 一个随机 32 位整数)。这对网络性能有一些影响,但只在使用多个代理跳跃时才会发生,这种情况很少见。主要改动是为了通过移除对 UUID 库的依赖来简化构建libzmq。

身份是一个难以理解的概念,但如果你想成为 ZeroMQ 专家,这是必不可少的。ROUTER 套接字会为其与之工作的每个连接发明一个随机身份。如果有三个 REQ 套接字连接到 ROUTER 套接字,它将为每个 REQ 套接字发明三个随机身份。

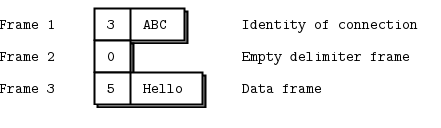

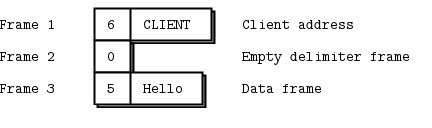

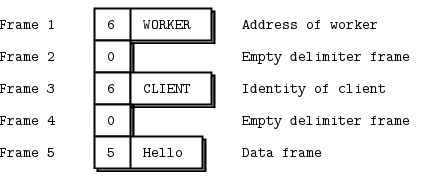

因此,如果我们继续之前的示例,假设 REQ 套接字有一个 3 字节的身份:ABC。在内部,这意味着 ROUTER 套接字维护一个哈希表,它可以在其中搜索ABC并找到 REQ 套接字的 TCP 连接。

当我们从 ROUTER 套接字接收消息时,我们会得到三个帧。

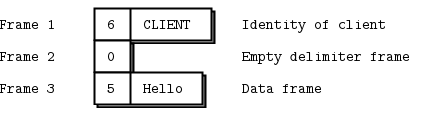

代理循环的核心是“从一个套接字读取,写入另一个套接字”,所以我们实际上将这三个帧原封不动地通过 DEALER 套接字发送出去。如果你现在嗅探网络流量,你会看到这三个帧从 DEALER 套接字飞向 REP 套接字。REP 套接字像以前一样,剥离整个信封,包括新的应答地址,然后再次将“Hello”传递给调用者。

顺带一提,REP 套接字一次只能处理一个请求-应答交换,这就是为什么如果你试图读取多个请求或发送多个应答而不遵循严格的接收-发送循环时,它会报错。

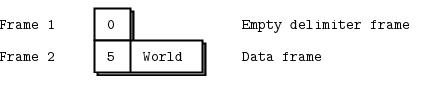

现在你应该能够想象回程路径了。当hwserver发送“World”回来时,REP 套接字会用它保存的信封将消息包装起来,然后通过网络发送一个包含三个帧的应答消息到 DEALER 套接字。

现在 DEALER 读取这三个帧,并将全部三个通过 ROUTER 套接字发送出去。ROUTER 取消息的第一个帧,即ABC身份,并查找与之对应的连接。如果找到,它就会将接下来的两个帧发送到网络上。

REQ 套接字接收到这条消息,并检查第一个帧是否为空分隔符,确实如此。REQ 套接字丢弃该帧,并将“World”传递给调用应用程序,应用程序打印出来,让第一次接触 ZeroMQ 的我们感到惊奇。

有什么用? #

老实说,严格请求-应答或扩展请求-应答的用例有些受限。例如,当服务器由于有 bug 的应用程序代码而崩溃时,没有简单的方法恢复。我们将在第四章 - 可靠请求-应答模式中看到更多相关内容。然而,一旦你掌握了这四种套接字处理信封的方式以及它们之间如何交互,你就可以做很多有用的事情。我们看到了 ROUTER 如何使用应答信封来决定将应答路由回哪个客户端 REQ 套接字。现在让我们换一种方式来表达:

- 每次 ROUTER 给你一条消息时,它都会告诉你这条消息来自哪个对端,以身份的形式。

- 你可以将此信息与哈希表(以身份作为键)结合使用,以跟踪新连接的对端。

- 如果你将身份作为消息的第一个帧前缀,ROUTER 将异步地将消息路由到与其连接的任何对端。

ROUTER 套接字并不关心整个信封。它们不知道空分隔符的存在。它们只关心那个身份帧,以便确定将消息发送到哪个连接。

请求-应答套接字回顾 #

我们来回顾一下:

-

REQ 套接字在消息数据之前向网络发送一个空分隔符帧。REQ 套接字是同步的。REQ 套接字总是发送一个请求,然后等待一个应答。REQ 套接字一次与一个对端通信。如果将 REQ 套接字连接到多个对端,请求会轮流分配给每个对端,并轮流从每个对端接收应答。

-

REP 套接字读取并保存所有身份帧,直到并包括空分隔符,然后将随后的一个或多个帧传递给调用者。REP 套接字是同步的,一次与一个对端通信。如果将 REP 套接字连接到多个对端,请求会以公平的方式从对端读取,应答总是发送给发出上一个请求的那个对端。

-

DEALER 套接字对应答信封一无所知,并像处理任何多部分消息一样处理它。DEALER 套接字是异步的,并且像 PUSH 和 PULL 的组合。它们将发送的消息分发到所有连接,并从所有连接中公平地排队接收消息。

-

ROUTER 套接字对回复信封一无所知,就像 DEALER 一样。它为其连接创建身份,并将这些身份作为接收到的任何消息的第一个帧传递给调用者。反之,当调用者发送消息时,它使用消息的第一个帧作为身份来查找要发送到的连接。ROUTER 是异步的。

请求-应答组合 #

我们有四种请求-应答套接字,每种都有特定的行为。我们已经了解了它们在简单和扩展请求-应答模式中的连接方式。但这些套接字是你可以用来解决许多问题的构建块。

以下是合法的组合:

- REQ 到 REP

- DEALER 到 REP

- REQ 到 ROUTER

- DEALER 到 ROUTER

- DEALER 到 DEALER

- ROUTER 到 ROUTER

以下组合是无效的(我将解释原因):

- REQ 到 REQ

- REQ 到 DEALER

- REP 到 REP

- REP 到 ROUTER

这里有一些记住语义的技巧。DEALER 类似于异步 REQ 套接字,而 ROUTER 类似于异步 REP 套接字。在我们使用 REQ 套接字的地方,可以使用 DEALER;我们只需要自己读写信封。在我们使用 REP 套接字的地方,可以使用 ROUTER;我们只需要自己管理身份。

将 REQ 和 DEALER 套接字视为“客户端”,将 REP 和 ROUTER 套接字视为“服务器”。大多数情况下,你会希望绑定 REP 和 ROUTER 套接字,并将 REQ 和 DEALER 套接字连接到它们。这并非总是如此简单,但这是一个清晰且易于记忆的起点。

REQ 与 REP 组合 #

我们已经介绍了 REQ 客户端与 REP 服务器通信的情况,但我们来看看一个方面:REQ 客户端必须启动消息流。REP 服务器不能与尚未首先向其发送请求的 REQ 客户端通信。从技术上讲,这甚至是不可能的,如果你尝试这样做,API 也会返回一个EFSM错误。

DEALER 与 REP 组合 #

现在,让我们用 DEALER 替换 REQ 客户端。这为我们提供了一个可以与多个 REP 服务器通信的异步客户端。如果我们使用 DEALER 重写“Hello World”客户端,我们将能够发送任意数量的“Hello”请求,而无需等待应答。

当我们使用 DEALER 与 REP 套接字通信时,我们必须精确地模拟 REQ 套接字会发送的信封,否则 REP 套接字会将消息丢弃为无效。因此,要发送消息,我们:

- 发送一个设置了 MORE 标志的空消息帧;然后

- 发送消息体。

接收消息时,我们:

- 接收第一个帧,如果它不是空的,则丢弃整个消息;

- 接收下一个帧并将其传递给应用程序。

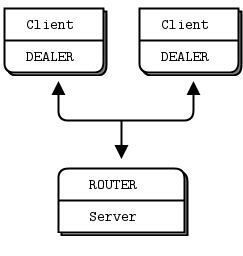

REQ 与 ROUTER 组合 #

就像我们可以用 DEALER 替换 REQ 一样,我们可以用 ROUTER 替换 REP。这为我们提供了一个可以同时与多个 REQ 客户端通信的异步服务器。如果我们使用 ROUTER 重写“Hello World”服务器,我们将能够并行处理任意数量的“Hello”请求。我们在第二章 - 套接字和模式中的mtserver示例中看到了这一点。

我们可以以两种不同的方式使用 ROUTER:

- 作为在前端和后端套接字之间切换消息的代理。

- 作为读取消息并对其进行操作的应用程序。

第一种情况下,ROUTER 只是简单地读取所有帧,包括人工身份帧,并盲目地将其传递下去。第二种情况下,ROUTER 必须知道发送给它的应答信封的格式。由于另一个对端是 REQ 套接字,ROUTER 将接收身份帧、一个空帧,然后是数据帧。

DEALER 与 ROUTER 组合 #

现在我们可以用 DEALER 和 ROUTER 替换 REQ 和 REP,从而获得最强大的套接字组合,即 DEALER 与 ROUTER 通信。它使异步客户端能够与异步服务器通信,并且双方都可以完全控制消息格式。

由于 DEALER 和 ROUTER 都可以处理任意消息格式,如果你希望安全地使用它们,你就必须稍微扮演一下协议设计者的角色。至少,你必须决定是否希望模仿 REQ/REP 应答信封。这取决于你是否确实需要发送应答。

DEALER 与 DEALER 组合 #

你可以用 ROUTER 替换 REP,但如果 DEALER 只与一个对端通信,你也可以用 DEALER 替换 REP。

当你用 DEALER 替换 REP 时,你的工作者可以突然完全异步,发送任意数量的应答。代价是你必须自己管理应答信封,并且要确保正确无误,否则一切都不会工作。我们稍后会看到一个实例。现在姑且说,DEALER 与 DEALER 组合是比较难以正确实现的模式之一,幸运的是我们很少需要它。

ROUTER 与 ROUTER 组合 #

这听起来很适合 N 对 N 连接,但它是最难使用的组合。在深入学习 ZeroMQ 之前,你应该避免使用它。我们将在第四章 - 可靠请求-应答模式的 Freelance 模式中看到一个例子,并在第八章 - 分布式计算框架中看到一种用于点对点工作的 DEALER 与 ROUTER 替代设计。

无效组合 #

通常来说,试图将客户端连接到客户端,或将服务器连接到服务器是一个糟糕的主意,并且不会奏效。然而,与其给出笼统含糊的警告,我将详细解释原因:

-

REQ 到 REQ:双方都想通过向对方发送消息来开始通信,这只有在你精确地安排时序,使得双方同时交换消息时才可能奏效。光是想想就让人头疼。

-

REQ 到 DEALER:理论上你可以这样做,但如果你添加第二个 REQ,它就会崩溃,因为 DEALER 没有办法将应答发送回原始对端。因此,REQ 套接字会混乱,并且/或者返回原本应发送给其他客户端的消息。

-

REP 到 REP:双方都会等待对方发送第一条消息。

-

REP 到 ROUTER:理论上,ROUTER 套接字可以在知道 REP 套接字已经连接并且知道该连接的身份的情况下发起对话并发送格式正确的请求。但这很混乱,并且相比 DEALER 到 ROUTER 没有任何额外的好处。

这些有效和无效组合分类的共同点是,ZeroMQ 套接字连接总是偏向于一个绑定到端点的对端,以及另一个连接到该端点的对端。此外,哪一方绑定,哪一方连接并非任意的,而是遵循自然模式。我们期望“始终存在”的一方进行绑定:它将是服务器、代理、发布者、收集者。“来来往往”的一方进行连接:它将是客户端和工作者。记住这一点将有助于你设计更好的 ZeroMQ 架构。

探索 ROUTER 套接字 #

让我们更仔细地看看 ROUTER 套接字。我们已经了解了它们通过将单独的消息路由到特定连接来工作的方式。我将更详细地解释我们如何识别这些连接,以及当 ROUTER 套接字无法发送消息时会发生什么。

身份和地址 #

ZeroMQ 中的身份概念特指 ROUTER 套接字及其如何识别与其他套接字的连接。更广泛地说,身份在应答信封中用作地址。在大多数情况下,身份是任意的,并且是 ROUTER 套接字的本地概念:它是哈希表中的查找键。独立于身份,对端可以拥有一个物理地址(如网络端点“tcp://192.168.55.117:5670”)或逻辑地址(如 UUID、电子邮件地址或其他唯一键)。

使用 ROUTER 套接字与特定对端通信的应用程序,如果构建了必要的哈希表,可以将逻辑地址转换为身份。因为 ROUTER 套接字只有在对端发送消息时才会公布该连接(到特定对端)的身份,所以你实际上只能回复消息,而不能自发地与对端通信。

即使你颠倒规则,让 ROUTER 连接对端而不是等待对端连接 ROUTER,这也是成立的。然而,你可以强制 ROUTER 套接字使用逻辑地址作为其身份。的zmq_setsockopt参考页将此称为设置套接字身份。其工作方式如下:

- 对端应用程序在绑定或连接之前,设置其对端套接字(DEALER 或 REQ)的ZMQ_IDENTITY选项。

- 通常情况下,对端会连接到已绑定的 ROUTER 套接字。但 ROUTER 也可以连接到对端。

- 在连接时,对端套接字会告诉路由器套接字:“请对该连接使用此身份”。

- 如果对端套接字没有指定,路由器会为其连接生成通常的任意随机身份。

- ROUTER 套接字现在将此逻辑地址作为前缀身份帧提供给应用程序,用于来自该对端的任何消息。

- ROUTER 也期望逻辑地址作为任何传出消息的前缀身份帧。

这是一个简单的例子,说明两个对端连接到 ROUTER 套接字,其中一个强加了一个逻辑地址“PEER2”:

identity: Ada 中的身份检查

identity: Basic 中的身份检查

identity: C 中的身份检查

// Demonstrate request-reply identities

#include "zhelpers.h"

int main (void)

{

void *context = zmq_ctx_new ();

void *sink = zmq_socket (context, ZMQ_ROUTER);

zmq_bind (sink, "inproc://example");

// First allow 0MQ to set the identity

void *anonymous = zmq_socket (context, ZMQ_REQ);

zmq_connect (anonymous, "inproc://example");

s_send (anonymous, "ROUTER uses a generated 5 byte identity");

s_dump (sink);

// Then set the identity ourselves

void *identified = zmq_socket (context, ZMQ_REQ);

zmq_setsockopt (identified, ZMQ_IDENTITY, "PEER2", 5);

zmq_connect (identified, "inproc://example");

s_send (identified, "ROUTER socket uses REQ's socket identity");

s_dump (sink);

zmq_close (sink);

zmq_close (anonymous);

zmq_close (identified);

zmq_ctx_destroy (context);

return 0;

}

identity: C++ 中的身份检查

//

// Demonstrate identities as used by the request-reply pattern. Run this

// program by itself.

//

#include <zmq.hpp>

#include "zhelpers.hpp"

int main () {

zmq::context_t context(1);

zmq::socket_t sink(context, ZMQ_ROUTER);

sink.bind( "inproc://example");

// First allow 0MQ to set the identity

zmq::socket_t anonymous(context, ZMQ_REQ);

anonymous.connect( "inproc://example");

s_send (anonymous, std::string("ROUTER uses a generated 5 byte identity"));

s_dump (sink);

// Then set the identity ourselves

zmq::socket_t identified (context, ZMQ_REQ);

identified.set( zmq::sockopt::routing_id, "PEER2");

identified.connect( "inproc://example");

s_send (identified, std::string("ROUTER socket uses REQ's socket identity"));

s_dump (sink);

return 0;

}

identity: C# 中的身份检查

identity: CL 中的身份检查

;;; -*- Mode:Lisp; Syntax:ANSI-Common-Lisp; -*-

;;;

;;; Demonstrate identities as used by the request-reply pattern in Common Lisp.

;;; Run this program by itself. Note that the utility functions are

;;; provided by zhelpers.lisp. It gets boring for everyone to keep repeating

;;; this code.

;;;

;;; Kamil Shakirov <kamils80@gmail.com>

;;;

(defpackage #:zguide.identity

(:nicknames #:identity)

(:use #:cl #:zhelpers)

(:export #:main))

(in-package :zguide.identity)

(defun main ()

(zmq:with-context (context 1)

(zmq:with-socket (sink context zmq:router)

(zmq:bind sink "inproc://example")

;; First allow 0MQ to set the identity

(zmq:with-socket (anonymous context zmq:req)

(zmq:connect anonymous "inproc://example")

(send-text anonymous "ROUTER uses a generated 5 byte identity")

(dump-socket sink)

;; Then set the identity ourselves

(zmq:with-socket (identified context zmq:req)

(zmq:setsockopt identified zmq:identity "PEER2")

(zmq:connect identified "inproc://example")

(send-text identified "ROUTER socket uses REQ's socket identity")

(dump-socket sink)))))

(cleanup))

identity: Delphi 中的身份检查

program identity;

//

// Demonstrate identities as used by the request-reply pattern. Run this

// program by itself.

// @author Varga Balazs <bb.varga@gmail.com>

//

{$APPTYPE CONSOLE}

uses

SysUtils

, zmqapi

, zhelpers

;

var

context: TZMQContext;

sink,

anonymous,

identified: TZMQSocket;

begin

context := TZMQContext.create;

sink := context.Socket( stRouter );

sink.bind( 'inproc://example' );

// First allow 0MQ to set the identity

anonymous := context.Socket( stReq );

anonymous.connect( 'inproc://example' );

anonymous.send( 'ROUTER uses a generated 5 byte identity' );

s_dump( sink );

// Then set the identity ourself

identified := context.Socket( stReq );

identified.Identity := 'PEER2';

identified.connect( 'inproc://example' );

identified.send( 'ROUTER socket uses REQ''s socket identity' );

s_dump( sink );

sink.Free;

anonymous.Free;

identified.Free;

context.Free;

end.

identity: Erlang 中的身份检查

#! /usr/bin/env escript

%%

%% Demonstrate identities as used by the request-reply pattern.

%%

main(_) ->

{ok, Context} = erlzmq:context(),

{ok, Sink} = erlzmq:socket(Context, router),

ok = erlzmq:bind(Sink, "inproc://example"),

%% First allow 0MQ to set the identity

{ok, Anonymous} = erlzmq:socket(Context, req),

ok = erlzmq:connect(Anonymous, "inproc://example"),

ok = erlzmq:send(Anonymous, <<"ROUTER uses a generated 5 byte identity">>),

erlzmq_util:dump(Sink),

%% Then set the identity ourselves

{ok, Identified} = erlzmq:socket(Context, req),

ok = erlzmq:setsockopt(Identified, identity, <<"PEER2">>),

ok = erlzmq:connect(Identified, "inproc://example"),

ok = erlzmq:send(Identified,

<<"ROUTER socket uses REQ's socket identity">>),

erlzmq_util:dump(Sink),

erlzmq:close(Sink),

erlzmq:close(Anonymous),

erlzmq:close(Identified),

erlzmq:term(Context).

identity: Elixir 中的身份检查

defmodule Identity do

@moduledoc """

Generated by erl2ex (http://github.com/dazuma/erl2ex)

From Erlang source: (Unknown source file)

At: 2019-12-20 13:57:24

"""

def main() do

{:ok, context} = :erlzmq.context()

{:ok, sink} = :erlzmq.socket(context, :router)

:ok = :erlzmq.bind(sink, 'inproc://example')

{:ok, anonymous} = :erlzmq.socket(context, :req)

:ok = :erlzmq.connect(anonymous, 'inproc://example')

:ok = :erlzmq.send(anonymous, "ROUTER uses a generated 5 byte identity")

#:erlzmq_util.dump(sink)

IO.inspect(sink, label: "1. sink")

{:ok, identified} = :erlzmq.socket(context, :req)

:ok = :erlzmq.setsockopt(identified, :identity, "PEER2")

:ok = :erlzmq.connect(identified, 'inproc://example')

:ok = :erlzmq.send(identified, "ROUTER socket uses REQ's socket identity")

#:erlzmq_util.dump(sink)

IO.inspect(sink, label: "2. sink")

:erlzmq.close(sink)

:erlzmq.close(anonymous)

:erlzmq.close(identified)

:erlzmq.term(context)

end

end

Identity.main

identity: F# 中的身份检查

identity: Felix 中的身份检查

identity: Go 中的身份检查

//

// Demonstrate identities as used by the request-reply pattern. Run this

// program by itself.

//

package main

import (

"fmt"

zmq "github.com/alecthomas/gozmq"

)

func dump(sink *zmq.Socket) {

parts, err := sink.RecvMultipart(0)

if err != nil {

fmt.Println(err)

}

for _, msgdata := range parts {

is_text := true

fmt.Printf("[%03d] ", len(msgdata))

for _, char := range msgdata {

if char < 32 || char > 127 {

is_text = false

}

}

if is_text {

fmt.Printf("%s\n", msgdata)

} else {

fmt.Printf("%X\n", msgdata)

}

}

}

func main() {

context, _ := zmq.NewContext()

defer context.Close()

sink, err := context.NewSocket(zmq.ROUTER)

if err != nil {

print(err)

}

defer sink.Close()

sink.Bind("inproc://example")

// First allow 0MQ to set the identity

anonymous, err := context.NewSocket(zmq.REQ)

defer anonymous.Close()

if err != nil {

fmt.Println(err)

}

anonymous.Connect("inproc://example")

err = anonymous.Send([]byte("ROUTER uses a generated 5 byte identity"), 0)

if err != nil {

fmt.Println(err)

}

dump(sink)

// Then set the identity ourselves

identified, err := context.NewSocket(zmq.REQ)

if err != nil {

print(err)

}

defer identified.Close()

identified.SetIdentity("PEER2")

identified.Connect("inproc://example")

identified.Send([]byte("ROUTER socket uses REQ's socket identity"), zmq.NOBLOCK)

dump(sink)

}

identity: Haskell 中的身份检查

{-# LANGUAGE OverloadedStrings #-}

module Main where

import System.ZMQ4.Monadic

import ZHelpers (dumpSock)

main :: IO ()

main =

runZMQ $ do

sink <- socket Router

bind sink "inproc://example"

anonymous <- socket Req

connect anonymous "inproc://example"

send anonymous [] "ROUTER uses a generated 5 byte identity"

dumpSock sink

identified <- socket Req

setIdentity (restrict "PEER2") identified

connect identified "inproc://example"

send identified [] "ROUTER socket uses REQ's socket identity"

dumpSock sink

identity: Haxe 中的身份检查

package ;

import ZHelpers;

import neko.Lib;

import neko.Sys;

import haxe.io.Bytes;

import org.zeromq.ZMQ;

import org.zeromq.ZContext;

import org.zeromq.ZFrame;

import org.zeromq.ZMQSocket;

/**

* Demonstrate identities as used by the request-reply pattern. Run this

* program by itself.

*/

class Identity

{

public static function main() {

var context:ZContext = new ZContext();

Lib.println("** Identity (see: https://zguide.zeromq.cn/page:all#Request-Reply-Envelopes)");

// Socket facing clients

var sink:ZMQSocket = context.createSocket(ZMQ_ROUTER);

sink.bind("inproc://example");

// First allow 0MQ to set the identity

var anonymous:ZMQSocket = context.createSocket(ZMQ_REQ);

anonymous.connect("inproc://example");

anonymous.sendMsg(Bytes.ofString("ROUTER uses a generated 5 byte identity"));

ZHelpers.dump(sink);

// Then set the identity ourselves

var identified:ZMQSocket = context.createSocket(ZMQ_REQ);

identified.setsockopt(ZMQ_IDENTITY, Bytes.ofString("PEER2"));

identified.connect("inproc://example");

identified.sendMsg(Bytes.ofString("ROUTER socket uses REQ's socket identity"));

ZHelpers.dump(sink);

context.destroy();

}

}

identity: Java 中的身份检查

package guide;

import org.zeromq.SocketType;

import org.zeromq.ZMQ;

import org.zeromq.ZMQ.Socket;

import org.zeromq.ZContext;

/**

* Demonstrate identities as used by the request-reply pattern.

*/

public class identity

{

public static void main(String[] args) throws InterruptedException

{

try (ZContext context = new ZContext()) {

Socket sink = context.createSocket(SocketType.ROUTER);

sink.bind("inproc://example");

// First allow 0MQ to set the identity, [00] + random 4byte

Socket anonymous = context.createSocket(SocketType.REQ);

anonymous.connect("inproc://example");

anonymous.send("ROUTER uses a generated UUID", 0);

ZHelper.dump(sink);

// Then set the identity ourself

Socket identified = context.createSocket(SocketType.REQ);

identified.setIdentity("PEER2".getBytes(ZMQ.CHARSET));

identified.connect("inproc://example");

identified.send("ROUTER socket uses REQ's socket identity", 0);

ZHelper.dump(sink);

}

}

}

identity: Julia 中的身份检查

identity: Lua 中的身份检查

--

-- Demonstrate identities as used by the request-reply pattern. Run this

-- program by itself. Note that the utility functions s_ are provided by

-- zhelpers.h. It gets boring for everyone to keep repeating this code.

--

-- Author: Robert G. Jakabosky <bobby@sharedrealm.com>

--

require"zmq"

require"zhelpers"

local context = zmq.init(1)

local sink = context:socket(zmq.ROUTER)

sink:bind("inproc://example")

-- First allow 0MQ to set the identity

local anonymous = context:socket(zmq.REQ)

anonymous:connect("inproc://example")

anonymous:send("ROUTER uses a generated 5 byte identity")

s_dump(sink)

-- Then set the identity ourselves

local identified = context:socket(zmq.REQ)

identified:setopt(zmq.IDENTITY, "PEER2")

identified:connect("inproc://example")

identified:send("ROUTER socket uses REQ's socket identity")

s_dump(sink)

sink:close()

anonymous:close()

identified:close()

context:term()

identity: Node.js 中的身份检查

// Demonstrate request-reply identities

var zmq = require('zeromq'),

zhelpers = require('./zhelpers');

var sink = zmq.socket("router");

sink.bind("inproc://example");

sink.on("message", zhelpers.dumpFrames);

// First allow 0MQ to set the identity

var anonymous = zmq.socket("req");

anonymous.connect("inproc://example");

anonymous.send("ROUTER uses generated 5 byte identity");

// Then set the identity ourselves

var identified = zmq.socket("req");

identified.identity = "PEER2";

identified.connect("inproc://example");

identified.send("ROUTER uses REQ's socket identity");

setTimeout(function() {

anonymous.close();

identified.close();

sink.close();

}, 250);

identity: Objective-C 中的身份检查

identity: ooc 中的身份检查

identity: Perl 中的身份检查

# Demonstrate request-reply identities in Perl

use strict;

use warnings;

use v5.10;

use ZMQ::FFI;

use ZMQ::FFI::Constants qw(ZMQ_ROUTER ZMQ_REQ ZMQ_IDENTITY);

use zhelpers;

my $context = ZMQ::FFI->new();

my $sink = $context->socket(ZMQ_ROUTER);

$sink->bind('inproc://example');

# First allow 0MQ to set the identity

my $anonymous = $context->socket(ZMQ_REQ);

$anonymous->connect('inproc://example');

$anonymous->send('ROUTER uses a generated 5 byte identity');

zhelpers::dump($sink);

# Then set the identity ourselves

my $identified = $context->socket(ZMQ_REQ);

$identified->set_identity('PEER2');

$identified->connect('inproc://example');

$identified->send("ROUTER socket uses REQ's socket identity");

zhelpers::dump($sink);

identity: PHP 中的身份检查

<?php

/*

* Demonstrate identities as used by the request-reply pattern. Run this

* program by itself. Note that the utility functions s_ are provided by

* zhelpers.h. It gets boring for everyone to keep repeating this code.

* @author Ian Barber <ian(dot)barber(at)gmail(dot)com>

*/

include 'zhelpers.php';

$context = new ZMQContext();

$sink = new ZMQSocket($context, ZMQ::SOCKET_ROUTER);

$sink->bind("inproc://example");

// First allow 0MQ to set the identity

$anonymous = new ZMQSocket($context, ZMQ::SOCKET_REQ);

$anonymous->connect("inproc://example");

$anonymous->send("ROUTER uses a generated 5 byte identity");

s_dump ($sink);

// Then set the identity ourselves

$identified = new ZMQSocket($context, ZMQ::SOCKET_REQ);

$identified->setSockOpt(ZMQ::SOCKOPT_IDENTITY, "PEER2");

$identified->connect("inproc://example");

$identified->send("ROUTER socket uses REQ's socket identity");

s_dump ($sink);

identity: Python 中的身份检查

# encoding: utf-8

#

# Demonstrate identities as used by the request-reply pattern. Run this

# program by itself.

#

# Author: Jeremy Avnet (brainsik) <spork(dash)zmq(at)theory(dot)org>

#

import zmq

import zhelpers

context = zmq.Context()

sink = context.socket(zmq.ROUTER)

sink.bind("inproc://example")

# First allow 0MQ to set the identity

anonymous = context.socket(zmq.REQ)

anonymous.connect("inproc://example")

anonymous.send(b"ROUTER uses a generated 5 byte identity")

zhelpers.dump(sink)

# Then set the identity ourselves

identified = context.socket(zmq.REQ)

identified.setsockopt(zmq.IDENTITY, b"PEER2")

identified.connect("inproc://example")

identified.send(b"ROUTER socket uses REQ's socket identity")

zhelpers.dump(sink)

identity: Q 中的身份检查

// Demonstrate identities as used by the request-reply pattern.

\l qzmq.q

ctx:zctx.new[]

sink:zsocket.new[ctx; zmq`ROUTER]

port:zsocket.bind[sink; `inproc://example]

// First allow 0MQ to set the identity

anonymous:zsocket.new[ctx; zmq`REQ]

zsocket.connect[anonymous; `inproc://example]

m0:zmsg.new[]

zmsg.push[m0; zframe.new["ROUTER uses a generated 5 byte identity"]]

zmsg.send[m0; anonymous]

zmsg.dump[zmsg.recv[sink]]

// Then set the identity ourselves

identified:zsocket.new[ctx; zmq`REQ]

zsockopt.set_identity[identified; "PEER2"]

zsocket.connect[identified; `inproc://example]

m1:zmsg.new[]

zmsg.push[m1; zframe.new["ROUTER socket users REQ's socket identity"]]

zmsg.send[m1; identified]

zmsg.dump[zmsg.recv[sink]]

zsocket.destroy[ctx; sink]

zsocket.destroy[ctx; anonymous]

zsocket.destroy[ctx; identified]

zctx.destroy[ctx]

\\

identity: Racket 中的身份检查

identity: Ruby 中的身份检查

#!/usr/bin/env ruby

#

#

# Identity check in Ruby

#

#

require 'ffi-rzmq'

require './zhelpers.rb'

context = ZMQ::Context.new

uri = "inproc://example"

sink = context.socket(ZMQ::ROUTER)

sink.bind(uri)

# 0MQ will set the identity here

anonymous = context.socket(ZMQ::DEALER)

anonymous.connect(uri)

anon_message = ZMQ::Message.new("ROUTER uses a generated 5 byte identity")

anonymous.sendmsg(anon_message)

s_dump(sink)

# Set the identity ourselves

identified = context.socket(ZMQ::DEALER)

identified.setsockopt(ZMQ::IDENTITY, "PEER2")

identified.connect(uri)

identified_message = ZMQ::Message.new("Router uses socket identity")

identified.sendmsg(identified_message)

s_dump(sink)

identity: Rust 中的身份检查

identity: Scala 中的身份检查

// Demonstrate identities as used by the request-reply pattern.

//

// @author Giovanni Ruggiero

// @email giovanni.ruggiero@gmail.com

import org.zeromq.ZMQ

import ZHelpers._

object identity {

def main(args : Array[String]) {

val context = ZMQ.context(1)

val sink = context.socket(ZMQ.DEALER)

sink.bind("inproc://example")

val anonymous = context.socket(ZMQ.REQ)

anonymous.connect("inproc://example")

anonymous.send("ROUTER uses a generated 5 byte identity".getBytes,0)

dump(sink)

val identified = context.socket(ZMQ.REQ)

identified.setIdentity("PEER2" getBytes)

identified.connect("inproc://example")

identified.send("ROUTER socket uses REQ's socket identity".getBytes,0)

dump(sink)

identified.close

}

}

identity: Tcl 中的身份检查

#

# Demonstrate identities as used by the request-reply pattern. Run this

# program by itself.

#

package require zmq

zmq context context

zmq socket sink context ROUTER

sink bind "inproc://example"

# First allow 0MQ to set the identity

zmq socket anonymous context REQ

anonymous connect "inproc://example"

anonymous send "ROUTER uses a generated 5 byte identity"

puts "--------------------------------------------------"

puts [join [sink dump] \n]

# Then set the identity ourselves

zmq socket identified context REQ

identified setsockopt IDENTITY "PEER2"

identified connect "inproc://example"

identified send "ROUTER socket uses REQ's socket identity"

puts "--------------------------------------------------"

puts [join [sink dump] \n]

sink close

anonymous close

identified close

context term

identity: OCaml 中的身份检查

以下是程序输出:

----------------------------------------

[005] 006B8B4567

[000]

[039] ROUTER uses a generated 5 byte identity

----------------------------------------

[005] PEER2

[000]

[038] ROUTER uses REQ's socket identity

ROUTER 错误处理 #

ROUTER 套接字处理无法发送的消息的方式有些简单粗暴:它们会静默丢弃这些消息。这种处理方式在正常工作的代码中是合理的,但会使调试变得困难。“将身份作为第一个帧发送”的方法本身就很微妙,我们在学习时经常会出错,而 ROUTER 在我们搞砸时的冰冷沉默并不具建设性。

自 ZeroMQ v3.2 起,你可以设置一个套接字选项来捕获此错误:ZMQ_ROUTER_MANDATORY。在 ROUTER 套接字上设置此选项后,当你发送消息时提供一个无法路由的身份,套接字将报告一个EHOSTUNREACH错误。

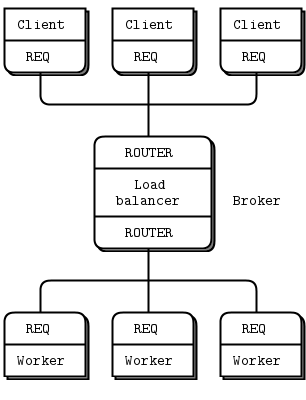

负载均衡模式 #

现在让我们看一些代码。我们将了解如何将 ROUTER 套接字连接到 REQ 套接字,然后再连接到 DEALER 套接字。这两个例子遵循相同的逻辑,即负载均衡模式。这种模式是我们第一次接触使用 ROUTER 套接字进行有意识的路由,而不仅仅是充当应答通道。

负载均衡模式非常常见,我们将在本书中多次看到它。它解决了简单的轮询路由(如 PUSH 和 DEALER 提供)的主要问题,即如果任务花费的时间差异很大,轮询就会变得效率低下。

这就像邮局的例子。如果你每个柜台都有一个队列,并且有些人购买邮票(一个快速、简单的交易),而有些人开设新账户(一个非常慢的交易),那么你会发现购买邮票的人会不公平地被困在队列中。就像在邮局一样,如果你的消息架构不公平,人们就会感到恼火。

邮局的解决方案是创建一个单一队列,这样即使一两个柜台被缓慢的工作卡住,其他柜台仍将继续以先到先得的方式为客户服务。

PUSH 和 DEALER 使用这种简单方法的其中一个原因是纯粹的性能。如果你抵达美国任何一个主要机场,你会看到排队等待过海关的长队。边境巡逻官员会提前将人们分配到每个柜台前排队,而不是使用单一队列。让人们提前走五十码可以为每位乘客节省一两分钟。而且由于每个护照检查所需的时间大致相同,所以或多或少是公平的。这就是 PUSH 和 DEALER 的策略:提前发送工作负载,以减少传输距离。

这是 ZeroMQ 反复出现的主题:世界上的问题是多样的,你可以通过以正确的方式解决不同的问题来受益。机场不是邮局,一刀切的方案对谁都不适用,真的。

让我们回到工作者(DEALER 或 REQ)连接到代理(ROUTER)的场景。代理必须知道工作者何时准备就绪,并维护一个工作者列表,以便每次都可以选择最近最少使用的工作者。

实际上,解决方案非常简单:工作者在启动时以及完成每个任务后发送一个“ready”消息。代理逐一读取这些消息。每次读取消息时,都是来自上一次使用的工作者。由于我们使用的是 ROUTER 套接字,我们得到了一个身份,然后可以使用这个身份将任务发送回工作者。

这可以看作是对请求-应答的一种变体,因为任务是随应答一起发送的,而任务的任何响应则作为一个新的请求发送。下面的代码示例应该会使其更清晰。

ROUTER 代理和 REQ 工作者 #

以下是一个使用 ROUTER 代理与一组 REQ 工作者通信的负载均衡模式示例:

rtreq: Ada 中的 ROUTER 到 REQ

rtreq: Basic 中的 ROUTER 到 REQ

rtreq: C 中的 ROUTER 到 REQ

// 2015-01-16T09:56+08:00

// ROUTER-to-REQ example

#include "zhelpers.h"

#include <pthread.h>

#define NBR_WORKERS 10

static void *

worker_task(void *args)

{

void *context = zmq_ctx_new();

void *worker = zmq_socket(context, ZMQ_REQ);

#if (defined (WIN32))

s_set_id(worker, (intptr_t)args);

#else

s_set_id(worker); // Set a printable identity.

#endif

zmq_connect(worker, "tcp://:5671");

int total = 0;

while (1) {

// Tell the broker we're ready for work

s_send(worker, "Hi Boss");

// Get workload from broker, until finished

char *workload = s_recv(worker);

int finished = (strcmp(workload, "Fired!") == 0);

free(workload);

if (finished) {

printf("Completed: %d tasks\n", total);

break;

}

total++;

// Do some random work

s_sleep(randof(500) + 1);

}

zmq_close(worker);

zmq_ctx_destroy(context);

return NULL;

}

// .split main task

// While this example runs in a single process, that is only to make

// it easier to start and stop the example. Each thread has its own

// context and conceptually acts as a separate process.

int main(void)

{

void *context = zmq_ctx_new();

void *broker = zmq_socket(context, ZMQ_ROUTER);

zmq_bind(broker, "tcp://*:5671");

srandom((unsigned)time(NULL));

int worker_nbr;

for (worker_nbr = 0; worker_nbr < NBR_WORKERS; worker_nbr++) {

pthread_t worker;

pthread_create(&worker, NULL, worker_task, (void *)(intptr_t)worker_nbr);

}

// Run for five seconds and then tell workers to end

int64_t end_time = s_clock() + 5000;

int workers_fired = 0;

while (1) {

// Next message gives us least recently used worker

char *identity = s_recv(broker);

s_sendmore(broker, identity);

free(identity);

free(s_recv(broker)); // Envelope delimiter

free(s_recv(broker)); // Response from worker

s_sendmore(broker, "");

// Encourage workers until it's time to fire them

if (s_clock() < end_time)

s_send(broker, "Work harder");

else {

s_send(broker, "Fired!");

if (++workers_fired == NBR_WORKERS)

break;

}

}

zmq_close(broker);

zmq_ctx_destroy(context);

return 0;

}rtreq: C++ 中的 ROUTER 到 REQ

//

// Custom routing Router to Mama (ROUTER to REQ)

//

#include "zhelpers.hpp"

#include <thread>

#include <vector>

static void *

worker_thread(void *arg) {

zmq::context_t context(1);

zmq::socket_t worker(context, ZMQ_REQ);

// We use a string identity for ease here

#if (defined (WIN32))

s_set_id(worker, (intptr_t)arg);

worker.connect("tcp://:5671"); // "ipc" doesn't yet work on windows.

#else

s_set_id(worker);

worker.connect("ipc://routing.ipc");

#endif

int total = 0;

while (1) {

// Tell the broker we're ready for work

s_send(worker, std::string("Hi Boss"));

// Get workload from broker, until finished

std::string workload = s_recv(worker);

if ("Fired!" == workload) {

std::cout << "Processed: " << total << " tasks" << std::endl;

break;

}

total++;

// Do some random work

s_sleep(within(500) + 1);

}

return NULL;

}

int main() {

zmq::context_t context(1);

zmq::socket_t broker(context, ZMQ_ROUTER);

#if (defined(WIN32))

broker.bind("tcp://*:5671"); // "ipc" doesn't yet work on windows.

#else

broker.bind("ipc://routing.ipc");

#endif

const int NBR_WORKERS = 10;

std::vector<std::thread> workers;

for (int worker_nbr = 0; worker_nbr < NBR_WORKERS; worker_nbr++) {

workers.push_back(std::move(std::thread(worker_thread, (void *)(intptr_t)worker_nbr)));

}

// Run for five seconds and then tell workers to end

int64_t end_time = s_clock() + 5000;

int workers_fired = 0;

while (1) {

// Next message gives us least recently used worker

std::string identity = s_recv(broker);

s_recv(broker); // Envelope delimiter

s_recv(broker); // Response from worker

s_sendmore(broker, identity);

s_sendmore(broker, std::string(""));

// Encourage workers until it's time to fire them

if (s_clock() < end_time)

s_send(broker, std::string("Work harder"));

else {

s_send(broker, std::string("Fired!"));

if (++workers_fired == NBR_WORKERS)

break;

}

}

for (int worker_nbr = 0; worker_nbr < NBR_WORKERS; worker_nbr++) {

workers[worker_nbr].join();

}

return 0;

}

rtreq: C# 中的 ROUTER 到 REQ

rtreq: CL 中的 ROUTER 到 REQ

;;; -*- Mode:Lisp; Syntax:ANSI-Common-Lisp; -*-

;;;

;;; Custom routing Router to Mama (ROUTER to REQ) in Common Lisp

;;;

;;; Kamil Shakirov <kamils80@gmail.com>

;;;

(defpackage #:zguide.rtmama

(:nicknames #:rtmama)

(:use #:cl #:zhelpers)

(:export #:main))

(in-package :zguide.rtmama)

(defparameter *number-workers* 10)

(defun worker-thread (context)

(zmq:with-socket (worker context zmq:req)

;; We use a string identity for ease here

(set-socket-id worker)

(zmq:connect worker "ipc://routing.ipc")

(let ((total 0))

(loop

;; Tell the router we're ready for work

(send-text worker "ready")

;; Get workload from router, until finished

(let ((workload (recv-text worker)))

(when (string= workload "END")

(message "Processed: ~D tasks~%" total)

(return))

(incf total))

;; Do some random work

(isys:usleep (within 100000))))))

(defun main ()

(zmq:with-context (context 1)

(zmq:with-socket (client context zmq:router)

(zmq:bind client "ipc://routing.ipc")

(dotimes (i *number-workers*)

(bt:make-thread (lambda () (worker-thread context))

:name (format nil "worker-thread-~D" i)))

(loop :repeat (* 10 *number-workers*) :do

;; LRU worker is next waiting in queue

(let ((address (recv-text client)))

(recv-text client) ; empty

(recv-text client) ; ready

(send-more-text client address)

(send-more-text client "")

(send-text client "This is the workload")))

;; Now ask mamas to shut down and report their results

(loop :repeat *number-workers* :do

;; LRU worker is next waiting in queue

(let ((address (recv-text client)))

(recv-text client) ; empty

(recv-text client) ; ready

(send-more-text client address)

(send-more-text client "")

(send-text client "END")))

;; Give 0MQ/2.0.x time to flush output

(sleep 1)))

(cleanup))

rtreq: Delphi 中的 ROUTER 到 REQ

program rtreq;

//

// ROUTER-to-REQ example

// @author Varga Balazs <bb.varga@gmail.com>

//

{$APPTYPE CONSOLE}

uses

SysUtils

, Windows

, zmqapi

, zhelpers

;

const

NBR_WORKERS = 10;

procedure worker_task( args: Pointer );

var

context: TZMQContext;

worker: TZMQSocket;

total: Integer;

workload: Utf8String;

begin

context := TZMQContext.create;

worker := context.Socket( stReq );

s_set_id( worker ); // Set a printable identity

worker.connect( 'tcp://:5671' );

total := 0;

while true do

begin

// Tell the broker we're ready for work

worker.send( 'Hi Boss' );

// Get workload from broker, until finished

worker.recv( workload );

if workload = 'Fired!' then

begin

zNote( Format( 'Completed: %d tasks', [total] ) );

break;

end;

Inc( total );

// Do some random work

sleep( random( 500 ) + 1 );

end;

worker.Free;

context.Free;

end;

// While this example runs in a single process, that is just to make

// it easier to start and stop the example. Each thread has its own

// context and conceptually acts as a separate process.

var

context: TZMQContext;

broker: TZMQSocket;

i,

workers_fired: Integer;

tid: Cardinal;

identity,

s: Utf8String;

fFrequency,

fstart,

fStop,

dt: Int64;

begin

context := TZMQContext.create;

broker := context.Socket( stRouter );

broker.bind( 'tcp://*:5671' );

Randomize;

for i := 0 to NBR_WORKERS - 1 do

BeginThread( nil, 0, @worker_task, nil, 0, tid );

// Start our clock now

QueryPerformanceFrequency( fFrequency );

QueryPerformanceCounter( fStart );

// Run for five seconds and then tell workers to end

workers_fired := 0;

while true do

begin

// Next message gives us least recently used worker

broker.recv( identity );

broker.send( identity, [sfSndMore] );

broker.recv( s ); // Envelope delimiter

broker.recv( s ); // Response from worker

broker.send( '', [sfSndMore] );

QueryPerformanceCounter( fStop );

dt := ( MSecsPerSec * ( fStop - fStart ) ) div fFrequency;

if dt < 5000 then

broker.send( 'Work harder' )

else begin

broker.send( 'Fired!' );

Inc( workers_fired );

if workers_fired = NBR_WORKERS then

break;

end;

end;

broker.Free;

context.Free;

end.

rtreq: Erlang 中的 ROUTER 到 REQ

#! /usr/bin/env escript

%%

%% Custom routing Router to Mama (ROUTER to REQ)

%%

%% While this example runs in a single process, that is just to make

%% it easier to start and stop the example. Each thread has its own

%% context and conceptually acts as a separate process.

%%

-define(NBR_WORKERS, 10).

worker_task() ->

random:seed(now()),

{ok, Context} = erlzmq:context(),

{ok, Worker} = erlzmq:socket(Context, req),

%% We use a string identity for ease here

ok = erlzmq:setsockopt(Worker, identity, pid_to_list(self())),

ok = erlzmq:connect(Worker, "ipc://routing.ipc"),

Total = handle_tasks(Worker, 0),

io:format("Processed ~b tasks~n", [Total]),

erlzmq:close(Worker),

erlzmq:term(Context).

handle_tasks(Worker, TaskCount) ->

%% Tell the router we're ready for work

ok = erlzmq:send(Worker, <<"ready">>),

%% Get workload from router, until finished

case erlzmq:recv(Worker) of

{ok, <<"END">>} -> TaskCount;

{ok, _} ->

%% Do some random work

timer:sleep(random:uniform(1000) + 1),

handle_tasks(Worker, TaskCount + 1)

end.

main(_) ->

{ok, Context} = erlzmq:context(),

{ok, Client} = erlzmq:socket(Context, router),

ok = erlzmq:bind(Client, "ipc://routing.ipc"),

start_workers(?NBR_WORKERS),

route_work(Client, ?NBR_WORKERS * 10),

stop_workers(Client, ?NBR_WORKERS),

ok = erlzmq:close(Client),

ok = erlzmq:term(Context).

start_workers(0) -> ok;

start_workers(N) when N > 0 ->

spawn(fun() -> worker_task() end),

start_workers(N - 1).

route_work(_Client, 0) -> ok;

route_work(Client, N) when N > 0 ->

%% LRU worker is next waiting in queue

{ok, Address} = erlzmq:recv(Client),

{ok, <<>>} = erlzmq:recv(Client),

{ok, <<"ready">>} = erlzmq:recv(Client),

ok = erlzmq:send(Client, Address, [sndmore]),

ok = erlzmq:send(Client, <<>>, [sndmore]),

ok = erlzmq:send(Client, <<"This is the workload">>),

route_work(Client, N - 1).

stop_workers(_Client, 0) -> ok;

stop_workers(Client, N) ->

%% Ask mama to shut down and report their results

{ok, Address} = erlzmq:recv(Client),

{ok, <<>>} = erlzmq:recv(Client),

{ok, _Ready} = erlzmq:recv(Client),

ok = erlzmq:send(Client, Address, [sndmore]),

ok = erlzmq:send(Client, <<>>, [sndmore]),

ok = erlzmq:send(Client, <<"END">>),

stop_workers(Client, N - 1).

rtreq: Elixir 中的 ROUTER 到 REQ

defmodule Rtreq do

@moduledoc """

Generated by erl2ex (http://github.com/dazuma/erl2ex)

From Erlang source: (Unknown source file)

At: 2019-12-20 13:57:33

"""

defmacrop erlconst_NBR_WORKERS() do

quote do

10

end

end

def worker_task() do

:random.seed(:erlang.now())

{:ok, context} = :erlzmq.context()

{:ok, worker} = :erlzmq.socket(context, :req)

:ok = :erlzmq.setsockopt(worker, :identity, :erlang.pid_to_list(self()))

:ok = :erlzmq.connect(worker, 'ipc://routing.ipc')

total = handle_tasks(worker, 0)

:io.format('Processed ~b tasks~n', [total])

:erlzmq.close(worker)

:erlzmq.term(context)

end

def handle_tasks(worker, taskCount) do

:ok = :erlzmq.send(worker, "ready")

case(:erlzmq.recv(worker)) do

{:ok, "END"} ->

taskCount

{:ok, _} ->

:timer.sleep(:random.uniform(1000) + 1)

handle_tasks(worker, taskCount + 1)

end

end

def main() do

{:ok, context} = :erlzmq.context()

{:ok, client} = :erlzmq.socket(context, :router)

:ok = :erlzmq.bind(client, 'ipc://routing.ipc')

start_workers(erlconst_NBR_WORKERS())

route_work(client, erlconst_NBR_WORKERS() * 10)

stop_workers(client, erlconst_NBR_WORKERS())

:ok = :erlzmq.close(client)

:ok = :erlzmq.term(context)

end

def start_workers(0) do

:ok

end

def start_workers(n) when n > 0 do

:erlang.spawn(fn -> worker_task() end)

start_workers(n - 1)

end

def route_work(_client, 0) do

:ok

end

def route_work(client, n) when n > 0 do

{:ok, address} = :erlzmq.recv(client)

{:ok, <<>>} = :erlzmq.recv(client)

{:ok, "ready"} = :erlzmq.recv(client)

:ok = :erlzmq.send(client, address, [:sndmore])

:ok = :erlzmq.send(client, <<>>, [:sndmore])

:ok = :erlzmq.send(client, "This is the workload")

route_work(client, n - 1)

end

def stop_workers(_client, 0) do

:ok

end

def stop_workers(client, n) do

{:ok, address} = :erlzmq.recv(client)

{:ok, <<>>} = :erlzmq.recv(client)

{:ok, _ready} = :erlzmq.recv(client)

:ok = :erlzmq.send(client, address, [:sndmore])

:ok = :erlzmq.send(client, <<>>, [:sndmore])

:ok = :erlzmq.send(client, "END")

stop_workers(client, n - 1)

end

end

Rtreq.main

rtreq: F# 中的 ROUTER 到 REQ

rtreq: Felix 中的 ROUTER 到 REQ

rtreq: Go 中的 ROUTER 到 REQ

//

// ROUTER-to-REQ example

//

package main

import (

"fmt"

zmq "github.com/alecthomas/gozmq"

"math/rand"

"strings"

"time"

)

const NBR_WORKERS = 10

func randomString() string {

source := "abcdefghijklmnopqrstuvwxyz"

target := make([]string, 20)

for i := 0; i < 20; i++ {

target[i] = string(source[rand.Intn(len(source))])

}

return strings.Join(target, "")

}

func workerTask() {

context, _ := zmq.NewContext()

defer context.Close()

worker, _ := context.NewSocket(zmq.REQ)

worker.SetIdentity(randomString())

worker.Connect("tcp://:5671")

defer worker.Close()

total := 0

for {

err := worker.Send([]byte("Hi Boss"), 0)

if err != nil {

print(err)

}

workload, _ := worker.Recv(0)

if string(workload) == "Fired!" {

id, _ := worker.Identity()

fmt.Printf("Completed: %d tasks (%s)\n", total, id)

break

}

total += 1

msec := rand.Intn(1000)

time.Sleep(time.Duration(msec) * time.Millisecond)

}

}

// While this example runs in a single process, that is just to make

// it easier to start and stop the example. Each goroutine has its own

// context and conceptually acts as a separate process.

func main() {

context, _ := zmq.NewContext()

defer context.Close()

broker, _ := context.NewSocket(zmq.ROUTER)

defer broker.Close()

broker.Bind("tcp://*:5671")

rand.Seed(time.Now().Unix())

for i := 0; i < NBR_WORKERS; i++ {

go workerTask()

}

end_time := time.Now().Unix() + 5

workers_fired := 0

for {

// Next message gives us least recently used worker

parts, err := broker.RecvMultipart(0)

if err != nil {

print(err)

}

identity := parts[0]

now := time.Now().Unix()

if now < end_time {

broker.SendMultipart([][]byte{identity, []byte(""), []byte("Work harder")}, 0)

} else {

broker.SendMultipart([][]byte{identity, []byte(""), []byte("Fired!")}, 0)

workers_fired++

if workers_fired == NBR_WORKERS {

break

}

}

}

}

rtreq: Haskell 中的 ROUTER 到 REQ

{-# LANGUAGE OverloadedStrings #-}

-- |

-- Router broker and REQ workers (p.92)

module Main where

import System.ZMQ4.Monadic

import Control.Concurrent (threadDelay, forkIO)

import Control.Concurrent.MVar (withMVar, newMVar, MVar)

import Data.ByteString.Char8 (unpack)

import Control.Monad (replicateM_, unless)

import ZHelpers (setRandomIdentity)

import Text.Printf

import Data.Time.Clock (diffUTCTime, getCurrentTime, UTCTime)

import System.Random

nbrWorkers :: Int

nbrWorkers = 10

-- In general, although locks are an antipattern in ZeroMQ, we need a lock

-- for the stdout handle, otherwise we will get jumbled text. We don't

-- use the lock for anything zeroMQ related, just output to screen.

workerThread :: MVar () -> IO ()

workerThread lock =

runZMQ $ do

worker <- socket Req

setRandomIdentity worker

connect worker "ipc://routing.ipc"

work worker

where

work = loop 0 where

loop val sock = do

send sock [] "ready"

workload <- receive sock

if unpack workload == "Fired!"

then liftIO $ withMVar lock $ \_ -> printf "Completed: %d tasks\n" (val::Int)

else do

rand <- liftIO $ getStdRandom (randomR (500::Int, 5000))

liftIO $ threadDelay rand

loop (val+1) sock

main :: IO ()

main =

runZMQ $ do

client <- socket Router

bind client "ipc://routing.ipc"

-- We only need MVar for printing the output (so output doesn't become interleaved)

-- The alternative is to Make an ipc channel, but that distracts from the example

-- or to 'NoBuffering' 'stdin'

lock <- liftIO $ newMVar ()

liftIO $ replicateM_ nbrWorkers (forkIO $ workerThread lock)

start <- liftIO getCurrentTime

clientTask client start

-- You need to give some time to the workers so they can exit properly

liftIO $ threadDelay $ 1 * 1000 * 1000

where

clientTask :: Socket z Router -> UTCTime -> ZMQ z ()

clientTask = loop nbrWorkers where

loop c sock start = unless (c <= 0) $ do

-- Next message is the leaset recently used worker

ident <- receive sock

send sock [SendMore] ident

-- Envelope delimiter

receive sock

-- Ready signal from worker

receive sock

-- Send delimiter

send sock [SendMore] ""

-- Send Work unless time is up

now <- liftIO getCurrentTime

if c /= nbrWorkers || diffUTCTime now start > 5

then do

send sock [] "Fired!"

loop (c-1) sock start

else do

send sock [] "Work harder"

loop c sock startrtreq: Haxe 中的 ROUTER 到 REQ

package ;

import haxe.io.Bytes;

import neko.Lib;

import neko.Sys;

#if (neko || cpp)

import neko.vm.Thread;

#end

import org.zeromq.ZFrame;

import org.zeromq.ZMQ;

import org.zeromq.ZContext;

import org.zeromq.ZMQSocket;

import ZHelpers;

/**

* Custom routing Router to Mama (ROUTER to REQ)

*

* While this example runs in a single process (for cpp & neko), that is just

* to make it easier to start and stop the example. Each thread has its own

* context and conceptually acts as a separate process.

*

* See: https://zguide.zeromq.cn/page:all#Least-Recently-Used-Routing-LRU-Pattern

*/

class RTMama

{

private static inline var NBR_WORKERS = 10;

public static function workerTask() {

var context:ZContext = new ZContext();

var worker:ZMQSocket = context.createSocket(ZMQ_REQ);

// Use a random string identity for ease here

var id = ZHelpers.setID(worker);

worker.connect("ipc:///tmp/routing.ipc");

var total = 0;

while (true) {

// Tell the router we are ready

ZFrame.newStringFrame("ready").send(worker);

// Get workload from router, until finished

var workload:ZFrame = ZFrame.recvFrame(worker);

if (workload == null) break;

if (workload.streq("END")) {

Lib.println("Processed: " + total + " tasks");

break;

}

total++;

// Do some random work

Sys.sleep((ZHelpers.randof(1000) + 1) / 1000.0);

}

context.destroy();

}

public static function main() {

Lib.println("** RTMama (see: https://zguide.zeromq.cn/page:all#Least-Recently-Used-Routing-LRU-Pattern)");

// Implementation note: Had to move php forking before main thread ZMQ Context creation to

// get the main thread to receive messages from the child processes.

for (worker_nbr in 0 ... NBR_WORKERS) {

#if php

forkWorkerTask();

#else

Thread.create(workerTask);

#end

}

var context:ZContext = new ZContext();

var client:ZMQSocket = context.createSocket(ZMQ_ROUTER);

// Implementation note: Had to add the /tmp prefix to get this to work on Linux Ubuntu 10

client.bind("ipc:///tmp/routing.ipc");

Sys.sleep(1);

for (task_nbr in 0 ... NBR_WORKERS * 10) {

// LRU worker is next waiting in queue

var address:ZFrame = ZFrame.recvFrame(client);

var empty:ZFrame = ZFrame.recvFrame(client);

var ready:ZFrame = ZFrame.recvFrame(client);

address.send(client, ZFrame.ZFRAME_MORE);

ZFrame.newStringFrame("").send(client, ZFrame.ZFRAME_MORE);

ZFrame.newStringFrame("This is the workload").send(client);

}

// Now ask mamas to shut down and report their results

for (worker_nbr in 0 ... NBR_WORKERS) {

var address:ZFrame = ZFrame.recvFrame(client);

var empty:ZFrame = ZFrame.recvFrame(client);

var ready:ZFrame = ZFrame.recvFrame(client);

address.send(client, ZFrame.ZFRAME_MORE);

ZFrame.newStringFrame("").send(client, ZFrame.ZFRAME_MORE);

ZFrame.newStringFrame("END").send(client);

}

context.destroy();

}

#if php

private static inline function forkWorkerTask() {

untyped __php__('

$pid = pcntl_fork();

if ($pid == 0) {

RTMama::workerTask();

exit();

}');

return;

}

#end

}rtreq: Java 中的 ROUTER 到 REQ

package guide;

import java.util.Random;

import org.zeromq.SocketType;

import org.zeromq.ZMQ;

import org.zeromq.ZMQ.Socket;

import org.zeromq.ZContext;

/**

* ROUTER-TO-REQ example

*/

public class rtreq

{

private static Random rand = new Random();

private static final int NBR_WORKERS = 10;

private static class Worker extends Thread

{

@Override

public void run()

{

try (ZContext context = new ZContext()) {

Socket worker = context.createSocket(SocketType.REQ);

ZHelper.setId(worker); // Set a printable identity

worker.connect("tcp://:5671");

int total = 0;

while (true) {

// Tell the broker we're ready for work

worker.send("Hi Boss");

// Get workload from broker, until finished

String workload = worker.recvStr();

boolean finished = workload.equals("Fired!");

if (finished) {

System.out.printf("Completed: %d tasks\n", total);

break;

}

total++;

// Do some random work

try {

Thread.sleep(rand.nextInt(500) + 1);

}

catch (InterruptedException e) {

}

}

}

}

}

/**

* While this example runs in a single process, that is just to make

* it easier to start and stop the example. Each thread has its own

* context and conceptually acts as a separate process.

*/

public static void main(String[] args) throws Exception

{

try (ZContext context = new ZContext()) {

Socket broker = context.createSocket(SocketType.ROUTER);

broker.bind("tcp://*:5671");

for (int workerNbr = 0; workerNbr < NBR_WORKERS; workerNbr++) {

Thread worker = new Worker();

worker.start();

}

// Run for five seconds and then tell workers to end

long endTime = System.currentTimeMillis() + 5000;

int workersFired = 0;

while (true) {

// Next message gives us least recently used worker

String identity = broker.recvStr();

broker.sendMore(identity);

broker.recvStr(); // Envelope delimiter

broker.recvStr(); // Response from worker

broker.sendMore("");

// Encourage workers until it's time to fire them

if (System.currentTimeMillis() < endTime)

broker.send("Work harder");

else {

broker.send("Fired!");

if (++workersFired == NBR_WORKERS)

break;

}

}

}

}

}

rtreq: Julia 中的 ROUTER 到 REQ

rtreq: Lua 中的 ROUTER 到 REQ

--

-- Custom routing Router to Mama (ROUTER to REQ)

--

-- While this example runs in a single process, that is just to make

-- it easier to start and stop the example. Each thread has its own

-- context and conceptually acts as a separate process.

--

-- Author: Robert G. Jakabosky <bobby@sharedrealm.com>

--

require"zmq"

require"zmq.threads"

require"zhelpers"

NBR_WORKERS = 10

local pre_code = [[

local identity, seed = ...

local zmq = require"zmq"

require"zhelpers"

math.randomseed(seed)

]]

local worker_task = pre_code .. [[

local context = zmq.init(1)

local worker = context:socket(zmq.REQ)

-- We use a string identity for ease here

worker:setopt(zmq.IDENTITY, identity)

worker:connect("ipc://routing.ipc")

local total = 0

while true do

-- Tell the router we're ready for work

worker:send("ready")

-- Get workload from router, until finished

local workload = worker:recv()

local finished = (workload == "END")

if (finished) then

printf ("Processed: %d tasks\n", total)

break

end

total = total + 1

-- Do some random work

s_sleep (randof (1000) + 1)

end

worker:close()

context:term()

]]

s_version_assert (2, 1)

local context = zmq.init(1)

local client = context:socket(zmq.ROUTER)

client:bind("ipc://routing.ipc")

math.randomseed(os.time())

local workers = {}

for n=1,NBR_WORKERS do

local identity = string.format("%04X-%04X", randof (0x10000), randof (0x10000))

local seed = os.time() + math.random()

workers[n] = zmq.threads.runstring(context, worker_task, identity, seed)

workers[n]:start()

end

for n=1,(NBR_WORKERS * 10) do

-- LRU worker is next waiting in queue

local address = client:recv()

local empty = client:recv()

local ready = client:recv()

client:send(address, zmq.SNDMORE)

client:send("", zmq.SNDMORE)

client:send("This is the workload")

end

-- Now ask mamas to shut down and report their results

for n=1,NBR_WORKERS do

local address = client:recv()

local empty = client:recv()

local ready = client:recv()

client:send(address, zmq.SNDMORE)

client:send("", zmq.SNDMORE)

client:send("END")

end

for n=1,NBR_WORKERS do

assert(workers[n]:join())

end

client:close()

context:term()

rtreq: Node.js 中的 ROUTER 到 REQ

var zmq = require('zeromq');

var WORKERS_NUM = 10;

var router = zmq.socket('router');

var d = new Date();

var endTime = d.getTime() + 5000;

router.bindSync('tcp://*:9000');

router.on('message', function () {

// get the identity of current worker

var identity = Array.prototype.slice.call(arguments)[0];

var d = new Date();

var time = d.getTime();

if (time < endTime) {

router.send([identity, '', 'Work harder!'])

} else {

router.send([identity, '', 'Fired!']);

}

});

// To keep it simple we going to use

// workers in closures and tcp instead of

// node clusters and threads

for (var i = 0; i < WORKERS_NUM; i++) {

(function () {

var worker = zmq.socket('req');

worker.connect('tcp://127.0.0.1:9000');

var total = 0;

worker.on('message', function (msg) {

var message = msg.toString();

if (message === 'Fired!'){

console.log('Completed %d tasks', total);

worker.close();

}

total++;

setTimeout(function () {

worker.send('Hi boss!');

}, 1000)

});

worker.send('Hi boss!');

})();

}

rtreq: Objective-C 中的 ROUTER 到 REQ

rtreq: ooc 中的 ROUTER 到 REQ

rtreq: Perl 中的 ROUTER 到 REQ

# ROUTER-to-REQ in Perl

use strict;

use warnings;

use v5.10;

use threads;

use Time::HiRes qw(usleep);

use ZMQ::FFI;

use ZMQ::FFI::Constants qw(ZMQ_REQ ZMQ_ROUTER);

my $NBR_WORKERS = 10;

sub worker_task {

my $context = ZMQ::FFI->new();

my $worker = $context->socket(ZMQ_REQ);

$worker->set_identity(Time::HiRes::time());

$worker->connect('tcp://:5671');

my $total = 0;

WORKER_LOOP:

while (1) {

# Tell the broker we're ready for work

$worker->send('Hi Boss');

# Get workload from broker, until finished

my $workload = $worker->recv();

my $finished = $workload eq "Fired!";

if ($finished) {

say "Completed $total tasks";

last WORKER_LOOP;

}

$total++;

# Do some random work

usleep int(rand(500_000)) + 1;

}

}

# While this example runs in a single process, that is only to make

# it easier to start and stop the example. Each thread has its own

# context and conceptually acts as a separate process.

my $context = ZMQ::FFI->new();

my $broker = $context->socket(ZMQ_ROUTER);

$broker->bind('tcp://*:5671');

for my $worker_nbr (1..$NBR_WORKERS) {

threads->create('worker_task')->detach();

}

# Run for five seconds and then tell workers to end

my $end_time = time() + 5;

my $workers_fired = 0;

BROKER_LOOP:

while (1) {

# Next message gives us least recently used worker

my ($identity, $delimiter, $response) = $broker->recv_multipart();

# Encourage workers until it's time to fire them

if ( time() < $end_time ) {

$broker->send_multipart([$identity, '', 'Work harder']);

}

else {

$broker->send_multipart([$identity, '', 'Fired!']);

if ( ++$workers_fired == $NBR_WORKERS) {

last BROKER_LOOP;

}

}

}

rtreq: PHP 中的 ROUTER 到 REQ

<?php

/*

* Custom routing Router to Mama (ROUTER to REQ)

* @author Ian Barber <ian(dot)barber(at)gmail(dot)com>a

*/

define("NBR_WORKERS", 10);

function worker_thread()

{

$context = new ZMQContext();

$worker = new ZMQSocket($context, ZMQ::SOCKET_REQ);

$worker->connect("ipc://routing.ipc");

$total = 0;

while (true) {

// Tell the router we're ready for work

$worker->send("ready");

// Get workload from router, until finished

$workload = $worker->recv();

if ($workload == 'END') {

printf ("Processed: %d tasks%s", $total, PHP_EOL);

break;

}

$total++;

// Do some random work

usleep(mt_rand(1, 1000000));

}

}

for ($worker_nbr = 0; $worker_nbr < NBR_WORKERS; $worker_nbr++) {

if (pcntl_fork() == 0) {

worker_thread();

exit();

}

}

$context = new ZMQContext();

$client = $context->getSocket(ZMQ::SOCKET_ROUTER);

$client->bind("ipc://routing.ipc");

for ($task_nbr = 0; $task_nbr < NBR_WORKERS * 10; $task_nbr++) {

// LRU worker is next waiting in queue

$address = $client->recv();

$empty = $client->recv();

$read = $client->recv();

$client->send($address, ZMQ::MODE_SNDMORE);

$client->send("", ZMQ::MODE_SNDMORE);

$client->send("This is the workload");

}

// Now ask mamas to shut down and report their results

for ($task_nbr = 0; $task_nbr < NBR_WORKERS; $task_nbr++) {

// LRU worker is next waiting in queue

$address = $client->recv();

$empty = $client->recv();

$read = $client->recv();

$client->send($address, ZMQ::MODE_SNDMORE);

$client->send("", ZMQ::MODE_SNDMORE);

$client->send("END");

}

sleep (1); // Give 0MQ/2.0.x time to flush output

rtreq: Python 中的 ROUTER 到 REQ

# encoding: utf-8

#

# Custom routing Router to Mama (ROUTER to REQ)

#

# Author: Jeremy Avnet (brainsik) <spork(dash)zmq(at)theory(dot)org>

#

import time

import random

from threading import Thread

import zmq

import zhelpers

NBR_WORKERS = 10

def worker_thread(context=None):

context = context or zmq.Context.instance()

worker = context.socket(zmq.REQ)

# We use a string identity for ease here

zhelpers.set_id(worker)

worker.connect("tcp://:5671")

total = 0

while True:

# Tell the router we're ready for work

worker.send(b"ready")

# Get workload from router, until finished

workload = worker.recv()

finished = workload == b"END"

if finished:

print("Processed: %d tasks" % total)

break

total += 1

# Do some random work

time.sleep(0.1 * random.random())

context = zmq.Context.instance()

client = context.socket(zmq.ROUTER)

client.bind("tcp://*:5671")

for _ in range(NBR_WORKERS):

Thread(target=worker_thread).start()

for _ in range(NBR_WORKERS * 10):

# LRU worker is next waiting in the queue

address, empty, ready = client.recv_multipart()

client.send_multipart([

address,

b'',

b'This is the workload',

])

# Now ask mama to shut down and report their results

for _ in range(NBR_WORKERS):

address, empty, ready = client.recv_multipart()

client.send_multipart([

address,

b'',

b'END',

])

rtreq: Q 中的 ROUTER 到 REQ

rtreq: Racket 中的 ROUTER 到 REQ

rtreq: Ruby 中的 ROUTER 到 REQ

#!/usr/bin/env ruby

# Custom routing Router to Mama (ROUTER to REQ)

# Ruby version, based on the C version.

#

# While this example runs in a single process, that is just to make

# it easier to start and stop the example. Each thread has its own

# context and conceptually acts as a separate process.

#

# libzmq: 2.1.10

# ruby: 1.9.2p180 (2011-02-18 revision 30909) [i686-linux]

# ffi-rzmq: 0.9.0

#

# @author Pavel Mitin

# @email mitin.pavel@gmail.com

require 'rubygems'

require 'ffi-rzmq'

WORKER_NUMBER = 10

def receive_string(socket)

result = ''

socket.recv_string result

result

end

def worker_task

context = ZMQ::Context.new 1

worker = context.socket ZMQ::REQ

# We use a string identity for ease here

worker.setsockopt ZMQ::IDENTITY, sprintf("%04X-%04X", rand(10000), rand(10000))

worker.connect 'ipc://routing.ipc'

total = 0

loop do

# Tell the router we're ready for work

worker.send_string 'ready'

# Get workload from router, until finished

workload = receive_string worker

p "Processed: #{total} tasks" and break if workload == 'END'

total += 1

# Do some random work

sleep((rand(10) + 1) / 10.0)

end

end

context = ZMQ::Context.new 1

client = context.socket ZMQ::ROUTER

client.bind 'ipc://routing.ipc'

workers = (1..WORKER_NUMBER).map do

Thread.new { worker_task }

end

(WORKER_NUMBER * 10).times do

# LRU worker is next waitin in queue

address = receive_string client

empty = receive_string client

ready = receive_string client

client.send_string address, ZMQ::SNDMORE

client.send_string '', ZMQ::SNDMORE

client.send_string 'This is the workload'

end

# Now ask mamas to shut down and report their results

WORKER_NUMBER.times do

address = receive_string client

empty = receive_string client

ready = receive_string client

client.send_string address, ZMQ::SNDMORE

client.send_string '', ZMQ::SNDMORE

client.send_string 'END'

end

workers.each &:join

rtreq: Rust 中的 ROUTER 到 REQ

rtreq: Scala 中的 ROUTER 到 REQ

/*

* Custom routing Router to Mama (ROUTER to REQ)

*

* While this example runs in a single process, that is just to make

* it easier to start and stop the example. Each thread has its own

* context and conceptually acts as a separate process.

*

*

* @author Giovanni Ruggiero

* @email giovanni.ruggiero@gmail.com

*/

import org.zeromq.ZMQ

import ZHelpers._

object rtmama {

class WorkerTask() extends Runnable {

def run() {

val rand = new java.util.Random(System.currentTimeMillis)

val ctx = ZMQ.context(1)

val worker = ctx.socket(ZMQ.REQ)

// We use a string identity for ease here

setID(worker)

// println(new String(worker.getIdentity))

worker.connect("tcp://:5555")

var total = 0

var workload = ""

do {

// Tell the router we're ready for work

worker.send("Ready".getBytes,0)

workload = new String(worker.recv(0))

Thread.sleep (rand.nextInt(1) * 1000)

total += 1

// Get workload from router, until finished

} while (!workload.equalsIgnoreCase("END"))

printf("Processed: %d tasks\n", total)

}

}

def main(args : Array[String]) {

val NBR_WORKERS = 10

val ctx = ZMQ.context(1)

val client = ctx.socket(ZMQ.ROUTER)

// Workaround to ckeck version >= 2.1

assert(client.getType > -1)

client.bind("tcp://*:5555")

val workers = List.fill(NBR_WORKERS)(new Thread(new WorkerTask))

workers foreach (_.start)

for (i <- 1 to NBR_WORKERS * 10) {

// LRU worker is next waiting in queue

val address = client.recv(0)

val empty = client.recv(0)

val ready = client.recv(0)

client.send(address, ZMQ.SNDMORE)

client.send("".getBytes, ZMQ.SNDMORE)

client.send("This is the workload".getBytes,0)

}

// Now ask mamas to shut down and report their results

for (i <- 1 to NBR_WORKERS) {

val address = client.recv(0)

val empty = client.recv(0)

val ready = client.recv(0)

client.send(address, ZMQ.SNDMORE)

client.send("".getBytes, ZMQ.SNDMORE)

client.send("END".getBytes,0)

}

}

}

rtreq: Tcl 中的 ROUTER 到 REQ

#

# Custom routing Router to Mama (ROUTER to REQ)

#

package require zmq

if {[llength $argv] == 0} {

set argv [list driver 3]

} elseif {[llength $argv] != 2} {

puts "Usage: rtmama.tcl <driver|main|worker> <number_of_workers>"

exit 1

}

lassign $argv what NBR_WORKERS

set tclsh [info nameofexecutable]

set nbr_of_workers [lindex $argv 0]

expr {srand([pid])}

switch -exact -- $what {

worker {

zmq context context

zmq socket worker context REQ

# We use a string identity for ease here

set id [format "%04X-%04X" [expr {int(rand()*0x10000)}] [expr {int(rand()*0x10000)}]]

worker setsockopt IDENTITY $id

worker connect "ipc://routing.ipc"

set total 0

while {1} {

# Tell the router we're ready for work

worker send "ready"

# Get workload from router, until finished

set workload [worker recv]

if {$workload eq "END"} {

puts "Processed: $total tasks"

break

}

incr total

# Do some random work

after [expr {int(rand()*1000)}]

}

worker close

context term

}

main {

zmq context context

zmq socket client context ROUTER

client bind "ipc://routing.ipc"

for {set task_nbr 0} {$task_nbr < $NBR_WORKERS * 10} {incr task_nbr} {

# LRU worker is next waiting in queue

set address [client recv]

set empty [client recv]

set ready [client recv]

client sendmore $address

client sendmore ""

client send "This is the workload"

}

# Now ask mamas to shut down and report their results

for {set worker_nbr 0} {$worker_nbr < $NBR_WORKERS} {incr worker_nbr} {

set address [client recv]

set empty [client recv]

set ready [client recv]

client sendmore $address

client sendmore ""

client send "END"

}

client close

context term

}

driver {

puts "Start main, output redirected to main.log"

exec $tclsh rtmama.tcl main $NBR_WORKERS > main.log 2>@1 &

after 1000

for {set i 0} {$i < $NBR_WORKERS} {incr i} {

puts "Start worker $i, output redirected to worker$i.log"

exec $tclsh rtmama.tcl worker $NBR_WORKERS > worker$i.log 2>@1 &

}

}

}

rtreq: OCaml 中的 ROUTER 到 REQ

示例运行五秒钟,然后每个工作者打印他们处理了多少任务。如果路由工作正常,我们期望任务会被公平地分配。

Completed: 20 tasks

Completed: 18 tasks

Completed: 21 tasks

Completed: 23 tasks

Completed: 19 tasks

Completed: 21 tasks

Completed: 17 tasks

Completed: 17 tasks

Completed: 25 tasks

Completed: 19 tasks

在这个示例中与工作者对话,我们必须创建一个对 REQ 友好的信封,它由一个身份和一个空的信封分隔帧组成。

ROUTER 代理和 DEALER 工作者 #

任何可以使用 REQ 的地方,你都可以使用 DEALER。有两处具体的区别

- REQ 套接字在发送任何数据帧之前总是发送一个空的定界帧;DEALER 则不会。

- REQ 套接字在收到回复之前只会发送一条消息;DEALER 则是完全异步的。

同步与异步行为对我们的示例没有影响,因为我们执行的是严格的请求-回复模式。当我们在第 4 章 - 可靠请求-回复模式中讨论从故障中恢复时,它会更具相关性。

现在让我们看看完全相同的示例,但将 REQ 套接字替换为 DEALER 套接字

rtdealer:使用 Ada 的 ROUTER 到 DEALER 示例

rtdealer:使用 Basic 的 ROUTER 到 DEALER 示例

rtdealer:使用 C 的 ROUTER 到 DEALER 示例

// 2015-02-27T11:40+08:00

// ROUTER-to-DEALER example

#include "zhelpers.h"

#include <pthread.h>

#define NBR_WORKERS 10

static void *

worker_task(void *args)

{

void *context = zmq_ctx_new();

void *worker = zmq_socket(context, ZMQ_DEALER);

#if (defined (WIN32))

s_set_id(worker, (intptr_t)args);

#else

s_set_id(worker); // Set a printable identity

#endif

zmq_connect (worker, "tcp://:5671");

int total = 0;

while (1) {

// Tell the broker we're ready for work

s_sendmore(worker, "");

s_send(worker, "Hi Boss");

// Get workload from broker, until finished

free(s_recv(worker)); // Envelope delimiter

char *workload = s_recv(worker);

// .skip

int finished = (strcmp(workload, "Fired!") == 0);

free(workload);

if (finished) {

printf("Completed: %d tasks\n", total);

break;

}

total++;

// Do some random work

s_sleep(randof(500) + 1);

}

zmq_close(worker);

zmq_ctx_destroy(context);

return NULL;

}

// .split main task

// While this example runs in a single process, that is just to make

// it easier to start and stop the example. Each thread has its own

// context and conceptually acts as a separate process.

int main(void)

{

void *context = zmq_ctx_new();

void *broker = zmq_socket(context, ZMQ_ROUTER);

zmq_bind(broker, "tcp://*:5671");

srandom((unsigned)time(NULL));

int worker_nbr;

for (worker_nbr = 0; worker_nbr < NBR_WORKERS; worker_nbr++) {

pthread_t worker;

pthread_create(&worker, NULL, worker_task, (void *)(intptr_t)worker_nbr);

}

// Run for five seconds and then tell workers to end

int64_t end_time = s_clock() + 5000;

int workers_fired = 0;

while (1) {

// Next message gives us least recently used worker

char *identity = s_recv(broker);

s_sendmore(broker, identity);

free(identity);

free(s_recv(broker)); // Envelope delimiter

free(s_recv(broker)); // Response from worker

s_sendmore(broker, "");

// Encourage workers until it's time to fire them

if (s_clock() < end_time)

s_send(broker, "Work harder");

else {

s_send(broker, "Fired!");

if (++workers_fired == NBR_WORKERS)

break;

}

}

zmq_close(broker);

zmq_ctx_destroy(context);

return 0;

}

// .until

rtdealer:使用 C++ 的 ROUTER 到 DEALER 示例

//

// Custom routing Router to Dealer

//

#include "zhelpers.hpp"

#include <thread>

#include <vector>

static void *

worker_task(void *args)

{

zmq::context_t context(1);

zmq::socket_t worker(context, ZMQ_DEALER);

#if (defined (WIN32))

s_set_id(worker, (intptr_t)args);

#else

s_set_id(worker); // Set a printable identity

#endif

worker.connect("tcp://:5671");

int total = 0;

while (1) {

// Tell the broker we're ready for work

s_sendmore(worker, std::string(""));

s_send(worker, std::string("Hi Boss"));

// Get workload from broker, until finished

s_recv(worker); // Envelope delimiter

std::string workload = s_recv(worker);

// .skip

if ("Fired!" == workload) {

std::cout << "Completed: " << total << " tasks" << std::endl;

break;

}

total++;

// Do some random work

s_sleep(within(500) + 1);

}

return NULL;

}

// .split main task

// While this example runs in a single process, that is just to make

// it easier to start and stop the example. Each thread has its own

// context and conceptually acts as a separate process.

int main() {

zmq::context_t context(1);

zmq::socket_t broker(context, ZMQ_ROUTER);

broker.bind("tcp://*:5671");

srandom((unsigned)time(NULL));

const int NBR_WORKERS = 10;

std::vector<std::thread> workers;

for (int worker_nbr = 0; worker_nbr < NBR_WORKERS; worker_nbr++) {

workers.push_back(std::move(std::thread(worker_task, (void *)(intptr_t)worker_nbr)));

}

// Run for five seconds and then tell workers to end

int64_t end_time = s_clock() + 5000;

int workers_fired = 0;

while (1) {

// Next message gives us least recently used worker

std::string identity = s_recv(broker);

{

s_recv(broker); // Envelope delimiter

s_recv(broker); // Response from worker

}

s_sendmore(broker, identity);

s_sendmore(broker, std::string(""));

// Encourage workers until it's time to fire them

if (s_clock() < end_time)

s_send(broker, std::string("Work harder"));

else {

s_send(broker, std::string("Fired!"));

if (++workers_fired == NBR_WORKERS)

break;

}

}

for (int worker_nbr = 0; worker_nbr < NBR_WORKERS; worker_nbr++) {

workers[worker_nbr].join();

}

return 0;

}

rtdealer:使用 C# 的 ROUTER 到 DEALER 示例

rtdealer:使用 CL 的 ROUTER 到 DEALER 示例

;;; -*- Mode:Lisp; Syntax:ANSI-Common-Lisp; -*-

;;;

;;; Custom routing Router to Dealer in Common Lisp

;;;

;;; Kamil Shakirov <kamils80@gmail.com>

;;;

;;; We have two workers, here we copy the code, normally these would run on

;;; different boxes...

(defpackage #:zguide.rtdealer

(:nicknames #:rtdealer)

(:use #:cl #:zhelpers)

(:export #:main))

(in-package :zguide.rtdealer)

(defun worker-a (context)

(zmq:with-socket (worker context zmq:dealer)

(zmq:setsockopt worker zmq:identity "A")

(zmq:connect worker "ipc://routing.ipc")

(let ((total 0))

(loop

;; We receive one part, with the workload

(let ((request (recv-text worker)))

(when (string= request "END")

(message "A received: ~D~%" total)

(return))

(incf total))))))

(defun worker-b (context)

(zmq:with-socket (worker context zmq:dealer)

(zmq:setsockopt worker zmq:identity "B")

(zmq:connect worker "ipc://routing.ipc")

(let ((total 0))

(loop