第四章 - 可靠的请求-回复模式 #

第三章 - 高级请求-回复模式 涵盖了 ZeroMQ 请求-回复模式的高级用法,并提供了可运行的示例。本章着眼于可靠性这个普遍问题,并在 ZeroMQ 核心请求-回复模式的基础上构建了一系列可靠的消息模式。

在本章中,我们重点关注用户空间的请求-回复 模式,这些是可重用的模型,有助于你设计自己的 ZeroMQ 架构

- Lazy Pirate 模式:客户端的可靠请求-回复

- Simple Pirate 模式:使用负载均衡的可靠请求-回复

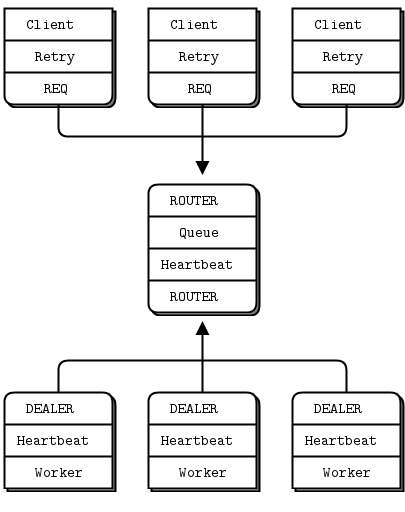

- Paranoid Pirate 模式:带心跳的可靠请求-回复

- Majordomo 模式:面向服务的可靠排队

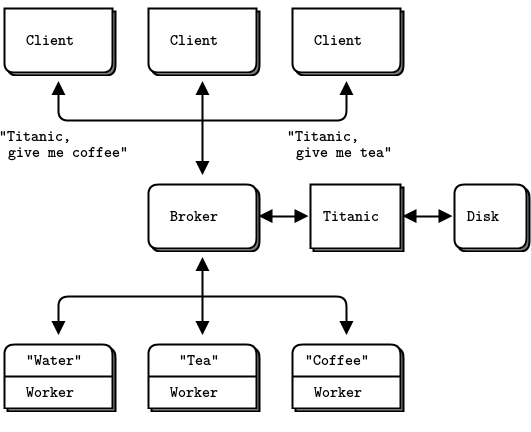

- Titanic 模式:基于磁盘/断开连接的可靠排队

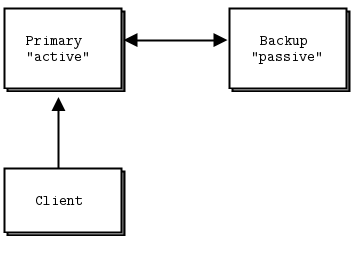

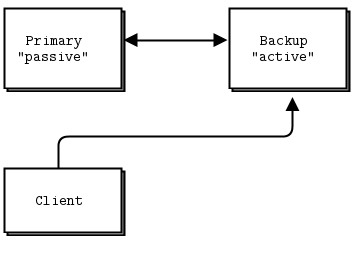

- Binary Star 模式:主备服务器故障转移

- Freelance 模式:无代理的可靠请求-回复

什么是“可靠性”? #

大多数谈论“可靠性”的人并不真正知道他们在说什么。我们只能根据失败来定义可靠性。也就是说,如果我们能够处理一组定义明确且易于理解的失败,那么相对于这些失败,我们就是可靠的。不多不少。所以让我们来看看分布式 ZeroMQ 应用中可能导致失败的原因,按概率大致降序排列:

-

应用程序代码是罪魁祸首。它可能崩溃退出,冻结并停止响应输入,运行太慢而无法处理输入,耗尽所有内存等等。

-

系统代码——例如我们使用 ZeroMQ 编写的代理——可能因与应用程序代码相同的原因而死亡。系统代码应该比应用程序代码更可靠,但它仍然可能崩溃和失败,特别是如果它试图为慢客户端排队消息时,可能会耗尽内存。

-

消息队列可能会溢出,这通常发生在学会残酷对待慢客户端的系统代码中。当队列溢出时,它会开始丢弃消息。因此,我们得到的是“丢失”的消息。

-

网络可能会发生故障(例如,WiFi 关闭或超出范围)。ZeroMQ 在这种情况下会自动重连,但在重连期间,消息可能会丢失。

-

硬件可能会发生故障,并导致该机器上运行的所有进程随之终止。

-

网络可能以奇特的方式发生故障,例如,交换机上的某些端口可能损坏,导致部分网络无法访问。

-

整个数据中心可能遭受雷击、地震、火灾,或更常见的电源或冷却故障。

要使一个软件系统能够完全可靠地应对所有这些可能的失败,这是一项极其困难和昂贵的工作,超出了本书的范围。

由于上述列表中的前五种情况涵盖了大型公司以外现实世界需求的 99.9%(根据我刚刚进行的一项高度科学的研究,该研究还告诉我 78% 的统计数据都是凭空捏造的,而且我们永远不应该相信未经我们自己证伪的统计数据),所以这就是我们将要探讨的内容。如果你是一家大型公司,有钱用于处理最后两种情况,请立即联系我的公司!我家海滨别墅后面有一个大洞,正等着改造成行政游泳池呢。

设计可靠性 #

因此,简单粗暴地说,可靠性就是“在代码冻结或崩溃时保持事物正常运行”,这种情况我们简称“死亡”。然而,我们希望保持正常运行的事物比简单的消息更复杂。我们需要研究每种核心 ZeroMQ 消息模式,看看即使代码“死亡”时,我们如何(如果可能的话)使其继续工作。

让我们逐一 살펴보

-

请求-回复:如果服务器“死亡”(正在处理请求时),客户端可以发现这一点,因为它收不到回复。然后它可以恼火地放弃,或者稍后等待并重试,或者寻找另一台服务器,等等。至于客户端“死亡”,我们现在可以将其视为“别人的问题”。

-

发布-订阅:如果客户端“死亡”(已经收到一些数据),服务器并不知道。发布-订阅不会从客户端向服务器发送任何信息。但客户端可以通过带外方式(例如,通过请求-回复)联系服务器,并询问“请重新发送我遗漏的所有内容”。至于服务器“死亡”,这超出了本文的范围。订阅者也可以自我验证它们是否运行得太慢,并在确实太慢时采取行动(例如,警告操作员并“死亡”)。

-

管道:如果一个 worker “死亡”(正在工作时),ventilator(鼓风机)并不知道。管道就像时间的齿轮一样,只在一个方向工作。但是下游的 collector(收集器)可以检测到某个任务没有完成,并向 ventilator 发送一条消息说:“嘿,重发任务 324!”如果 ventilator 或 collector 死亡,最初发送工作批次的任何上游客户端都可能因为等待太久而感到厌烦,然后重发全部任务。这并不优雅,但系统代码应该不会经常死亡到成为问题。

在本章中,我们将重点讨论请求-回复,这是可靠消息传递中容易实现的部分。

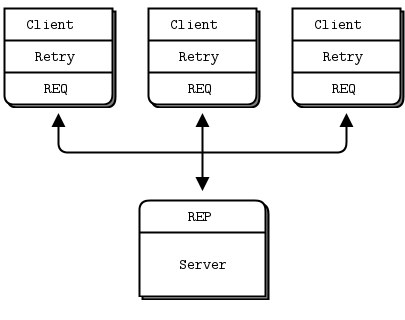

基本的请求-回复模式(一个 REQ 客户端套接字对 REP 服务器套接字进行阻塞发送/接收)在处理最常见的故障类型方面得分较低。如果服务器在处理请求时崩溃,客户端只会永远挂起。如果网络丢失了请求或回复,客户端也会永远挂起。

由于 ZeroMQ 能够静默重连对端、负载均衡消息等等,请求-回复仍然比 TCP 好得多。但它仍然不足以应对实际工作。唯一可以真正信任基本请求-回复模式的情况是在同一进程中的两个线程之间,因为没有网络或独立的服务器进程会“死亡”。

然而,只需做一些额外的工作,这种基础模式就能成为分布式网络中实际工作的良好基础,并且我们得到了一组可靠请求-回复 (RRR) 模式,我喜欢称之为 Pirate 模式(我希望你最终能明白这个笑话)。

根据我的经验,客户端连接服务器的方式大致有三种。每种都需要特定的可靠性处理方法

-

多个客户端直接与单个服务器通信。用例:客户端需要与之通信的单个知名服务器。我们旨在处理的故障类型:服务器崩溃和重启,以及网络断开连接。

-

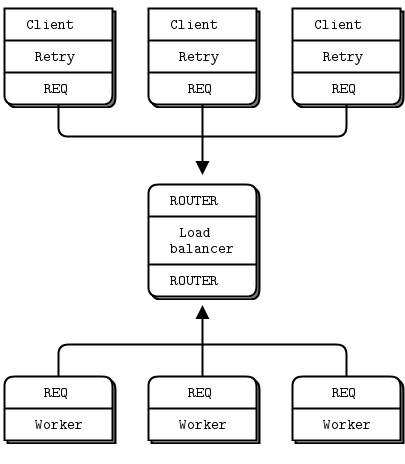

多个客户端与一个代理通信,该代理将工作分发给多个 worker。用例:面向服务的事务处理。我们旨在处理的故障类型:worker 崩溃和重启,worker 忙循环,worker 过载,队列崩溃和重启,以及网络断开连接。

-

多个客户端与多个服务器通信,中间没有代理。用例:分布式服务,如名称解析。我们旨在处理的故障类型:服务崩溃和重启,服务忙循环,服务过载,以及网络断开连接。

这些方法各有优缺点,而且你经常会混合使用它们。我们将详细 بررسی 这三种方法。

客户端可靠性 (Lazy Pirate 模式) #

通过对客户端进行一些更改,我们可以实现非常简单的可靠请求-回复。我们称之为 Lazy Pirate 模式。我们不是进行阻塞接收,而是:

- 轮询 REQ 套接字,并仅在确定收到回复时才进行接收。

- 如果在超时时间内没有收到回复,则重新发送请求。

- 如果几次请求后仍未收到回复,则放弃该事务。

如果你尝试以非严格的发送/接收方式使用 REQ 套接字,你会收到错误(技术上,REQ 套接字实现了一个小型有限状态机来强制执行发送/接收的乒乓式交互,因此错误代码称为“EFSM”)。这在我们想要在 pirate 模式中使用 REQ 时稍微有点烦人,因为我们在收到回复之前可能会发送多次请求。

一个相当不错的暴力解决方案是在出错后关闭并重新打开 REQ 套接字

lpclient: Ada 中的 Lazy Pirate 客户端

lpclient: Basic 中的 Lazy Pirate 客户端

lpclient: C 中的 Lazy Pirate 客户端

#include <czmq.h>

#define REQUEST_TIMEOUT 2500 // msecs, (>1000!)

#define REQUEST_RETRIES 3 // Before we abandon

#define SERVER_ENDPOINT "tcp://:5555"

int main()

{

zsock_t *client = zsock_new_req(SERVER_ENDPOINT);

printf("I: Connecting to server...\n");

assert(client);

int sequence = 0;

int retries_left = REQUEST_RETRIES;

printf("Entering while loop...\n");

while(retries_left) // interrupt needs to be handled

{

// We send a request, then we get a reply

char request[10];

sprintf(request, "%d", ++sequence);

zstr_send(client, request);

int expect_reply = 1;

while(expect_reply)

{

printf("Expecting reply....\n");

zmq_pollitem_t items [] = {{zsock_resolve(client), 0, ZMQ_POLLIN, 0}};

printf("After polling\n");

int rc = zmq_poll(items, 1, REQUEST_TIMEOUT * ZMQ_POLL_MSEC);

printf("Polling Done.. \n");

if (rc == -1)

break; // Interrupted

// Here we process a server reply and exit our loop if the

// reply is valid. If we didn't get a reply we close the

// client socket, open it again and resend the request. We

// try a number times before finally abandoning:

if (items[0].revents & ZMQ_POLLIN)

{

// We got a reply from the server, must match sequence

char *reply = zstr_recv(client);

if(!reply)

break; // interrupted

if (atoi(reply) == sequence)

{

printf("I: server replied OK (%s)\n", reply);

retries_left=REQUEST_RETRIES;

expect_reply = 0;

}

else

{

printf("E: malformed reply from server: %s\n", reply);

}

free(reply);

}

else

{

if(--retries_left == 0)

{

printf("E: Server seems to be offline, abandoning\n");

break;

}

else

{

printf("W: no response from server, retrying...\n");

zsock_destroy(&client);

printf("I: reconnecting to server...\n");

client = zsock_new_req(SERVER_ENDPOINT);

zstr_send(client, request);

}

}

}

zsock_destroy(&client);

return 0;

}

}lpclient: C++ 中的 Lazy Pirate 客户端

//

// Lazy Pirate client

// Use zmq_poll to do a safe request-reply

// To run, start piserver and then randomly kill/restart it

//

#include "zhelpers.hpp"

#include <sstream>

#define REQUEST_TIMEOUT 2500 // msecs, (> 1000!)

#define REQUEST_RETRIES 3 // Before we abandon

// Helper function that returns a new configured socket

// connected to the Hello World server

//

static zmq::socket_t * s_client_socket (zmq::context_t & context) {

std::cout << "I: connecting to server..." << std::endl;

zmq::socket_t * client = new zmq::socket_t (context, ZMQ_REQ);

client->connect ("tcp://:5555");

// Configure socket to not wait at close time

int linger = 0;

client->setsockopt (ZMQ_LINGER, &linger, sizeof (linger));

return client;

}

int main () {

zmq::context_t context (1);

zmq::socket_t * client = s_client_socket (context);

int sequence = 0;

int retries_left = REQUEST_RETRIES;

while (retries_left) {

std::stringstream request;

request << ++sequence;

s_send (*client, request.str());

sleep (1);

bool expect_reply = true;

while (expect_reply) {

// Poll socket for a reply, with timeout

zmq::pollitem_t items[] = {

{ *client, 0, ZMQ_POLLIN, 0 } };

zmq::poll (&items[0], 1, REQUEST_TIMEOUT);

// If we got a reply, process it

if (items[0].revents & ZMQ_POLLIN) {

// We got a reply from the server, must match sequence

std::string reply = s_recv (*client);

if (atoi (reply.c_str ()) == sequence) {

std::cout << "I: server replied OK (" << reply << ")" << std::endl;

retries_left = REQUEST_RETRIES;

expect_reply = false;

}

else {

std::cout << "E: malformed reply from server: " << reply << std::endl;

}

}

else

if (--retries_left == 0) {

std::cout << "E: server seems to be offline, abandoning" << std::endl;

expect_reply = false;

break;

}

else {

std::cout << "W: no response from server, retrying..." << std::endl;

// Old socket will be confused; close it and open a new one

delete client;

client = s_client_socket (context);

// Send request again, on new socket

s_send (*client, request.str());

}

}

}

delete client;

return 0;

}

lpclient: C# 中的 Lazy Pirate 客户端

lpclient: CL 中的 Lazy Pirate 客户端

lpclient: Delphi 中的 Lazy Pirate 客户端

program lpclient;

//

// Lazy Pirate client

// Use zmq_poll to do a safe request-reply

// To run, start lpserver and then randomly kill/restart it

// @author Varga Balazs <bb.varga@gmail.com>

//

{$APPTYPE CONSOLE}

uses

SysUtils

, zmqapi

;

const

REQUEST_TIMEOUT = 2500; // msecs, (> 1000!)

REQUEST_RETRIES = 3; // Before we abandon

SERVER_ENDPOINT = 'tcp://:5555';

var

ctx: TZMQContext;

client: TZMQSocket;

sequence,

retries_left,

expect_reply: Integer;

request,

reply: Utf8String;

poller: TZMQPoller;

begin

ctx := TZMQContext.create;

Writeln( 'I: connecting to server...' );

client := ctx.Socket( stReq );

client.Linger := 0;

client.connect( SERVER_ENDPOINT );

poller := TZMQPoller.Create( true );

poller.Register( client, [pePollIn] );

sequence := 0;

retries_left := REQUEST_RETRIES;

while ( retries_left > 0 ) and not ctx.Terminated do

try

// We send a request, then we work to get a reply

inc( sequence );

request := Format( '%d', [sequence] );

client.send( request );

expect_reply := 1;

while ( expect_reply > 0 ) do

begin

// Poll socket for a reply, with timeout

poller.poll( REQUEST_TIMEOUT );

// Here we process a server reply and exit our loop if the

// reply is valid. If we didn't a reply we close the client

// socket and resend the request. We try a number of times

// before finally abandoning:

if pePollIn in poller.PollItem[0].revents then

begin

// We got a reply from the server, must match sequence

client.recv( reply );

if StrToInt( reply ) = sequence then

begin

Writeln( Format( 'I: server replied OK (%s)', [reply] ) );

retries_left := REQUEST_RETRIES;

expect_reply := 0;

end else

Writeln( Format( 'E: malformed reply from server: %s', [ reply ] ) );

end else

begin

dec( retries_left );

if retries_left = 0 then

begin

Writeln( 'E: server seems to be offline, abandoning' );

break;

end else

begin

Writeln( 'W: no response from server, retrying...' );

// Old socket is confused; close it and open a new one

poller.Deregister( client, [pePollIn] );

client.Free;

Writeln( 'I: reconnecting to server...' );

client := ctx.Socket( stReq );

client.Linger := 0;

client.connect( SERVER_ENDPOINT );

poller.Register( client, [pePollIn] );

// Send request again, on new socket

client.send( request );

end;

end;

end;

except

end;

poller.Free;

ctx.Free;

end.

lpclient: Erlang 中的 Lazy Pirate 客户端

lpclient: Elixir 中的 Lazy Pirate 客户端

lpclient: F# 中的 Lazy Pirate 客户端

lpclient: Felix 中的 Lazy Pirate 客户端

lpclient: Go 中的 Lazy Pirate 客户端

// Lazy Pirate client

// Use zmq_poll to do a safe request-reply

// To run, start lpserver and then randomly kill/restart it

//

// Author: iano <scaly.iano@gmail.com>

// Based on C example

package main

import (

"fmt"

zmq "github.com/alecthomas/gozmq"

"strconv"

"time"

)

const (

REQUEST_TIMEOUT = time.Duration(2500) * time.Millisecond

REQUEST_RETRIES = 3

SERVER_ENDPOINT = "tcp://:5555"

)

func main() {

context, _ := zmq.NewContext()

defer context.Close()

fmt.Println("I: Connecting to server...")

client, _ := context.NewSocket(zmq.REQ)

client.Connect(SERVER_ENDPOINT)

for sequence, retriesLeft := 1, REQUEST_RETRIES; retriesLeft > 0; sequence++ {

fmt.Printf("I: Sending (%d)\n", sequence)

client.Send([]byte(strconv.Itoa(sequence)), 0)

for expectReply := true; expectReply; {

// Poll socket for a reply, with timeout

items := zmq.PollItems{

zmq.PollItem{Socket: client, Events: zmq.POLLIN},

}

if _, err := zmq.Poll(items, REQUEST_TIMEOUT); err != nil {

panic(err) // Interrupted

}

// .split process server reply

// Here we process a server reply and exit our loop if the

// reply is valid. If we didn't a reply we close the client

// socket and resend the request. We try a number of times

// before finally abandoning:

if item := items[0]; item.REvents&zmq.POLLIN != 0 {

// We got a reply from the server, must match sequence

reply, err := item.Socket.Recv(0)

if err != nil {

panic(err) // Interrupted

}

if replyInt, err := strconv.Atoi(string(reply)); replyInt == sequence && err == nil {

fmt.Printf("I: Server replied OK (%s)\n", reply)

retriesLeft = REQUEST_RETRIES

expectReply = false

} else {

fmt.Printf("E: Malformed reply from server: %s", reply)

}

} else if retriesLeft--; retriesLeft == 0 {

fmt.Println("E: Server seems to be offline, abandoning")

client.SetLinger(0)

client.Close()

break

} else {

fmt.Println("W: No response from server, retrying...")

// Old socket is confused; close it and open a new one

client.SetLinger(0)

client.Close()

client, _ = context.NewSocket(zmq.REQ)

client.Connect(SERVER_ENDPOINT)

fmt.Printf("I: Resending (%d)\n", sequence)

// Send request again, on new socket

client.Send([]byte(strconv.Itoa(sequence)), 0)

}

}

}

}

lpclient: Haskell 中的 Lazy Pirate 客户端

{--

Lazy Pirate client in Haskell

--}

module Main where

import System.ZMQ4.Monadic

import System.Random (randomRIO)

import System.Exit (exitSuccess)

import Control.Monad (forever, when)

import Control.Concurrent (threadDelay)

import Data.ByteString.Char8 (pack, unpack)

requestRetries = 3

requestTimeout_ms = 2500

serverEndpoint = "tcp://:5555"

main :: IO ()

main =

runZMQ $ do

liftIO $ putStrLn "I: Connecting to server"

client <- socket Req

connect client serverEndpoint

sendServer 1 requestRetries client

sendServer :: Int -> Int -> Socket z Req -> ZMQ z ()

sendServer _ 0 _ = return ()

sendServer seq retries client = do

send client [] (pack $ show seq)

pollServer seq retries client

pollServer :: Int -> Int -> Socket z Req -> ZMQ z ()

pollServer seq retries client = do

[evts] <- poll requestTimeout_ms [Sock client [In] Nothing]

if In `elem` evts

then do

reply <- receive client

if (read . unpack $ reply) == seq

then do

liftIO $ putStrLn $ "I: Server replied OK " ++ (unpack reply)

sendServer (seq+1) requestRetries client

else do

liftIO $ putStrLn $ "E: malformed reply from server: " ++ (unpack reply)

pollServer seq retries client

else

if retries == 0

then liftIO $ putStrLn "E: Server seems to be offline, abandoning" >> exitSuccess

else do

liftIO $ putStrLn $ "W: No response from server, retrying..."

client' <- socket Req

connect client' serverEndpoint

send client' [] (pack $ show seq)

pollServer seq (retries-1) client'

lpclient: Haxe 中的 Lazy Pirate 客户端

package ;

import haxe.Stack;

import neko.Lib;

import org.zeromq.ZContext;

import org.zeromq.ZFrame;

import org.zeromq.ZMQ;

import org.zeromq.ZMQException;

import org.zeromq.ZMQPoller;

import org.zeromq.ZSocket;

/**

* Lazy Pirate client

* Use zmq_poll to do a safe request-reply

* To run, start lpserver and then randomly kill / restart it.

*

* @see https://zguide.zeromq.cn/page:all#Client-side-Reliability-Lazy-Pirate-Pattern

*/

class LPClient

{

private static inline var REQUEST_TIMEOUT = 2500; // msecs, (> 1000!)

private static inline var REQUEST_RETRIES = 3; // Before we abandon

private static inline var SERVER_ENDPOINT = "tcp://:5555";

public static function main() {

Lib.println("** LPClient (see: https://zguide.zeromq.cn/page:all#Client-side-Reliability-Lazy-Pirate-Pattern)");

var ctx:ZContext = new ZContext();

Lib.println("I: connecting to server ...");

var client = ctx.createSocket(ZMQ_REQ);

if (client == null)

return;

client.connect(SERVER_ENDPOINT);

var sequence = 0;

var retries_left = REQUEST_RETRIES;

var poller = new ZMQPoller();

while (retries_left > 0 && !ZMQ.isInterrupted()) {

// We send a request, then we work to get a reply

var request = Std.string(++sequence);

ZFrame.newStringFrame(request).send(client);

var expect_reply = true;

while (expect_reply) {

poller.registerSocket(client, ZMQ.ZMQ_POLLIN());

// Poll socket for a reply, with timeout

try {

var res = poller.poll(REQUEST_TIMEOUT * 1000);

} catch (e:ZMQException) {

trace("ZMQException #:" + e.errNo + ", str:" + e.str());

trace (Stack.toString(Stack.exceptionStack()));

ctx.destroy();

return;

}

// If we got a reply, process it

if (poller.pollin(1)) {

// We got a reply from the server, must match sequence

var replyFrame = ZFrame.recvFrame(client);

if (replyFrame == null)

break; // Interrupted

if (Std.parseInt(replyFrame.toString()) == sequence) {

Lib.println("I: server replied OK (" + sequence + ")");

retries_left = REQUEST_RETRIES;

expect_reply = false;

} else

Lib.println("E: malformed reply from server: " + replyFrame.toString());

replyFrame.destroy();

} else if (--retries_left == 0) {

Lib.println("E: server seems to be offline, abandoning");

break;

} else {

Lib.println("W: no response from server, retrying...");

// Old socket is confused, close it and open a new one

ctx.destroySocket(client);

Lib.println("I: reconnecting to server...");

client = ctx.createSocket(ZMQ_REQ);

client.connect(SERVER_ENDPOINT);

// Send request again, on new socket

ZFrame.newStringFrame(request).send(client);

}

poller.unregisterAllSockets();

}

}

ctx.destroy();

}

}lpclient: Java 中的 Lazy Pirate 客户端

package guide;

import org.zeromq.SocketType;

import org.zeromq.ZContext;

import org.zeromq.ZMQ;

import org.zeromq.ZMQ.Poller;

import org.zeromq.ZMQ.Socket;

//

// Lazy Pirate client

// Use zmq_poll to do a safe request-reply

// To run, start lpserver and then randomly kill/restart it

//

public class lpclient

{

private final static int REQUEST_TIMEOUT = 2500; // msecs, (> 1000!)

private final static int REQUEST_RETRIES = 3; // Before we abandon

private final static String SERVER_ENDPOINT = "tcp://:5555";

public static void main(String[] argv)

{

try (ZContext ctx = new ZContext()) {

System.out.println("I: connecting to server");

Socket client = ctx.createSocket(SocketType.REQ);

assert (client != null);

client.connect(SERVER_ENDPOINT);

Poller poller = ctx.createPoller(1);

poller.register(client, Poller.POLLIN);

int sequence = 0;

int retriesLeft = REQUEST_RETRIES;

while (retriesLeft > 0 && !Thread.currentThread().isInterrupted()) {

// We send a request, then we work to get a reply

String request = String.format("%d", ++sequence);

client.send(request);

int expect_reply = 1;

while (expect_reply > 0) {

// Poll socket for a reply, with timeout

int rc = poller.poll(REQUEST_TIMEOUT);

if (rc == -1)

break; // Interrupted

// Here we process a server reply and exit our loop if the

// reply is valid. If we didn't a reply we close the client

// socket and resend the request. We try a number of times

// before finally abandoning:

if (poller.pollin(0)) {

// We got a reply from the server, must match

// getSequence

String reply = client.recvStr();

if (reply == null)

break; // Interrupted

if (Integer.parseInt(reply) == sequence) {

System.out.printf(

"I: server replied OK (%s)\n", reply

);

retriesLeft = REQUEST_RETRIES;

expect_reply = 0;

}

else System.out.printf(

"E: malformed reply from server: %s\n", reply

);

}

else if (--retriesLeft == 0) {

System.out.println(

"E: server seems to be offline, abandoning\n"

);

break;

}

else {

System.out.println(

"W: no response from server, retrying\n"

);

// Old socket is confused; close it and open a new one

poller.unregister(client);

ctx.destroySocket(client);

System.out.println("I: reconnecting to server\n");

client = ctx.createSocket(SocketType.REQ);

client.connect(SERVER_ENDPOINT);

poller.register(client, Poller.POLLIN);

// Send request again, on new socket

client.send(request);

}

}

}

}

}

}

lpclient: Julia 中的 Lazy Pirate 客户端

lpclient: Lua 中的 Lazy Pirate 客户端

--

-- Lazy Pirate client

-- Use zmq_poll to do a safe request-reply

-- To run, start lpserver and then randomly kill/restart it

--

-- Author: Robert G. Jakabosky <bobby@sharedrealm.com>

--

require"zmq"

require"zmq.poller"

require"zhelpers"

local REQUEST_TIMEOUT = 2500 -- msecs, (> 1000!)

local REQUEST_RETRIES = 3 -- Before we abandon

-- Helper function that returns a new configured socket

-- connected to the Hello World server

--

local function s_client_socket(context)

printf ("I: connecting to server...\n")

local client = context:socket(zmq.REQ)

client:connect("tcp://:5555")

-- Configure socket to not wait at close time

client:setopt(zmq.LINGER, 0)

return client

end

s_version_assert (2, 1)

local context = zmq.init(1)

local client = s_client_socket (context)

local sequence = 0

local retries_left = REQUEST_RETRIES

local expect_reply = true

local poller = zmq.poller(1)

local function client_cb()

-- We got a reply from the server, must match sequence

--local reply = assert(client:recv(zmq.NOBLOCK))

local reply = client:recv()

if (tonumber(reply) == sequence) then

printf ("I: server replied OK (%s)\n", reply)

retries_left = REQUEST_RETRIES

expect_reply = false

else

printf ("E: malformed reply from server: %s\n", reply)

end

end

poller:add(client, zmq.POLLIN, client_cb)

while (retries_left > 0) do

sequence = sequence + 1

-- We send a request, then we work to get a reply

local request = string.format("%d", sequence)

client:send(request)

expect_reply = true

while (expect_reply) do

-- Poll socket for a reply, with timeout

local cnt = assert(poller:poll(REQUEST_TIMEOUT * 1000))

-- Check if there was no reply

if (cnt == 0) then

retries_left = retries_left - 1

if (retries_left == 0) then

printf ("E: server seems to be offline, abandoning\n")

break

else

printf ("W: no response from server, retrying...\n")

-- Old socket is confused; close it and open a new one

poller:remove(client)

client:close()

client = s_client_socket (context)

poller:add(client, zmq.POLLIN, client_cb)

-- Send request again, on new socket

client:send(request)

end

end

end

end

client:close()

context:term()

lpclient: Node.js 中的 Lazy Pirate 客户端

lpclient: Objective-C 中的 Lazy Pirate 客户端

lpclient: ooc 中的 Lazy Pirate 客户端

lpclient: Perl 中的 Lazy Pirate 客户端

# Lazy Pirate client in Perl

# Use poll to do a safe request-reply

# To run, start lpserver.pl then randomly kill/restart it

use strict;

use warnings;

use v5.10;

use ZMQ::FFI;

use ZMQ::FFI::Constants qw(ZMQ_REQ);

use EV;

my $REQUEST_TIMEOUT = 2500; # msecs

my $REQUEST_RETRIES = 3; # Before we abandon

my $SERVER_ENDPOINT = 'tcp://:5555';

my $ctx = ZMQ::FFI->new();

say 'I: connecting to server...';

my $client = $ctx->socket(ZMQ_REQ);

$client->connect($SERVER_ENDPOINT);

my $sequence = 0;

my $retries_left = $REQUEST_RETRIES;

REQUEST_LOOP:

while ($retries_left) {

# We send a request, then we work to get a reply

my $request = ++$sequence;

$client->send($request);

my $expect_reply = 1;

RETRY_LOOP:

while ($expect_reply) {

# Poll socket for a reply, with timeout

EV::once $client->get_fd, EV::READ, $REQUEST_TIMEOUT / 1000, sub {

my ($revents) = @_;

# Here we process a server reply and exit our loop if the

# reply is valid. If we didn't get a reply we close the client

# socket and resend the request. We try a number of times

# before finally abandoning:

if ($revents == EV::READ) {

while ($client->has_pollin) {

# We got a reply from the server, must match sequence

my $reply = $client->recv();

if ($reply == $sequence) {

say "I: server replied OK ($reply)";

$retries_left = $REQUEST_RETRIES;

$expect_reply = 0;

}

else {

say "E: malformed reply from server: $reply";

}

}

}

elsif (--$retries_left == 0) {

say 'E: server seems to be offline, abandoning';

}

else {

say "W: no response from server, retrying...";

# Old socket is confused; close it and open a new one

$client->close;

say "reconnecting to server...";

$client = $ctx->socket(ZMQ_REQ);

$client->connect($SERVER_ENDPOINT);

# Send request again, on new socket

$client->send($request);

}

};

last RETRY_LOOP if $retries_left == 0;

EV::run;

}

}

lpclient: PHP 中的 Lazy Pirate 客户端

<?php

/*

* Lazy Pirate client

* Use zmq_poll to do a safe request-reply

* To run, start lpserver and then randomly kill/restart it

*

* @author Ian Barber <ian(dot)barber(at)gmail(dot)com>

*/

define("REQUEST_TIMEOUT", 2500); // msecs, (> 1000!)

define("REQUEST_RETRIES", 3); // Before we abandon

/*

* Helper function that returns a new configured socket

* connected to the Hello World server

*/

function client_socket(ZMQContext $context)

{

echo "I: connecting to server...", PHP_EOL;

$client = new ZMQSocket($context,ZMQ::SOCKET_REQ);

$client->connect("tcp://:5555");

// Configure socket to not wait at close time

$client->setSockOpt(ZMQ::SOCKOPT_LINGER, 0);

return $client;

}

$context = new ZMQContext();

$client = client_socket($context);

$sequence = 0;

$retries_left = REQUEST_RETRIES;

$read = $write = array();

while ($retries_left) {

// We send a request, then we work to get a reply

$client->send(++$sequence);

$expect_reply = true;

while ($expect_reply) {

// Poll socket for a reply, with timeout

$poll = new ZMQPoll();

$poll->add($client, ZMQ::POLL_IN);

$events = $poll->poll($read, $write, REQUEST_TIMEOUT);

// If we got a reply, process it

if ($events > 0) {

// We got a reply from the server, must match sequence

$reply = $client->recv();

if (intval($reply) == $sequence) {

printf ("I: server replied OK (%s)%s", $reply, PHP_EOL);

$retries_left = REQUEST_RETRIES;

$expect_reply = false;

} else {

printf ("E: malformed reply from server: %s%s", $reply, PHP_EOL);

}

} elseif (--$retries_left == 0) {

echo "E: server seems to be offline, abandoning", PHP_EOL;

break;

} else {

echo "W: no response from server, retrying...", PHP_EOL;

// Old socket will be confused; close it and open a new one

$client = client_socket($context);

// Send request again, on new socket

$client->send($sequence);

}

}

}

lpclient: Python 中的 Lazy Pirate 客户端

#

# Lazy Pirate client

# Use zmq_poll to do a safe request-reply

# To run, start lpserver and then randomly kill/restart it

#

# Author: Daniel Lundin <dln(at)eintr(dot)org>

#

import itertools

import logging

import sys

import zmq

logging.basicConfig(format="%(levelname)s: %(message)s", level=logging.INFO)

REQUEST_TIMEOUT = 2500

REQUEST_RETRIES = 3

SERVER_ENDPOINT = "tcp://:5555"

context = zmq.Context()

logging.info("Connecting to server…")

client = context.socket(zmq.REQ)

client.connect(SERVER_ENDPOINT)

for sequence in itertools.count():

request = str(sequence).encode()

logging.info("Sending (%s)", request)

client.send(request)

retries_left = REQUEST_RETRIES

while True:

if (client.poll(REQUEST_TIMEOUT) & zmq.POLLIN) != 0:

reply = client.recv()

if int(reply) == sequence:

logging.info("Server replied OK (%s)", reply)

retries_left = REQUEST_RETRIES

break

else:

logging.error("Malformed reply from server: %s", reply)

continue

retries_left -= 1

logging.warning("No response from server")

# Socket is confused. Close and remove it.

client.setsockopt(zmq.LINGER, 0)

client.close()

if retries_left == 0:

logging.error("Server seems to be offline, abandoning")

sys.exit()

logging.info("Reconnecting to server…")

# Create new connection

client = context.socket(zmq.REQ)

client.connect(SERVER_ENDPOINT)

logging.info("Resending (%s)", request)

client.send(request)

lpclient: Q 中的 Lazy Pirate 客户端

lpclient: Racket 中的 Lazy Pirate 客户端

lpclient: Ruby 中的 Lazy Pirate 客户端

#!/usr/bin/env ruby

# Author: Han Holl <han.holl@pobox.com>

require 'rubygems'

require 'ffi-rzmq'

class LPClient

def initialize(connect, retries = nil, timeout = nil)

@connect = connect

@retries = (retries || 3).to_i

@timeout = (timeout || 10).to_i

@ctx = ZMQ::Context.new(1)

client_sock

at_exit do

@socket.close

end

end

def client_sock

@socket = @ctx.socket(ZMQ::REQ)

@socket.setsockopt(ZMQ::LINGER, 0)

@socket.connect(@connect)

end

def send(message)

@retries.times do |tries|

raise("Send: #{message} failed") unless @socket.send(message)

if ZMQ.select( [@socket], nil, nil, @timeout)

yield @socket.recv

return

else

@socket.close

client_sock

end

end

raise 'Server down'

end

end

if $0 == __FILE__

server = LPClient.new(ARGV[0] || "tcp://:5555", ARGV[1], ARGV[2])

count = 0

loop do

request = "#{count}"

count += 1

server.send(request) do |reply|

if reply == request

puts("I: server replied OK (#{reply})")

else

puts("E: malformed reply from server: #{reply}")

end

end

end

puts 'success'

end

lpclient: Rust 中的 Lazy Pirate 客户端

use std::convert::TryInto;

const REQUEST_TIMEOUT: i64 = 2500;

const REQUEST_RETRIES: usize = 3;

const SERVER_ENDPOINT: &'static str = "tcp://:5555";

fn connect(context: &zmq::Context) -> zmq::Socket {

let socket = context.socket(zmq::REQ).unwrap();

socket.set_linger(0).unwrap();

socket.connect(SERVER_ENDPOINT).unwrap();

socket

}

fn reconnect(context: &zmq::Context, socket: zmq::Socket) -> zmq::Socket {

drop(socket);

connect(context)

}

fn main() {

let mut retries_left = REQUEST_RETRIES;

let context = zmq::Context::new();

let mut socket = connect(&context);

let mut i = 0i64;

loop {

let value = i.to_le_bytes();

i += 1;

while retries_left > 0 {

retries_left -= 1;

let request = zmq::Message::from(&value.as_slice());

println!("Sending request {request:?}");

socket.send(request, 0).unwrap();

if socket.poll(zmq::POLLIN, REQUEST_TIMEOUT).unwrap() != 0 {

let reply = socket.recv_msg(0).unwrap();

let reply_content =

TryInto::<[u8; 8]>::try_into(&*reply).and_then(|x| Ok(i64::from_le_bytes(x)));

if let Ok(i) = reply_content {

println!("Server replied OK {i}");

retries_left = REQUEST_RETRIES;

break;

} else {

println!("Malformed message: {reply:?}");

continue;

}

}

println!("No response from Server");

println!("Reconnecting server...");

socket = reconnect(&context, socket);

println!("Resending Request");

}

if retries_left == 0 {

println!("Server is offline. Goodbye...");

return;

}

}

}

lpclient: Scala 中的 Lazy Pirate 客户端

lpclient: Tcl 中的 Lazy Pirate 客户端

#

# Lazy Pirate client

# Use zmq_poll to do a safe request-reply

# To run, start lpserver and then randomly kill/restart it

#

package require zmq

set REQUEST_TIMEOUT 2500 ;# msecs, (> 1000!)

set REQUEST_RETRIES 3 ;# Before we abandon

set SERVER_ENDPOINT "tcp://:5555"

zmq context context

puts "I: connecting to server..."

zmq socket client context REQ

client connect $SERVER_ENDPOINT

set sequence 0

set retries_left $REQUEST_RETRIES

while {$retries_left} {

# We send a request, then we work to get a reply

client send [incr sequence]

set expect_reply 1

while {$expect_reply} {

# Poll socket for a reply, with timeout

set rpoll_set [zmq poll {{client {POLLIN}}} $REQUEST_TIMEOUT]

# If we got a reply, process it

if {[llength $rpoll_set] && [lindex $rpoll_set 0 0] eq "client"} {

# We got a reply from the server, must match sequence

set reply [client recv]

if {$reply eq $sequence} {

puts "I: server replied OK ($reply)"

set retries_left $REQUEST_RETRIES

set expect_reply 0

} else {

puts "E: malformed reply from server: $reply"

}

} elseif {[incr retries_left -1] <= 0} {

puts "E: server seems to be offline, abandoning"

set retries_left 0

break

} else {

puts "W: no response from server, retrying..."

# Old socket is confused; close it and open a new one

client close

puts "I: connecting to server..."

zmq socket client context REQ

client connect $SERVER_ENDPOINT

# Send request again, on new socket

client send $sequence

}

}

}

client close

context term

lpclient: OCaml 中的 Lazy Pirate 客户端

将其与配套的服务器一起运行

lpserver: Ada 中的 Lazy Pirate 服务器

lpserver: Basic 中的 Lazy Pirate 服务器

lpserver: C 中的 Lazy Pirate 服务器

// Lazy Pirate server

// Binds REQ socket to tcp://*:5555

// Like hwserver except:

// - echoes request as-is

// - randomly runs slowly, or exits to simulate a crash.

#include "zhelpers.h"

#include <unistd.h>

int main (void)

{

srandom ((unsigned) time (NULL));

void *context = zmq_ctx_new ();

void *server = zmq_socket (context, ZMQ_REP);

zmq_bind (server, "tcp://*:5555");

int cycles = 0;

while (1) {

char *request = s_recv (server);

cycles++;

// Simulate various problems, after a few cycles

if (cycles > 3 && randof (3) == 0) {

printf ("I: simulating a crash\n");

break;

}

else

if (cycles > 3 && randof (3) == 0) {

printf ("I: simulating CPU overload\n");

sleep (2);

}

printf ("I: normal request (%s)\n", request);

sleep (1); // Do some heavy work

s_send (server, request);

free (request);

}

zmq_close (server);

zmq_ctx_destroy (context);

return 0;

}

lpserver: C++ 中的 Lazy Pirate 服务器

//

// Lazy Pirate server

// Binds REQ socket to tcp://*:5555

// Like hwserver except:

// - echoes request as-is

// - randomly runs slowly, or exits to simulate a crash.

//

#include "zhelpers.hpp"

int main ()

{

srandom ((unsigned) time (NULL));

zmq::context_t context(1);

zmq::socket_t server(context, ZMQ_REP);

server.bind("tcp://*:5555");

int cycles = 0;

while (1) {

std::string request = s_recv (server);

cycles++;

// Simulate various problems, after a few cycles

if (cycles > 3 && within (3) == 0) {

std::cout << "I: simulating a crash" << std::endl;

break;

}

else

if (cycles > 3 && within (3) == 0) {

std::cout << "I: simulating CPU overload" << std::endl;

sleep (2);

}

std::cout << "I: normal request (" << request << ")" << std::endl;

sleep (1); // Do some heavy work

s_send (server, request);

}

return 0;

}

lpserver: C# 中的 Lazy Pirate 服务器

lpserver: CL 中的 Lazy Pirate 服务器

lpserver: Delphi 中的 Lazy Pirate 服务器

program lpserver;

//

// Lazy Pirate server

// Binds REQ socket to tcp://*:5555

// Like hwserver except:

// - echoes request as-is

// - randomly runs slowly, or exits to simulate a crash.

// @author Varga Balazs <bb.varga@gmail.com>

//

{$APPTYPE CONSOLE}

uses

SysUtils

, zmqapi

;

var

context: TZMQContext;

server: TZMQSocket;

cycles: Integer;

request: Utf8String;

begin

Randomize;

context := TZMQContext.create;

server := context.socket( stRep );

server.bind( 'tcp://*:5555' );

cycles := 0;

while not context.Terminated do

try

server.recv( request );

inc( cycles );

// Simulate various problems, after a few cycles

if ( cycles > 3 ) and ( random(3) = 0) then

begin

Writeln( 'I: simulating a crash' );

break;

end else

if ( cycles > 3 ) and ( random(3) = 0 ) then

begin

Writeln( 'I: simulating CPU overload' );

sleep(2000);

end;

Writeln( Format( 'I: normal request (%s)', [request] ) );

sleep (1000); // Do some heavy work

server.send( request );

except

end;

context.Free;

end.

lpserver: Erlang 中的 Lazy Pirate 服务器

lpserver: Elixir 中的 Lazy Pirate 服务器

lpserver: F# 中的 Lazy Pirate 服务器

lpserver: Felix 中的 Lazy Pirate 服务器

lpserver: Go 中的 Lazy Pirate 服务器

// Lazy Pirate server

// Binds REQ socket to tcp://*:5555

// Like hwserver except:

// - echoes request as-is

// - randomly runs slowly, or exits to simulate a crash.

//

// Author: iano <scaly.iano@gmail.com>

// Based on C example

package main

import (

"fmt"

zmq "github.com/alecthomas/gozmq"

"math/rand"

"time"

)

const (

SERVER_ENDPOINT = "tcp://*:5555"

)

func main() {

src := rand.NewSource(time.Now().UnixNano())

random := rand.New(src)

context, _ := zmq.NewContext()

defer context.Close()

server, _ := context.NewSocket(zmq.REP)

defer server.Close()

server.Bind(SERVER_ENDPOINT)

for cycles := 1; ; cycles++ {

request, _ := server.Recv(0)

// Simulate various problems, after a few cycles

if cycles > 3 {

switch r := random.Intn(3); r {

case 0:

fmt.Println("I: Simulating a crash")

return

case 1:

fmt.Println("I: simulating CPU overload")

time.Sleep(2 * time.Second)

}

}

fmt.Printf("I: normal request (%s)\n", request)

time.Sleep(1 * time.Second) // Do some heavy work

server.Send(request, 0)

}

}

lpserver: Haskell 中的 Lazy Pirate 服务器

{--

Lazy Pirate server in Haskell

--}

module Main where

import System.ZMQ4.Monadic

import System.Random (randomRIO)

import System.Exit (exitSuccess)

import Control.Monad (forever, when)

import Control.Concurrent (threadDelay)

import Data.ByteString.Char8 (pack, unpack)

main :: IO ()

main =

runZMQ $ do

server <- socket Rep

bind server "tcp://*:5555"

sendClient 0 server

sendClient :: Int -> Socket z Rep -> ZMQ z ()

sendClient cycles server = do

req <- receive server

chance <- liftIO $ randomRIO (0::Int, 3)

when (cycles > 3 && chance == 0) $ do

liftIO crash

chance' <- liftIO $ randomRIO (0::Int, 3)

when (cycles > 3 && chance' == 0) $ do

liftIO overload

liftIO $ putStrLn $ "I: normal request " ++ (unpack req)

liftIO $ threadDelay $ 1 * 1000 * 1000

send server [] req

sendClient (cycles+1) server

crash = do

putStrLn "I: Simulating a crash"

exitSuccess

overload = do

putStrLn "I: Simulating CPU overload"

threadDelay $ 2 * 1000 * 1000

lpserver: Haxe 中的 Lazy Pirate 服务器

package ;

import neko.Lib;

import neko.Sys;

import org.zeromq.ZContext;

import org.zeromq.ZFrame;

import org.zeromq.ZMQ;

/**

* Lazy Pirate server

* Binds REP socket to tcp://*:5555

* Like HWServer except:

* - echoes request as-is

* - randomly runs slowly, or exists to simulate a crash.

*

* @see https://zguide.zeromq.cn/page:all#Client-side-Reliability-Lazy-Pirate-Pattern

*

*/

class LPServer

{

public static function main() {

Lib.println("** LPServer (see: https://zguide.zeromq.cn/page:all#Client-side-Reliability-Lazy-Pirate-Pattern)");

var ctx = new ZContext();

var server = ctx.createSocket(ZMQ_REP);

server.bind("tcp://*:5555");

var cycles = 0;

while (true) {

var requestFrame = ZFrame.recvFrame(server);

cycles++;

// Simulate various problems, after a few cycles

if (cycles > 3 && ZHelpers.randof(3) == 0) {

Lib.println("I: simulating a crash");

break;

}

else if (cycles > 3 && ZHelpers.randof(3) == 0) {

Lib.println("I: simulating CPU overload");

Sys.sleep(2.0);

}

Lib.println("I: normal request (" + requestFrame.toString() + ")");

Sys.sleep(1.0); // Do some heavy work

requestFrame.send(server);

requestFrame.destroy();

}

server.close();

ctx.destroy();

}

}lpserver: Java 中的 Lazy Pirate 服务器

package guide;

import java.util.Random;

import org.zeromq.SocketType;

import org.zeromq.ZMQ;

import org.zeromq.ZMQ.Socket;

import org.zeromq.ZContext;

//

// Lazy Pirate server

// Binds REQ socket to tcp://*:5555

// Like hwserver except:

// - echoes request as-is

// - randomly runs slowly, or exits to simulate a crash.

//

public class lpserver

{

public static void main(String[] argv) throws Exception

{

Random rand = new Random(System.nanoTime());

try (ZContext context = new ZContext()) {

Socket server = context.createSocket(SocketType.REP);

server.bind("tcp://*:5555");

int cycles = 0;

while (true) {

String request = server.recvStr();

cycles++;

// Simulate various problems, after a few cycles

if (cycles > 3 && rand.nextInt(3) == 0) {

System.out.println("I: simulating a crash");

break;

}

else if (cycles > 3 && rand.nextInt(3) == 0) {

System.out.println("I: simulating CPU overload");

Thread.sleep(2000);

}

System.out.printf("I: normal request (%s)\n", request);

Thread.sleep(1000); // Do some heavy work

server.send(request);

}

}

}

}

lpserver: Julia 中的 Lazy Pirate 服务器

lpserver: Lua 中的 Lazy Pirate 服务器

--

-- Lazy Pirate server

-- Binds REQ socket to tcp://*:5555

-- Like hwserver except:

-- - echoes request as-is

-- - randomly runs slowly, or exits to simulate a crash.

--

-- Author: Robert G. Jakabosky <bobby@sharedrealm.com>

--

require"zmq"

require"zhelpers"

math.randomseed(os.time())

local context = zmq.init(1)

local server = context:socket(zmq.REP)

server:bind("tcp://*:5555")

local cycles = 0

while true do

local request = server:recv()

cycles = cycles + 1

-- Simulate various problems, after a few cycles

if (cycles > 3 and randof (3) == 0) then

printf("I: simulating a crash\n")

break

elseif (cycles > 3 and randof (3) == 0) then

printf("I: simulating CPU overload\n")

s_sleep(2000)

end

printf("I: normal request (%s)\n", request)

s_sleep(1000) -- Do some heavy work

server:send(request)

end

server:close()

context:term()

lpserver: Node.js 中的 Lazy Pirate 服务器

lpserver: Objective-C 中的 Lazy Pirate 服务器

lpserver: ooc 中的 Lazy Pirate 服务器

lpserver: Perl 中的 Lazy Pirate 服务器

# Lazy Pirate server in Perl

# Binds REQ socket to tcp://*:5555

# Like hwserver except:

# - echoes request as-is

# - randomly runs slowly, or exits to simulate a crash.

use strict;

use warnings;

use v5.10;

use ZMQ::FFI;

use ZMQ::FFI::Constants qw(ZMQ_REP);

my $context = ZMQ::FFI->new();

my $server = $context->socket(ZMQ_REP);

$server->bind('tcp://*:5555');

my $cycles = 0;

SERVER_LOOP:

while (1) {

my $request = $server->recv();

$cycles++;

# Simulate various problems, after a few cycles

if ($cycles > 3 && int(rand(3)) == 0) {

say "I: simulating a crash";

last SERVER_LOOP;

}

elsif ($cycles > 3 && int(rand(3)) == 0) {

say "I: simulating CPU overload";

sleep 2;

}

say "I: normal request ($request)";

sleep 1; # Do some heavy work

$server->send($request);

}

lpserver: PHP 中的 Lazy Pirate 服务器

<?php

/*

* Lazy Pirate server

* Binds REQ socket to tcp://*:5555

* Like hwserver except:

* - echoes request as-is

* - randomly runs slowly, or exits to simulate a crash.

*

* @author Ian Barber <ian(dot)barber(at)gmail(dot)com>

*/

$context = new ZMQContext();

$server = new ZMQSocket($context, ZMQ::SOCKET_REP);

$server->bind("tcp://*:5555");

$cycles = 0;

while (true) {

$request = $server->recv();

$cycles++;

// Simulate various problems, after a few cycles

if ($cycles > 3 && rand(0, 3) == 0) {

echo "I: simulating a crash", PHP_EOL;

break;

} elseif ($cycles > 3 && rand(0, 3) == 0) {

echo "I: simulating CPU overload", PHP_EOL;

sleep(5);

}

printf ("I: normal request (%s)%s", $request, PHP_EOL);

sleep(1); // Do some heavy work

$server->send($request);

}

lpserver: Python 中的 Lazy Pirate 服务器

#

# Lazy Pirate server

# Binds REQ socket to tcp://*:5555

# Like hwserver except:

# - echoes request as-is

# - randomly runs slowly, or exits to simulate a crash.

#

# Author: Daniel Lundin <dln(at)eintr(dot)org>

#

from random import randint

import itertools

import logging

import time

import zmq

logging.basicConfig(format="%(levelname)s: %(message)s", level=logging.INFO)

context = zmq.Context()

server = context.socket(zmq.REP)

server.bind("tcp://*:5555")

for cycles in itertools.count():

request = server.recv()

# Simulate various problems, after a few cycles

if cycles > 3 and randint(0, 3) == 0:

logging.info("Simulating a crash")

break

elif cycles > 3 and randint(0, 3) == 0:

logging.info("Simulating CPU overload")

time.sleep(2)

logging.info("Normal request (%s)", request)

time.sleep(1) # Do some heavy work

server.send(request)

lpserver: Q 中的 Lazy Pirate 服务器

lpserver: Racket 中的 Lazy Pirate 服务器

lpserver: Ruby 中的 Lazy Pirate 服务器

#!/usr/bin/env ruby

# Author: Han Holl <han.holl@pobox.com>

require 'rubygems'

require 'zmq'

class LPServer

def initialize(connect)

@ctx = ZMQ::Context.new(1)

@socket = @ctx.socket(ZMQ::REP)

@socket.bind(connect)

end

def run

begin

loop do

rsl = yield @socket.recv

@socket.send rsl

end

ensure

@socket.close

@ctx.close

end

end

end

if $0 == __FILE__

cycles = 0

srand

LPServer.new(ARGV[0] || "tcp://*:5555").run do |request|

cycles += 1

if cycles > 3

if rand(3) == 0

puts "I: simulating a crash"

break

elsif rand(3) == 0

puts "I: simulating CPU overload"

sleep(3)

end

end

puts "I: normal request (#{request})"

sleep(1)

request

end

end

lpserver: Rust 中的 Lazy Pirate 服务器

use rand::{thread_rng, Rng};

use std::time::Duration;

fn main() {

let context = zmq::Context::new();

let server = context.socket(zmq::REP).unwrap();

server.bind("tcp://*:5555").unwrap();

let mut i = 0;

loop {

i += 1;

let request = server.recv_msg(0).unwrap();

println!("Got Request: {request:?}");

server.send(request, 0).unwrap();

std::thread::sleep(Duration::from_secs(1));

if (i > 3) && (thread_rng().gen_range(0..3) == 0) {

// simulate a crash

println!("Oh no! Server crashed.");

break;

}

if (i > 3) && (thread_rng().gen_range(0..3) == 0) {

// simulate overload

println!("Server is busy.");

std::thread::sleep(Duration::from_secs(2));

}

}

}

lpserver: Scala 中的 Lazy Pirate 服务器

import org.zeromq.ZMQ;

import java.util.Random;

/*

* Lazy Pirate server

* @author Zac Li

* @email zac.li.cmu@gmail.com

*/

object lpserver{

def main (args : Array[String]) {

val rand = new Random(System.nanoTime())

val context = ZMQ.context(1)

val server = context.socket(ZMQ.REP)

server.bind("tcp://*:5555")

val cycles = 0;

while (true) {

val request = server.recvStr()

cycles++

// Simulate various problems, after a few cycles

if (cycles > 3 && rand.nextInt(3) == 0) {

println("I: simulating a crash")

break

} else if (cycles > 3 && rand.nextInt(3) == 0) {

println("I: simulating CPU overload")

Thread.sleep(2000)

}

println(f"I: normal request (%s)\n", request)

Thread.sleep(1000)

server.send(request)

}

server close()

context term()

}

}lpserver: Tcl 中的 Lazy Pirate 服务器

#

# Lazy Pirate server

# Binds REQ socket to tcp://*:5555

# Like hwserver except:

# - echoes request as-is

# - randomly runs slowly, or exits to simulate a crash.

#

package require zmq

expr {srand([pid])}

zmq context context

zmq socket server context REP

server bind "tcp://*:5555"

set cycles 0

while {1} {

set request [server recv]

incr cycles

# Simulate various problems, after a few cycles

if {$cycles > 3 && int(rand()*3) == 0} {

puts "I: simulating a crash"

break;

} elseif {$cycles > 3 && int(rand()*3) == 0} {

puts "I: simulating CPU overload"

after 2000

}

puts "I: normal request ($request)"

after 1000 ;# Do some heavy work

server send $request

}

server close

context term

lpserver: OCaml 中的 Lazy Pirate 服务器

要运行此测试用例,请在两个控制台窗口中分别启动客户端和服务器。服务器将在发送几条消息后随机出现异常行为。你可以检查客户端的响应。以下是服务器的典型输出:

I: normal request (1)

I: normal request (2)

I: normal request (3)

I: simulating CPU overload

I: normal request (4)

I: simulating a crash

以下是客户端的响应:

I: connecting to server...

I: server replied OK (1)

I: server replied OK (2)

I: server replied OK (3)

W: no response from server, retrying...

I: connecting to server...

W: no response from server, retrying...

I: connecting to server...

E: server seems to be offline, abandoning

客户端对每条消息进行编号,并检查回复是否完全按顺序返回:确保没有请求或回复丢失,也没有回复返回多次或乱序。多运行几次测试,直到你确信这个机制确实有效。在生产应用中不需要序号;它们只是帮助我们验证我们的设计。

客户端使用 REQ 套接字,并执行暴力关闭/重新打开操作,因为 REQ 套接字强制执行严格的发送/接收循环。你可能会想使用 DEALER 代替,但这并不是一个好主意。首先,这意味着你需要模拟 REQ 在 envelope(信封)方面做的特殊处理(如果你忘了那是什么,这说明你不想自己去实现它)。其次,这意味着你可能会收到意料之外的回复。

仅在客户端处理失败的情况适用于一组客户端与单个服务器通信的场景。它可以处理服务器崩溃,但仅限于恢复意味着重新启动同一台服务器的情况。如果存在永久性错误,例如服务器硬件电源故障,这种方法将不起作用。由于服务器中的应用程序代码通常是任何架构中最大的故障源,因此依赖于单个服务器并非明智之举。

所以,优缺点:

- 优点:易于理解和实现。

- 优点:易于与现有客户端和服务器应用程序代码一起工作。

- 优点:ZeroMQ 会自动重试实际的重连直到成功。

- 缺点:不会故障转移到备用或备选服务器。

基本可靠排队 (Simple Pirate 模式) #

我们的第二种方法是在 Lazy Pirate 模式的基础上扩展一个队列代理,该代理允许我们透明地与多个服务器通信,我们可以更准确地称这些服务器为“worker”。我们将分阶段开发这个模式,从一个最简工作模型 Simple Pirate 模式开始。

在所有这些 Pirate 模式中,worker 都是无状态的。如果应用程序需要一些共享状态,例如共享数据库,我们在设计消息框架时并不知道这些。拥有一个队列代理意味着 worker 可以随时加入和离开,而客户端对此一无所知。如果一个 worker 死了,另一个会接管。这是一个不错、简单的拓扑结构,只有一个真正的弱点,那就是中心队列本身,它可能变得难以管理,并成为单点故障。

队列代理的基础是 第三章 - 高级请求-回复模式 中的负载均衡代理。为了处理死亡或阻塞的 worker,我们需要做最少的事情是什么?结果是,出乎意料地少。客户端已经有了重试机制。所以使用负载均衡模式会工作得很好。这符合 ZeroMQ 的哲学,即我们可以通过在中间插入简单的代理来扩展请求-回复这样的点对点模式。

我们不需要特殊的客户端;我们仍然使用 Lazy Pirate 客户端。以下是队列,它与负载均衡代理的主要任务完全相同:

spqueue: Ada 中的 Simple Pirate 队列

spqueue: Basic 中的 Simple Pirate 队列

spqueue: C 中的 Simple Pirate 队列

// Simple Pirate broker

// This is identical to load-balancing pattern, with no reliability

// mechanisms. It depends on the client for recovery. Runs forever.

#include "czmq.h"

#define WORKER_READY "\001" // Signals worker is ready

int main (void)

{

zctx_t *ctx = zctx_new ();

void *frontend = zsocket_new (ctx, ZMQ_ROUTER);

void *backend = zsocket_new (ctx, ZMQ_ROUTER);

zsocket_bind (frontend, "tcp://*:5555"); // For clients

zsocket_bind (backend, "tcp://*:5556"); // For workers

// Queue of available workers

zlist_t *workers = zlist_new ();

// The body of this example is exactly the same as lbbroker2.

// .skip

while (true) {

zmq_pollitem_t items [] = {

{ backend, 0, ZMQ_POLLIN, 0 },

{ frontend, 0, ZMQ_POLLIN, 0 }

};

// Poll frontend only if we have available workers

int rc = zmq_poll (items, zlist_size (workers)? 2: 1, -1);

if (rc == -1)

break; // Interrupted

// Handle worker activity on backend

if (items [0].revents & ZMQ_POLLIN) {

// Use worker identity for load-balancing

zmsg_t *msg = zmsg_recv (backend);

if (!msg)

break; // Interrupted

zframe_t *identity = zmsg_unwrap (msg);

zlist_append (workers, identity);

// Forward message to client if it's not a READY

zframe_t *frame = zmsg_first (msg);

if (memcmp (zframe_data (frame), WORKER_READY, 1) == 0)

zmsg_destroy (&msg);

else

zmsg_send (&msg, frontend);

}

if (items [1].revents & ZMQ_POLLIN) {

// Get client request, route to first available worker

zmsg_t *msg = zmsg_recv (frontend);

if (msg) {

zmsg_wrap (msg, (zframe_t *) zlist_pop (workers));

zmsg_send (&msg, backend);

}

}

}

// When we're done, clean up properly

while (zlist_size (workers)) {

zframe_t *frame = (zframe_t *) zlist_pop (workers);

zframe_destroy (&frame);

}

zlist_destroy (&workers);

zctx_destroy (&ctx);

return 0;

// .until

}

spqueue: C++ 中的 Simple Pirate 队列

//

// Simple Pirate queue

// This is identical to the LRU pattern, with no reliability mechanisms

// at all. It depends on the client for recovery. Runs forever.

//

// Andreas Hoelzlwimmer <andreas.hoelzlwimmer@fh-hagenberg.at

#include "zmsg.hpp"

#include <queue>

#define MAX_WORKERS 100

int main (void)

{

s_version_assert (2, 1);

// Prepare our context and sockets

zmq::context_t context(1);

zmq::socket_t frontend (context, ZMQ_ROUTER);

zmq::socket_t backend (context, ZMQ_ROUTER);

frontend.bind("tcp://*:5555"); // For clients

backend.bind("tcp://*:5556"); // For workers

// Queue of available workers

std::queue<std::string> worker_queue;

while (1) {

zmq::pollitem_t items [] = {

{ backend, 0, ZMQ_POLLIN, 0 },

{ frontend, 0, ZMQ_POLLIN, 0 }

};

// Poll frontend only if we have available workers

if (worker_queue.size())

zmq::poll (items, 2, -1);

else

zmq::poll (items, 1, -1);

// Handle worker activity on backend

if (items [0].revents & ZMQ_POLLIN) {

zmsg zm(backend);

//zmsg_t *zmsg = zmsg_recv (backend);

// Use worker address for LRU routing

assert (worker_queue.size() < MAX_WORKERS);

worker_queue.push(zm.unwrap());

// Return reply to client if it's not a READY

if (strcmp (zm.address(), "READY") == 0)

zm.clear();

else

zm.send (frontend);

}

if (items [1].revents & ZMQ_POLLIN) {

// Now get next client request, route to next worker

zmsg zm(frontend);

// REQ socket in worker needs an envelope delimiter

zm.wrap(worker_queue.front().c_str(), "");

zm.send(backend);

// Dequeue and drop the next worker address

worker_queue.pop();

}

}

// We never exit the main loop

return 0;

}

spqueue: C# 中的 Simple Pirate 队列

spqueue: CL 中的 Simple Pirate 队列

spqueue: Delphi 中的 Simple Pirate 队列

program spqueue;

//

// Simple Pirate broker

// This is identical to load-balancing pattern, with no reliability

// mechanisms. It depends on the client for recovery. Runs forever.

// @author Varga Balazs <bb.varga@gmail.com>

//

{$APPTYPE CONSOLE}

uses

SysUtils

, zmqapi

;

const

WORKER_READY = '\001'; // Signals worker is ready

var

ctx: TZMQContext;

frontend,

backend: TZMQSocket;

workers: TZMQMsg;

poller: TZMQPoller;

pc: Integer;

msg: TZMQMsg;

identity,

frame: TZMQFrame;

begin

ctx := TZMQContext.create;

frontend := ctx.Socket( stRouter );

backend := ctx.Socket( stRouter );

frontend.bind( 'tcp://*:5555' ); // For clients

backend.bind( 'tcp://*:5556' ); // For workers

// Queue of available workers

workers := TZMQMsg.create;

poller := TZMQPoller.Create( true );

poller.Register( backend, [pePollIn] );

poller.Register( frontend, [pePollIn] );

// The body of this example is exactly the same as lbbroker2.

while not ctx.Terminated do

try

// Poll frontend only if we have available workers

if workers.size > 0 then

pc := 2

else

pc := 1;

poller.poll( 1000, pc );

// Handle worker activity on backend

if pePollIn in poller.PollItem[0].revents then

begin

// Use worker identity for load-balancing

backend.recv( msg );

identity := msg.unwrap;

workers.add( identity );

// Forward message to client if it's not a READY

frame := msg.first;

if frame.asUtf8String = WORKER_READY then

begin

msg.Free;

msg := nil;

end else

frontend.send( msg );

end;

if pePollIn in poller.PollItem[1].revents then

begin

// Get client request, route to first available worker

frontend.recv( msg );

msg.wrap( workers.pop );

backend.send( msg );

end;

except

end;

workers.Free;

ctx.Free;

end.

spqueue: Erlang 中的 Simple Pirate 队列

spqueue: Elixir 中的 Simple Pirate 队列

spqueue: F# 中的 Simple Pirate 队列

spqueue: Felix 中的 Simple Pirate 队列

spqueue: Go 中的 Simple Pirate 队列

// Simple Pirate broker

// This is identical to load-balancing pattern, with no reliability

// mechanisms. It depends on the client for recovery. Runs forever.

//

// Author: iano <scaly.iano@gmail.com>

// Based on C & Python example

package main

import (

zmq "github.com/alecthomas/gozmq"

)

const LRU_READY = "\001"

func main() {

context, _ := zmq.NewContext()

defer context.Close()

frontend, _ := context.NewSocket(zmq.ROUTER)

defer frontend.Close()

frontend.Bind("tcp://*:5555") // For clients

backend, _ := context.NewSocket(zmq.ROUTER)

defer backend.Close()

backend.Bind("tcp://*:5556") // For workers

// Queue of available workers

workers := make([][]byte, 0, 0)

for {

items := zmq.PollItems{

zmq.PollItem{Socket: backend, Events: zmq.POLLIN},

zmq.PollItem{Socket: frontend, Events: zmq.POLLIN},

}

// Poll frontend only if we have available workers

if len(workers) > 0 {

zmq.Poll(items, -1)

} else {

zmq.Poll(items[:1], -1)

}

// Handle worker activity on backend

if items[0].REvents&zmq.POLLIN != 0 {

// Use worker identity for load-balancing

msg, err := backend.RecvMultipart(0)

if err != nil {

panic(err) // Interrupted

}

address := msg[0]

workers = append(workers, address)

// Forward message to client if it's not a READY

if reply := msg[2:]; string(reply[0]) != LRU_READY {

frontend.SendMultipart(reply, 0)

}

}

if items[1].REvents&zmq.POLLIN != 0 {

// Get client request, route to first available worker

msg, err := frontend.RecvMultipart(0)

if err != nil {

panic(err) // Interrupted

}

last := workers[len(workers)-1]

workers = workers[:len(workers)-1]

request := append([][]byte{last, nil}, msg...)

backend.SendMultipart(request, 0)

}

}

}

spqueue: Haskell 中的 Simple Pirate 队列

{--

Simple Pirate queue in Haskell

--}

module Main where

import System.ZMQ4.Monadic

import Control.Concurrent (threadDelay)

import Control.Applicative ((<$>))

import Control.Monad (when)

import Data.ByteString.Char8 (pack, unpack, empty)

import Data.List (intercalate)

type SockID = String

workerReady = "\001"

main :: IO ()

main =

runZMQ $ do

frontend <- socket Router

bind frontend "tcp://*:5555"

backend <- socket Router

bind backend "tcp://*:5556"

pollPeers frontend backend []

pollPeers :: Socket z Router -> Socket z Router -> [SockID] -> ZMQ z ()

pollPeers frontend backend workers = do

let toPoll = getPollList workers

evts <- poll 0 toPoll

workers' <- getBackend backend frontend evts workers

workers'' <- getFrontend frontend backend evts workers'

pollPeers frontend backend workers''

where getPollList [] = [Sock backend [In] Nothing]

getPollList _ = [Sock backend [In] Nothing, Sock frontend [In] Nothing]

getBackend :: Socket z Router -> Socket z Router ->

[[Event]] -> [SockID] -> ZMQ z ([SockID])

getBackend backend frontend evts workers =

if (In `elem` (evts !! 0))

then do

wkrID <- receive backend

id <- (receive backend >> receive backend)

msg <- (receive backend >> receive backend)

when ((unpack msg) /= workerReady) $ do

liftIO $ putStrLn $ "I: sending backend - " ++ (unpack msg)

send frontend [SendMore] id

send frontend [SendMore] empty

send frontend [] msg

return $ (unpack wkrID):workers

else return workers

getFrontend :: Socket z Router -> Socket z Router ->

[[Event]] -> [SockID] -> ZMQ z [SockID]

getFrontend frontend backend evts workers =

if (length evts > 1 && In `elem` (evts !! 1))

then do

id <- receive frontend

msg <- (receive frontend >> receive frontend)

liftIO $ putStrLn $ "I: msg on frontend - " ++ (unpack msg)

let wkrID = head workers

send backend [SendMore] (pack wkrID)

send backend [SendMore] empty

send backend [SendMore] id

send backend [SendMore] empty

send backend [] msg

return $ tail workers

else return workers

spqueue: Haxe 中的 Simple Pirate 队列

package ;

import haxe.Stack;

import neko.Lib;

import org.zeromq.ZFrame;

import org.zeromq.ZContext;

import org.zeromq.ZMQSocket;

import org.zeromq.ZMQPoller;

import org.zeromq.ZMQ;

import org.zeromq.ZMsg;

import org.zeromq.ZMQException;

/**

* Simple Pirate queue

* This is identical to the LRU pattern, with no reliability mechanisms

* at all. It depends on the client for recovery. Runs forever.

*

* @see https://zguide.zeromq.cn/page:all#Basic-Reliable-Queuing-Simple-Pirate-Pattern

*/

class SPQueue

{

// Signals workers are ready

private static inline var LRU_READY:String = String.fromCharCode(1);

public static function main() {

Lib.println("** SPQueue (see: https://zguide.zeromq.cn/page:all#Basic-Reliable-Queuing-Simple-Pirate-Pattern)");

// Prepare our context and sockets

var context:ZContext = new ZContext();

var frontend:ZMQSocket = context.createSocket(ZMQ_ROUTER);

var backend:ZMQSocket = context.createSocket(ZMQ_ROUTER);

frontend.bind("tcp://*:5555"); // For clients

backend.bind("tcp://*:5556"); // For workers

// Queue of available workers

var workerQueue:List<ZFrame> = new List<ZFrame>();

var poller:ZMQPoller = new ZMQPoller();

poller.registerSocket(backend, ZMQ.ZMQ_POLLIN());

while (true) {

poller.unregisterSocket(frontend);

if (workerQueue.length > 0) {

// Only poll frontend if there is at least 1 worker ready to do work

poller.registerSocket(frontend, ZMQ.ZMQ_POLLIN());

}

try {

poller.poll( -1 );

} catch (e:ZMQException) {

if (ZMQ.isInterrupted())

break;

trace("ZMQException #:" + e.errNo + ", str:" + e.str());

trace (Stack.toString(Stack.exceptionStack()));

}

if (poller.pollin(1)) {

// Use worker address for LRU routing

var msg = ZMsg.recvMsg(backend);

if (msg == null)

break; // Interrupted

var address = msg.unwrap();

workerQueue.add(address);

// Forward message to client if it's not a READY

var frame = msg.first();

if (frame.streq(LRU_READY))

msg.destroy();

else

msg.send(frontend);

}

if (poller.pollin(2)) {

// Get client request, route to first available worker

var msg = ZMsg.recvMsg(frontend);

if (msg != null) {

msg.wrap(workerQueue.pop());

msg.send(backend);

}

}

}

// When we're done, clean up properly

for (f in workerQueue) {

f.destroy();

}

context.destroy();

}

}spqueue: Java 中的 Simple Pirate 队列

package guide;

import java.util.ArrayList;

import org.zeromq.*;

import org.zeromq.ZMQ.Poller;

import org.zeromq.ZMQ.Socket;

//

// Simple Pirate queue

// This is identical to load-balancing pattern, with no reliability mechanisms

// at all. It depends on the client for recovery. Runs forever.

//

public class spqueue

{

private final static String WORKER_READY = "\001"; // Signals worker is ready

public static void main(String[] args)

{

try (ZContext ctx = new ZContext()) {

Socket frontend = ctx.createSocket(SocketType.ROUTER);

Socket backend = ctx.createSocket(SocketType.ROUTER);

frontend.bind("tcp://*:5555"); // For clients

backend.bind("tcp://*:5556"); // For workers

// Queue of available workers

ArrayList<ZFrame> workers = new ArrayList<ZFrame>();

Poller poller = ctx.createPoller(2);

poller.register(backend, Poller.POLLIN);

poller.register(frontend, Poller.POLLIN);

// The body of this example is exactly the same as lruqueue2.

while (true) {

boolean workersAvailable = workers.size() > 0;

int rc = poller.poll(-1);

// Poll frontend only if we have available workers

if (rc == -1)

break; // Interrupted

// Handle worker activity on backend

if (poller.pollin(0)) {

// Use worker address for LRU routing

ZMsg msg = ZMsg.recvMsg(backend);

if (msg == null)

break; // Interrupted

ZFrame address = msg.unwrap();

workers.add(address);

// Forward message to client if it's not a READY

ZFrame frame = msg.getFirst();

if (new String(frame.getData(), ZMQ.CHARSET).equals(WORKER_READY))

msg.destroy();

else msg.send(frontend);

}

if (workersAvailable && poller.pollin(1)) {

// Get client request, route to first available worker

ZMsg msg = ZMsg.recvMsg(frontend);

if (msg != null) {

msg.wrap(workers.remove(0));

msg.send(backend);

}

}

}

// When we're done, clean up properly

while (workers.size() > 0) {

ZFrame frame = workers.remove(0);

frame.destroy();

}

workers.clear();

}

}

}

spqueue: Julia 中的 Simple Pirate 队列

spqueue: Lua 中的 Simple Pirate 队列

--

-- Simple Pirate queue

-- This is identical to the LRU pattern, with no reliability mechanisms

-- at all. It depends on the client for recovery. Runs forever.

--

-- Author: Robert G. Jakabosky <bobby@sharedrealm.com>

--

require"zmq"

require"zmq.poller"

require"zhelpers"

require"zmsg"

local tremove = table.remove

local MAX_WORKERS = 100

s_version_assert (2, 1)

-- Prepare our context and sockets

local context = zmq.init(1)

local frontend = context:socket(zmq.ROUTER)

local backend = context:socket(zmq.ROUTER)

frontend:bind("tcp://*:5555"); -- For clients

backend:bind("tcp://*:5556"); -- For workers

-- Queue of available workers

local worker_queue = {}

local is_accepting = false

local poller = zmq.poller(2)

local function frontend_cb()

-- Now get next client request, route to next worker

local msg = zmsg.recv (frontend)

-- Dequeue a worker from the queue.

local worker = tremove(worker_queue, 1)

msg:wrap(worker, "")

msg:send(backend)

if (#worker_queue == 0) then

-- stop accepting work from clients, when no workers are available.

poller:remove(frontend)

is_accepting = false

end

end

-- Handle worker activity on backend

poller:add(backend, zmq.POLLIN, function()

local msg = zmsg.recv(backend)

-- Use worker address for LRU routing

worker_queue[#worker_queue + 1] = msg:unwrap()

-- start accepting client requests, if we are not already doing so.

if not is_accepting then

is_accepting = true

poller:add(frontend, zmq.POLLIN, frontend_cb)

end

-- Forward message to client if it's not a READY

if (msg:address() ~= "READY") then

msg:send(frontend)

end

end)

-- start poller's event loop

poller:start()

-- We never exit the main loop

spqueue: Node.js 中的 Simple Pirate 队列

spqueue: Objective-C 中的 Simple Pirate 队列

spqueue: ooc 中的 Simple Pirate 队列

spqueue: Perl 中的 Simple Pirate 队列

spqueue: PHP 中的 Simple Pirate 队列

<?php

/*

* Simple Pirate queue

* This is identical to the LRU pattern, with no reliability mechanisms

* at all. It depends on the client for recovery. Runs forever.

*

* @author Ian Barber <ian(dot)barber(at)gmail(dot)com>

*/

include 'zmsg.php';

define("MAX_WORKERS", 100);

// Prepare our context and sockets

$context = new ZMQContext();

$frontend = $context->getSocket(ZMQ::SOCKET_ROUTER);

$backend = $context->getSocket(ZMQ::SOCKET_ROUTER);

$frontend->bind("tcp://*:5555"); // For clients

$backend->bind("tcp://*:5556"); // For workers

// Queue of available workers

$available_workers = 0;

$worker_queue = array();

$read = $write = array();

while (true) {

$poll = new ZMQPoll();

$poll->add($backend, ZMQ::POLL_IN);

// Poll frontend only if we have available workers

if ($available_workers) {

$poll->add($frontend, ZMQ::POLL_IN);

}

$events = $poll->poll($read, $write);

foreach ($read as $socket) {

$zmsg = new Zmsg($socket);

$zmsg->recv();

// Handle worker activity on backend

if ($socket === $backend) {

// Use worker address for LRU routing

assert($available_workers < MAX_WORKERS);

array_push($worker_queue, $zmsg->unwrap());

$available_workers++;

// Return reply to client if it's not a READY

if ($zmsg->address() != "READY") {

$zmsg->set_socket($frontend)->send();

}

} elseif ($socket === $frontend) {

// Now get next client request, route to next worker

// REQ socket in worker needs an envelope delimiter

// Dequeue and drop the next worker address

$zmsg->wrap(array_shift($worker_queue), "");

$zmsg->set_socket($backend)->send();

$available_workers--;

}

}

// We never exit the main loop

}

spqueue: Python 中的 Simple Pirate 队列

#

# Simple Pirate queue

# This is identical to the LRU pattern, with no reliability mechanisms

# at all. It depends on the client for recovery. Runs forever.

#

# Author: Daniel Lundin <dln(at)eintr(dot)org>

#

import zmq

LRU_READY = "\x01"

context = zmq.Context(1)

frontend = context.socket(zmq.ROUTER) # ROUTER

backend = context.socket(zmq.ROUTER) # ROUTER

frontend.bind("tcp://*:5555") # For clients

backend.bind("tcp://*:5556") # For workers

poll_workers = zmq.Poller()

poll_workers.register(backend, zmq.POLLIN)

poll_both = zmq.Poller()

poll_both.register(frontend, zmq.POLLIN)

poll_both.register(backend, zmq.POLLIN)

workers = []

while True:

if workers:

socks = dict(poll_both.poll())

else:

socks = dict(poll_workers.poll())

# Handle worker activity on backend

if socks.get(backend) == zmq.POLLIN:

# Use worker address for LRU routing

msg = backend.recv_multipart()

if not msg:

break

address = msg[0]

workers.append(address)

# Everything after the second (delimiter) frame is reply

reply = msg[2:]

# Forward message to client if it's not a READY

if reply[0] != LRU_READY:

frontend.send_multipart(reply)

if socks.get(frontend) == zmq.POLLIN:

# Get client request, route to first available worker

msg = frontend.recv_multipart()

request = [workers.pop(0), ''.encode()] + msg

backend.send_multipart(request)

spqueue: Q 中的 Simple Pirate 队列

spqueue: Racket 中的 Simple Pirate 队列

spqueue: Ruby 中的 Simple Pirate 队列

spqueue: Rust 中的 Simple Pirate 队列

spqueue: Scala 中的 Simple Pirate 队列

spqueue: Tcl 中的 Simple Pirate 队列

#

# Simple Pirate queue

# This is identical to the LRU pattern, with no reliability mechanisms

# at all. It depends on the client for recovery. Runs forever.

#

package require zmq

set LRU_READY "READY" ;# Signals worker is ready

# Prepare our context and sockets

zmq context context

zmq socket frontend context ROUTER

zmq socket backend context ROUTER

frontend bind "tcp://*:5555" ;# For clients

backend bind "tcp://*:5556" ;# For workers

# Queue of available workers

set workers {}

while {1} {

if {[llength $workers]} {

set poll_set [list [list backend [list POLLIN]] [list frontend [list POLLIN]]]

} else {

set poll_set [list [list backend [list POLLIN]]]

}

set rpoll_set [zmq poll $poll_set -1]

foreach rpoll $rpoll_set {

switch [lindex $rpoll 0] {

backend {

# Use worker address for LRU routing

set msg [zmsg recv backend]

set address [zmsg unwrap msg]

lappend workers $address

# Forward message to client if it's not a READY

if {[lindex $msg 0] ne $LRU_READY} {

zmsg send frontend $msg

}

}

frontend {

# Get client request, route to first available worker

set msg [zmsg recv frontend]

set workers [lassign $workers worker]

set msg [zmsg wrap $msg $worker]

zmsg send backend $msg

}

}

}

}

frontend close

backend close

context term

spqueue: OCaml 中的 Simple Pirate 队列

以下是 worker,它使用了 Lazy Pirate 服务器并将其适配到负载均衡模式(使用 REQ 的“ready”信号):

spworker: Ada 中的 Simple Pirate worker

spworker: Basic 中的 Simple Pirate worker

spworker: C 中的 Simple Pirate worker

// Simple Pirate worker

// Connects REQ socket to tcp://*:5556

// Implements worker part of load-balancing

#include "czmq.h"

#define WORKER_READY "\001" // Signals worker is ready

int main (void)

{

zctx_t *ctx = zctx_new ();

void *worker = zsocket_new (ctx, ZMQ_REQ);

// Set random identity to make tracing easier

srandom ((unsigned) time (NULL));

char identity [10];

sprintf (identity, "%04X-%04X", randof (0x10000), randof (0x10000));

zmq_setsockopt (worker, ZMQ_IDENTITY, identity, strlen (identity));

zsocket_connect (worker, "tcp://:5556");

// Tell broker we're ready for work

printf ("I: (%s) worker ready\n", identity);

zframe_t *frame = zframe_new (WORKER_READY, 1);

zframe_send (&frame, worker, 0);

int cycles = 0;

while (true) {