第 5 章 - 高级发布/订阅模式 #

在第 3 章 - 高级请求/回复模式和第 4 章 - 可靠请求/回复模式中,我们探讨了 ZeroMQ 请求/回复模式的高级用法。如果你能消化所有这些内容,恭喜你。在本章中,我们将重点关注发布/订阅,并用更高级的模式来扩展 ZeroMQ 的核心发布/订阅模式,以实现性能、可靠性、状态分发和监控。

我们将涵盖

- 何时使用发布/订阅

- 如何处理过慢的订阅者 (Suicidal Snail 模式)

- 如何设计高速订阅者 (Black Box 模式)

- 如何监控发布/订阅网络 (Espresso 模式)

- 如何构建共享键值存储 (Clone 模式)

- 如何使用 Reactor 简化复杂服务器

- 如何使用 Binary Star 模式为服务器添加故障转移功能

发布/订阅的优缺点 #

ZeroMQ 的底层模式各具特点。发布/订阅解决了 오래된 消息传递问题,即 多播 (multicast) 或 组播 (group messaging)。它具有 ZeroMQ 独特的细致的简洁性和残酷的漠视的结合。理解发布/订阅的权衡、它们如何使我们受益,以及如果需要如何绕过它们,是很有价值的。

首先,PUB 将每条消息发送给“许多中的所有”,而 PUSH 和 DEALER 将消息轮流发送给“许多中的一个”。你不能简单地用 PUB 替换 PUSH 或反过来,然后指望一切正常。这一点值得重申,因为人们似乎经常建议这样做。

更深层地说,发布/订阅旨在实现可伸缩性。这意味着大量数据快速发送给许多接收者。如果你需要每秒向数千个点发送数百万条消息,你将比只需每秒向少数接收者发送几条消息更能体会到发布/订阅的价值。

为了获得可伸缩性,发布/订阅使用了与 push-pull 相同的技巧,即去除回话。这意味着接收者不会回复发送者。有一些例外,例如 SUB 套接字会向 PUB 套接字发送订阅信息,但它是匿名的且不频繁的。

去除回话对于真正的可伸缩性至关重要。在发布/订阅中,这是该模式如何干净地映射到 PGM 多播协议的方式,该协议由网络交换机处理。换句话说,订阅者根本不连接到发布者,他们连接到交换机上的一个多播组,发布者将消息发送到该组。

当我们去除回话时,整体消息流会变得 훨씬 简单,这使得我们可以构建更简单的 API、更简单的协议,并且通常能够触达更多人。但我们也消除了协调发送者和接收者的任何可能性。这意味着

-

发布者无法知道订阅者何时成功连接,无论是在初始连接还是在网络故障后重新连接时。

-

订阅者无法告知发布者任何信息来让发布者控制他们发送消息的速度。发布者只有一个设置,即全速,订阅者必须跟上,否则就会丢失消息。

-

发布者无法知道订阅者何时因进程崩溃、网络中断等原因而消失。

缺点是,如果我们想实现可靠的多播,我们就确实需要所有这些功能。当订阅者正在连接时、网络故障发生时,或者仅仅是订阅者或网络无法跟上发布者时,ZeroMQ 发布/订阅模式会任意丢失消息。

好处是,在许多用例中,几乎 可靠的多播已经足够好。当我们需要这种回话时,我们可以切换到使用 ROUTER-DEALER (我在大多数正常流量情况下倾向于这样做),或者我们可以添加一个独立的通道用于同步 (在本章后面我们将看到一个例子)。

发布/订阅就像无线电广播;在你加入之前,你会错过所有内容,然后你收到多少信息取决于你的接收质量。令人惊讶的是,这种模式很有用并且广泛应用,因为它与现实世界的信息分发完美契合。想想 Facebook 和 Twitter、BBC 世界广播以及体育比赛结果。

就像我们在请求/回复模式中所做的那样,让我们根据可能出现的问题来定义可靠性。以下是发布/订阅的经典故障情况:

- 订阅者加入较晚,因此他们会错过服务器已经发送的消息。

- 订阅者获取消息速度过慢,导致队列积压并溢出。

- 订阅者可能断开连接并在断开期间丢失消息。

- 订阅者可能崩溃并重启,丢失他们已经收到的任何数据。

- 网络可能过载并丢弃数据 (特别是对于 PGM)。

- 网络可能变得过慢,导致发布者端的队列溢出,发布者崩溃。

可能出现的问题还有很多,但这些是我们在现实系统中看到的典型故障。自 v3.x 版本起,ZeroMQ 对其内部缓冲区(即所谓的高水位标记或 HWM)强制设置了默认限制,因此发布者崩溃的情况较少见,除非您故意将 HWM 设置为无限大。

所有这些故障情况都有解决方案,尽管并非总是简单的。可靠性需要我们大多数人大多数时候不需要的复杂性,这就是 ZeroMQ 不试图开箱即用提供它的原因(即使存在一种全局的可靠性设计,而实际上并不存在)。

发布/订阅跟踪 (Espresso 模式) #

让我们从查看一种跟踪发布/订阅网络的方法开始本章。在第 2 章 - 套接字与模式中,我们看到了一个使用这些套接字进行传输桥接的简单代理。该 zmq_proxy()方法有三个参数:它桥接在一起的 frontend 和 backend 套接字,以及一个 capture 套接字,它将所有消息发送到该套接字。

代码看起来很简单

espresso: Ada 中的 Espresso 模式

espresso: Basic 中的 Espresso 模式

espresso: C 中的 Espresso 模式

// Espresso Pattern

// This shows how to capture data using a pub-sub proxy

#include "czmq.h"

// The subscriber thread requests messages starting with

// A and B, then reads and counts incoming messages.

static void

subscriber_thread (void *args, zctx_t *ctx, void *pipe)

{

// Subscribe to "A" and "B"

void *subscriber = zsocket_new (ctx, ZMQ_SUB);

zsocket_connect (subscriber, "tcp://:6001");

zsocket_set_subscribe (subscriber, "A");

zsocket_set_subscribe (subscriber, "B");

int count = 0;

while (count < 5) {

char *string = zstr_recv (subscriber);

if (!string)

break; // Interrupted

free (string);

count++;

}

zsocket_destroy (ctx, subscriber);

}

// .split publisher thread

// The publisher sends random messages starting with A-J:

static void

publisher_thread (void *args, zctx_t *ctx, void *pipe)

{

void *publisher = zsocket_new (ctx, ZMQ_PUB);

zsocket_bind (publisher, "tcp://*:6000");

while (!zctx_interrupted) {

char string [10];

sprintf (string, "%c-%05d", randof (10) + 'A', randof (100000));

if (zstr_send (publisher, string) == -1)

break; // Interrupted

zclock_sleep (100); // Wait for 1/10th second

}

}

// .split listener thread

// The listener receives all messages flowing through the proxy, on its

// pipe. In CZMQ, the pipe is a pair of ZMQ_PAIR sockets that connect

// attached child threads. In other languages your mileage may vary:

static void

listener_thread (void *args, zctx_t *ctx, void *pipe)

{

// Print everything that arrives on pipe

while (true) {

zframe_t *frame = zframe_recv (pipe);

if (!frame)

break; // Interrupted

zframe_print (frame, NULL);

zframe_destroy (&frame);

}

}

// .split main thread

// The main task starts the subscriber and publisher, and then sets

// itself up as a listening proxy. The listener runs as a child thread:

int main (void)

{

// Start child threads

zctx_t *ctx = zctx_new ();

zthread_fork (ctx, publisher_thread, NULL);

zthread_fork (ctx, subscriber_thread, NULL);

void *subscriber = zsocket_new (ctx, ZMQ_XSUB);

zsocket_connect (subscriber, "tcp://:6000");

void *publisher = zsocket_new (ctx, ZMQ_XPUB);

zsocket_bind (publisher, "tcp://*:6001");

void *listener = zthread_fork (ctx, listener_thread, NULL);

zmq_proxy (subscriber, publisher, listener);

puts (" interrupted");

// Tell attached threads to exit

zctx_destroy (&ctx);

return 0;

}

espresso: C++ 中的 Espresso 模式

#include <iostream>

#include <thread>

#include <zmq.hpp>

#include <string>

#include <chrono>

#include <unistd.h>

// Subscriber thread function

void subscriber_thread(zmq::context_t& ctx) {

zmq::socket_t subscriber(ctx, ZMQ_SUB);

subscriber.connect("tcp://:6001");

subscriber.set(zmq::sockopt::subscribe, "A");

subscriber.set(zmq::sockopt::subscribe, "B");

int count = 0;

while (count < 5) {

zmq::message_t message;

if (subscriber.recv(message)) {

std::string msg = std::string((char*)(message.data()), message.size());

std::cout << "Received: " << msg << std::endl;

count++;

}

std::this_thread::sleep_for(std::chrono::milliseconds(100));

}

}

// Publisher thread function

void publisher_thread(zmq::context_t& ctx) {

zmq::socket_t publisher(ctx, ZMQ_PUB);

publisher.bind("tcp://*:6000");

while (true) {

char string[10];

sprintf(string, "%c-%05d", rand() % 10 + 'A', rand() % 100000);

zmq::message_t message(string, strlen(string));

publisher.send(message, zmq::send_flags::none);

std::this_thread::sleep_for(std::chrono::milliseconds(100));

}

}

// Listener thread function

void listener_thread(zmq::context_t& ctx) {

zmq::socket_t listener(ctx, ZMQ_PAIR);

listener.connect("inproc://listener");

while (true) {

zmq::message_t message;

if (listener.recv(message)) {

std::string msg = std::string((char*)(message.data()), message.size());

std::cout << "Listener Received: ";

if (msg[0] == 0 || msg[0] == 1){

std::cout << int(msg[0]);

std::cout << msg[1]<< std::endl;

} else {

std::cout << msg << std::endl;

}

}

}

}

int main() {

zmq::context_t context(1);

// Main thread acts as the listener proxy

zmq::socket_t proxy(context, ZMQ_PAIR);

proxy.bind("inproc://listener");

zmq::socket_t xsub(context, ZMQ_XSUB);

zmq::socket_t xpub(context, ZMQ_XPUB);

xpub.bind("tcp://*:6001");

sleep(1);

// Start publisher and subscriber threads

std::thread pub_thread(publisher_thread, std::ref(context));

std::thread sub_thread(subscriber_thread, std::ref(context));

// Set up listener thread

std::thread lis_thread(listener_thread, std::ref(context));

sleep(1);

xsub.connect("tcp://:6000");

// Proxy messages between SUB and PUB sockets

zmq_proxy(xsub, xpub, proxy);

// Wait for threads to finish

pub_thread.join();

sub_thread.join();

lis_thread.join();

return 0;

}

espresso: C# 中的 Espresso 模式

espresso: CL 中的 Espresso 模式

espresso: Delphi 中的 Espresso 模式

espresso: Erlang 中的 Espresso 模式

espresso: Elixir 中的 Espresso 模式

espresso: F# 中的 Espresso 模式

espresso: Felix 中的 Espresso 模式

espresso: Go 中的 Espresso 模式

espresso: Haskell 中的 Espresso 模式

espresso: Haxe 中的 Espresso 模式

espresso: Java 中的 Espresso 模式

package guide;

import java.util.Random;

import org.zeromq.*;

import org.zeromq.ZMQ.Socket;

import org.zeromq.ZThread.IAttachedRunnable;

// Espresso Pattern

// This shows how to capture data using a pub-sub proxy

public class espresso

{

// The subscriber thread requests messages starting with

// A and B, then reads and counts incoming messages.

private static class Subscriber implements IAttachedRunnable

{

@Override

public void run(Object[] args, ZContext ctx, Socket pipe)

{

// Subscribe to "A" and "B"

Socket subscriber = ctx.createSocket(SocketType.SUB);

subscriber.connect("tcp://:6001");

subscriber.subscribe("A".getBytes(ZMQ.CHARSET));

subscriber.subscribe("B".getBytes(ZMQ.CHARSET));

int count = 0;

while (count < 5) {

String string = subscriber.recvStr();

if (string == null)

break; // Interrupted

count++;

}

ctx.destroySocket(subscriber);

}

}

// .split publisher thread

// The publisher sends random messages starting with A-J:

private static class Publisher implements IAttachedRunnable

{

@Override

public void run(Object[] args, ZContext ctx, Socket pipe)

{

Socket publisher = ctx.createSocket(SocketType.PUB);

publisher.bind("tcp://*:6000");

Random rand = new Random(System.currentTimeMillis());

while (!Thread.currentThread().isInterrupted()) {

String string = String.format("%c-%05d", 'A' + rand.nextInt(10), rand.nextInt(100000));

if (!publisher.send(string))

break; // Interrupted

try {

Thread.sleep(100); // Wait for 1/10th second

}

catch (InterruptedException e) {

}

}

ctx.destroySocket(publisher);

}

}

// .split listener thread

// The listener receives all messages flowing through the proxy, on its

// pipe. In CZMQ, the pipe is a pair of ZMQ_PAIR sockets that connect

// attached child threads. In other languages your mileage may vary:

private static class Listener implements IAttachedRunnable

{

@Override

public void run(Object[] args, ZContext ctx, Socket pipe)

{

// Print everything that arrives on pipe

while (true) {

ZFrame frame = ZFrame.recvFrame(pipe);

if (frame == null)

break; // Interrupted

frame.print(null);

frame.destroy();

}

}

}

// .split main thread

// The main task starts the subscriber and publisher, and then sets

// itself up as a listening proxy. The listener runs as a child thread:

public static void main(String[] argv)

{

try (ZContext ctx = new ZContext()) {

// Start child threads

ZThread.fork(ctx, new Publisher());

ZThread.fork(ctx, new Subscriber());

Socket subscriber = ctx.createSocket(SocketType.XSUB);

subscriber.connect("tcp://:6000");

Socket publisher = ctx.createSocket(SocketType.XPUB);

publisher.bind("tcp://*:6001");

Socket listener = ZThread.fork(ctx, new Listener());

ZMQ.proxy(subscriber, publisher, listener);

System.out.println(" interrupted");

// NB: child threads exit here when the context is closed

}

}

}

espresso: Julia 中的 Espresso 模式

espresso: Lua 中的 Espresso 模式

espresso: Node.js 中的 Espresso 模式

/**

* Pub-Sub Tracing (Espresso Pattern)

* explained in

* https://zguide.zeromq.cn/docs/chapter5

*/

"use strict";

Object.defineProperty(exports, "__esModule", { value: true });

exports.runListenerThread = exports.runPubThread = exports.runSubThread = void 0;

const zmq = require("zeromq"),

publisher = new zmq.Publisher,

pubKeypair = zmq.curveKeyPair(),

publicKey = pubKeypair.publicKey;

var interrupted = false;

function getRandomInt(max) {

return Math.floor(Math.random() * Math.floor(max));

}

async function runSubThread() {

const subscriber = new zmq.Subscriber;

const subKeypair = zmq.curveKeyPair();

// Setup encryption.

for (const s of [subscriber]) {

subscriber.curveServerKey = publicKey; // '03P+E+f4AU6bSTcuzvgX&oGnt&Or<rN)FYIPyjQW'

subscriber.curveSecretKey = subKeypair.secretKey;

subscriber.curvePublicKey = subKeypair.publicKey;

}

await subscriber.connect("tcp://127.0.0.1:6000");

console.log('subscriber connected! subscribing A,B,C and D..');

//subscribe all at once - simultaneous subscriptions needed

Promise.all([

subscriber.subscribe("A"),

subscriber.subscribe("B"),

subscriber.subscribe("C"),

subscriber.subscribe("D"),

subscriber.subscribe("E"),

]);

for await (const [msg] of subscriber) {

console.log(`Received at subscriber: ${msg}`);

if (interrupted) {

await subscriber.disconnect("tcp://127.0.0.1:6000");

await subscriber.close();

break;

}

}

}

//Run the Publisher Thread!

async function runPubThread() {

// Setup encryption.

for (const s of [publisher]) {

s.curveServer = true;

s.curvePublicKey = publicKey;

s.curveSecretKey = pubKeypair.secretKey;

}

await publisher.bind("tcp://127.0.0.1:6000");

console.log(`Started publisher at tcp://127.0.0.1:6000 ..`);

var subs = 'ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz0123456789';

while (!interrupted) { //until ctl+c

var str = `${subs.charAt(getRandomInt(10))}-${getRandomInt(100000).toString().padStart(6, '0')}`; //"%c-%05d";

console.log(`Publishing ${str}`);

if (-1 == await publisher.send(str))

break; //Interrupted

await new Promise(resolve => setTimeout(resolve, 1000));

}

//if(! publisher.closed())

await publisher.close();

}

//Run the Pipe

async function runListenerThread() {

//a pipe using 'Pair' which receives and transmits data

const pipe = new zmq.Pair;

await pipe.connect("tcp://127.0.0.1:6000");

await pipe.bind("tcp://127.0.0.1:6001");

console.log('starting pipe (using Pair)..');

while (!interrupted) {

await pipe.send(await pipe.receive());

}

setTimeout(() => {

console.log('Terminating pipe..');

pipe.close();

}, 1000);

//a pipe using 'Proxy' <= not working, but give it a try.

// Still working with Proxy

/*

const pipe = new zmq.Proxy (new zmq.Router, new zmq.Dealer)

await pipe.backEnd.connect("tcp://127.0.0.1:6000")

await pipe.frontEnd.bind("tcp://127.0.0.1:6001")

await pipe.run()

setTimeout(() => {

console.log('Terminating pipe..');

await pipe.terminate()

}, 10000);

*/

}

exports.runSubThread = runSubThread;

exports.runPubThread = runPubThread;

exports.runListenerThread = runListenerThread;

process.on('SIGINT', function () {

interrupted = true;

});

process.setMaxListeners(30);

async function main() {

//execute all at once

Promise.all([

runPubThread(),

runListenerThread(),

runSubThread(),

]);

}

main().catch(err => {

console.error(err);

process.exit(1);

});

espresso: Objective-C 中的 Espresso 模式

espresso: ooc 中的 Espresso 模式

espresso: Perl 中的 Espresso 模式

espresso: PHP 中的 Espresso 模式

espresso: Python 中的 Espresso 模式

# Espresso Pattern

# This shows how to capture data using a pub-sub proxy

#

import time

from random import randint

from string import ascii_uppercase as uppercase

from threading import Thread

import zmq

from zmq.devices import monitored_queue

from zhelpers import zpipe

# The subscriber thread requests messages starting with

# A and B, then reads and counts incoming messages.

def subscriber_thread():

ctx = zmq.Context.instance()

# Subscribe to "A" and "B"

subscriber = ctx.socket(zmq.SUB)

subscriber.connect("tcp://:6001")

subscriber.setsockopt(zmq.SUBSCRIBE, b"A")

subscriber.setsockopt(zmq.SUBSCRIBE, b"B")

count = 0

while count < 5:

try:

msg = subscriber.recv_multipart()

except zmq.ZMQError as e:

if e.errno == zmq.ETERM:

break # Interrupted

else:

raise

count += 1

print ("Subscriber received %d messages" % count)

# publisher thread

# The publisher sends random messages starting with A-J:

def publisher_thread():

ctx = zmq.Context.instance()

publisher = ctx.socket(zmq.PUB)

publisher.bind("tcp://*:6000")

while True:

string = "%s-%05d" % (uppercase[randint(0,10)], randint(0,100000))

try:

publisher.send(string.encode('utf-8'))

except zmq.ZMQError as e:

if e.errno == zmq.ETERM:

break # Interrupted

else:

raise

time.sleep(0.1) # Wait for 1/10th second

# listener thread

# The listener receives all messages flowing through the proxy, on its

# pipe. Here, the pipe is a pair of ZMQ_PAIR sockets that connects

# attached child threads via inproc. In other languages your mileage may vary:

def listener_thread (pipe):

# Print everything that arrives on pipe

while True:

try:

print (pipe.recv_multipart())

except zmq.ZMQError as e:

if e.errno == zmq.ETERM:

break # Interrupted

# main thread

# The main task starts the subscriber and publisher, and then sets

# itself up as a listening proxy. The listener runs as a child thread:

def main ():

# Start child threads

ctx = zmq.Context.instance()

p_thread = Thread(target=publisher_thread)

s_thread = Thread(target=subscriber_thread)

p_thread.start()

s_thread.start()

pipe = zpipe(ctx)

subscriber = ctx.socket(zmq.XSUB)

subscriber.connect("tcp://:6000")

publisher = ctx.socket(zmq.XPUB)

publisher.bind("tcp://*:6001")

l_thread = Thread(target=listener_thread, args=(pipe[1],))

l_thread.start()

try:

monitored_queue(subscriber, publisher, pipe[0], b'pub', b'sub')

except KeyboardInterrupt:

print ("Interrupted")

del subscriber, publisher, pipe

ctx.term()

if __name__ == '__main__':

main()

espresso: Q 中的 Espresso 模式

espresso: Racket 中的 Espresso 模式

espresso: Ruby 中的 Espresso 模式

espresso: Rust 中的 Espresso 模式

espresso: Scala 中的 Espresso 模式

espresso: Tcl 中的 Espresso 模式

espresso: OCaml 中的 Espresso 模式

Espresso 的工作原理是创建一个监听线程,该线程读取一个 PAIR 套接字并打印其接收到的任何内容。该 PAIR 套接字是管道的一端;另一端(另一个 PAIR)是我们传递给 zmq_proxy()的方法。在实际应用中,您会过滤感兴趣的消息以获取您想要跟踪的核心内容(因此得名该模式)。

订阅者线程订阅“A”和“B”,接收五条消息,然后销毁其套接字。当您运行示例时,监听器打印两条订阅消息、五条数据消息、两条取消订阅消息,然后归于平静。

[002] 0141

[002] 0142

[007] B-91164

[007] B-12979

[007] A-52599

[007] A-06417

[007] A-45770

[002] 0041

[002] 0042

这清楚地展示了当没有订阅者订阅时,发布者套接字如何停止发送数据。发布者线程仍在发送消息。套接字只是默默地丢弃它们。

最新值缓存 #

如果您使用过商业发布/订阅系统,您可能习惯于 ZeroMQ 快速活泼的发布/订阅模式中缺少的一些功能。其中之一是最新值缓存 (LVC)。这解决了新订阅者加入网络时如何追赶数据的问题。理论上,发布者会在新订阅者加入并订阅特定主题时收到通知。然后,发布者可以重新广播这些主题的最新消息。

我已经解释了为什么在有新订阅者时发布者不会收到通知,因为在大型发布/订阅系统中,数据量使得这几乎不可能。要构建真正大规模的发布/订阅网络,您需要像 PGM 这样的协议,它利用高端以太网交换机将数据多播给数千个订阅者的能力。尝试通过 TCP 单播从发布者向数千个订阅者中的每一个发送数据根本无法扩展。你会遇到奇怪的峰值、不公平的分发(一些订阅者比其他人先收到消息)、网络拥塞以及普遍的不愉快。

PGM 是一种单向协议:发布者将消息发送到交换机上的一个多播地址,然后交换机将其重新广播给所有感兴趣的订阅者。发布者永远看不到订阅者何时加入或离开:这一切都发生在交换机中,而我们并不真正想开始重新编程交换机。

然而,在只有几十个订阅者和有限主题的低流量网络中,我们可以使用 TCP,这样 XSUB 和 XPUB 套接字确实会像我们在 Espresso 模式中看到的那样相互通信。

我们能否使用 ZeroMQ 构建一个 LVC?答案是肯定的,如果我们构建一个位于发布者和订阅者之间的代理;它类似于 PGM 交换机,但我们可以自己编程。

我将首先创建一个发布者和订阅者来突出最坏的情况。这个发布者是病态的。它一开始就立即向一千个主题中的每一个发送消息,然后每秒向一个随机主题发送一条更新。一个订阅者连接并订阅一个主题。如果没有 LVC,订阅者平均需要等待 500 秒才能获得任何数据。为了增加一些戏剧性,假设有一个名叫 Gregor 的越狱犯威胁说,如果我们不能解决那 8.3 分钟的延迟,他就要扯掉玩具兔子 Roger 的脑袋。

这是发布者代码。请注意,它有命令行选项可以连接到某个地址,但通常会绑定到一个端点。我们稍后会用它连接到我们的最新值缓存。

pathopub: Ada 中的病态发布者

pathopub: Basic 中的病态发布者

pathopub: C 中的病态发布者

// Pathological publisher

// Sends out 1,000 topics and then one random update per second

#include "czmq.h"

int main (int argc, char *argv [])

{

zctx_t *context = zctx_new ();

void *publisher = zsocket_new (context, ZMQ_PUB);

if (argc == 2)

zsocket_bind (publisher, argv [1]);

else

zsocket_bind (publisher, "tcp://*:5556");

// Ensure subscriber connection has time to complete

sleep (1);

// Send out all 1,000 topic messages

int topic_nbr;

for (topic_nbr = 0; topic_nbr < 1000; topic_nbr++) {

zstr_sendfm (publisher, "%03d", topic_nbr);

zstr_send (publisher, "Save Roger");

}

// Send one random update per second

srandom ((unsigned) time (NULL));

while (!zctx_interrupted) {

sleep (1);

zstr_sendfm (publisher, "%03d", randof (1000));

zstr_send (publisher, "Off with his head!");

}

zctx_destroy (&context);

return 0;

}

pathopub: C++ 中的病态发布者

// Pathological publisher

// Sends out 1,000 topics and then one random update per second

#include <thread>

#include <chrono>

#include "zhelpers.hpp"

int main (int argc, char *argv [])

{

zmq::context_t context(1);

zmq::socket_t publisher(context, ZMQ_PUB);

// Initialize random number generator

srandom ((unsigned) time (NULL));

if (argc == 2)

publisher.bind(argv [1]);

else

publisher.bind("tcp://*:5556");

// Ensure subscriber connection has time to complete

std::this_thread::sleep_for(std::chrono::seconds(1));

// Send out all 1,000 topic messages

int topic_nbr;

for (topic_nbr = 0; topic_nbr < 1000; topic_nbr++) {

std::stringstream ss;

ss << std::dec << std::setw(3) << std::setfill('0') << topic_nbr;

s_sendmore (publisher, ss.str());

s_send (publisher, std::string("Save Roger"));

}

// Send one random update per second

while (1) {

std::this_thread::sleep_for(std::chrono::seconds(1));

std::stringstream ss;

ss << std::dec << std::setw(3) << std::setfill('0') << within(1000);

s_sendmore (publisher, ss.str());

s_send (publisher, std::string("Off with his head!"));

}

return 0;

}

pathopub: C# 中的病态发布者

pathopub: CL 中的病态发布者

pathopub: Delphi 中的病态发布者

pathopub: Erlang 中的病态发布者

pathopub: Elixir 中的病态发布者

pathopub: F# 中的病态发布者

pathopub: Felix 中的病态发布者

pathopub: Go 中的病态发布者

pathopub: Haskell 中的病态发布者

pathopub: Haxe 中的病态发布者

pathopub: Java 中的病态发布者

package guide;

import java.util.Random;

import org.zeromq.SocketType;

import org.zeromq.ZContext;

import org.zeromq.ZMQ;

import org.zeromq.ZMQ.Socket;

// Pathological publisher

// Sends out 1,000 topics and then one random update per second

public class pathopub

{

public static void main(String[] args) throws Exception

{

try (ZContext context = new ZContext()) {

Socket publisher = context.createSocket(SocketType.PUB);

if (args.length == 1)

publisher.connect(args[0]);

else publisher.bind("tcp://*:5556");

// Ensure subscriber connection has time to complete

Thread.sleep(1000);

// Send out all 1,000 topic messages

int topicNbr;

for (topicNbr = 0; topicNbr < 1000; topicNbr++) {

publisher.send(String.format("%03d", topicNbr), ZMQ.SNDMORE);

publisher.send("Save Roger");

}

// Send one random update per second

Random rand = new Random(System.currentTimeMillis());

while (!Thread.currentThread().isInterrupted()) {

Thread.sleep(1000);

publisher.send(

String.format("%03d", rand.nextInt(1000)), ZMQ.SNDMORE

);

publisher.send("Off with his head!");

}

}

}

}

pathopub: Julia 中的病态发布者

pathopub: Lua 中的病态发布者

pathopub: Node.js 中的病态发布者

pathopub: Objective-C 中的病态发布者

pathopub: ooc 中的病态发布者

pathopub: Perl 中的病态发布者

pathopub: PHP 中的病态发布者

pathopub: Python 中的病态发布者

#

# Pathological publisher

# Sends out 1,000 topics and then one random update per second

#

import sys

import time

from random import randint

import zmq

def main(url=None):

ctx = zmq.Context.instance()

publisher = ctx.socket(zmq.PUB)

if url:

publisher.bind(url)

else:

publisher.bind("tcp://*:5556")

# Ensure subscriber connection has time to complete

time.sleep(1)

# Send out all 1,000 topic messages

for topic_nbr in range(1000):

publisher.send_multipart([

b"%03d" % topic_nbr,

b"Save Roger",

])

while True:

# Send one random update per second

try:

time.sleep(1)

publisher.send_multipart([

b"%03d" % randint(0,999),

b"Off with his head!",

])

except KeyboardInterrupt:

print "interrupted"

break

if __name__ == '__main__':

main(sys.argv[1] if len(sys.argv) > 1 else None)

pathopub: Q 中的病态发布者

pathopub: Racket 中的病态发布者

pathopub: Ruby 中的病态发布者

#!/usr/bin/env ruby

#

# Pathological publisher

# Sends out 1,000 topics and then one random update per second

#

require 'ffi-rzmq'

context = ZMQ::Context.new

TOPIC_COUNT = 1_000

publisher = context.socket(ZMQ::PUB)

if ARGV[0]

publisher.bind(ARGV[0])

else

publisher.bind("tcp://*:5556")

end

# Ensure subscriber connection has time to complete

sleep 1

TOPIC_COUNT.times do |n|

topic = "%03d" % [n]

publisher.send_strings([topic, "Save Roger"])

end

loop do

sleep 1

topic = "%03d" % [rand(1000)]

publisher.send_strings([topic, "Off with his head!"])

end

pathopub: Rust 中的病态发布者

pathopub: Scala 中的病态发布者

pathopub: Tcl 中的病态发布者

pathopub: OCaml 中的病态发布者

这是订阅者代码

pathosub: Ada 中的病态订阅者

pathosub: Basic 中的病态订阅者

pathosub: C 中的病态订阅者

// Pathological subscriber

// Subscribes to one random topic and prints received messages

#include "czmq.h"

int main (int argc, char *argv [])

{

zctx_t *context = zctx_new ();

void *subscriber = zsocket_new (context, ZMQ_SUB);

if (argc == 2)

zsocket_connect (subscriber, argv [1]);

else

zsocket_connect (subscriber, "tcp://:5556");

srandom ((unsigned) time (NULL));

char subscription [5];

sprintf (subscription, "%03d", randof (1000));

zsocket_set_subscribe (subscriber, subscription);

while (true) {

char *topic = zstr_recv (subscriber);

if (!topic)

break;

char *data = zstr_recv (subscriber);

assert (streq (topic, subscription));

puts (data);

free (topic);

free (data);

}

zctx_destroy (&context);

return 0;

}

pathosub: C++ 中的病态订阅者

// Pathological subscriber

// Subscribes to one random topic and prints received messages

#include "zhelpers.hpp"

int main (int argc, char *argv [])

{

zmq::context_t context(1);

zmq::socket_t subscriber (context, ZMQ_SUB);

// Initialize random number generator

srandom ((unsigned) time (NULL));

if (argc == 2)

subscriber.connect(argv [1]);

else

subscriber.connect("tcp://:5556");

std::stringstream ss;

ss << std::dec << std::setw(3) << std::setfill('0') << within(1000);

std::cout << "topic:" << ss.str() << std::endl;

subscriber.set( zmq::sockopt::subscribe, ss.str().c_str());

while (1) {

std::string topic = s_recv (subscriber);

std::string data = s_recv (subscriber);

if (topic != ss.str())

break;

std::cout << data << std::endl;

}

return 0;

}

pathosub: C# 中的病态订阅者

pathosub: CL 中的病态订阅者

pathosub: Delphi 中的病态订阅者

pathosub: Erlang 中的病态订阅者

pathosub: Elixir 中的病态订阅者

pathosub: F# 中的病态订阅者

pathosub: Felix 中的病态订阅者

pathosub: Go 中的病态订阅者

pathosub: Haskell 中的病态订阅者

pathosub: Haxe 中的病态订阅者

pathosub: Java 中的病态订阅者

package guide;

import java.util.Random;

import org.zeromq.SocketType;

import org.zeromq.ZContext;

import org.zeromq.ZMQ;

import org.zeromq.ZMQ.Socket;

// Pathological subscriber

// Subscribes to one random topic and prints received messages

public class pathosub

{

public static void main(String[] args)

{

try (ZContext context = new ZContext()) {

Socket subscriber = context.createSocket(SocketType.SUB);

if (args.length == 1)

subscriber.connect(args[0]);

else subscriber.connect("tcp://:5556");

Random rand = new Random(System.currentTimeMillis());

String subscription = String.format("%03d", rand.nextInt(1000));

subscriber.subscribe(subscription.getBytes(ZMQ.CHARSET));

while (true) {

String topic = subscriber.recvStr();

if (topic == null)

break;

String data = subscriber.recvStr();

assert (topic.equals(subscription));

System.out.println(data);

}

}

}

}

pathosub: Julia 中的病态订阅者

pathosub: Lua 中的病态订阅者

pathosub: Node.js 中的病态订阅者

pathosub: Objective-C 中的病态订阅者

pathosub: ooc 中的病态订阅者

pathosub: Perl 中的病态订阅者

pathosub: PHP 中的病态订阅者

pathosub: Python 中的病态订阅者

#

# Pathological subscriber

# Subscribes to one random topic and prints received messages

#

import sys

import time

from random import randint

import zmq

def main(url=None):

ctx = zmq.Context.instance()

subscriber = ctx.socket(zmq.SUB)

if url is None:

url = "tcp://:5556"

subscriber.connect(url)

subscription = b"%03d" % randint(0,999)

subscriber.setsockopt(zmq.SUBSCRIBE, subscription)

while True:

topic, data = subscriber.recv_multipart()

assert topic == subscription

print data

if __name__ == '__main__':

main(sys.argv[1] if len(sys.argv) > 1 else None)

pathosub: Q 中的病态订阅者

pathosub: Racket 中的病态订阅者

pathosub: Ruby 中的病态订阅者

#!/usr/bin/env ruby

#

# Pathological subscriber

# Subscribes to one random topic and prints received messages

#

require 'ffi-rzmq'

context = ZMQ::Context.new

subscriber = context.socket(ZMQ::SUB)

subscriber.connect(ARGV[0] || "tcp://:5556")

topic = "%03d" % [rand(1000)]

subscriber.setsockopt(ZMQ::SUBSCRIBE, topic)

loop do

subscriber.recv_strings(parts = [])

topic, data = parts

puts "#{topic}: #{data}"

end

pathosub: Rust 中的病态订阅者

pathosub: Scala 中的病态订阅者

pathosub: Tcl 中的病态订阅者

pathosub: OCaml 中的病态订阅者

尝试构建并运行这些代码:先运行订阅者,再运行发布者。你会看到订阅者按预期报告收到“Save Roger”

./pathosub &

./pathopub

当你运行第二个订阅者时,你就会明白 Roger 的困境。你需要等待相当长的时间,它才会报告收到任何数据。所以,这就是我们的最新值缓存。正如我承诺的,它是一个代理,绑定到两个套接字,然后处理这两个套接字上的消息。

lvcache: Ada 中的最新值缓存代理

lvcache: Basic 中的最新值缓存代理

lvcache: C 中的最新值缓存代理

// Last value cache

// Uses XPUB subscription messages to re-send data

#include "czmq.h"

int main (void)

{

zctx_t *context = zctx_new ();

void *frontend = zsocket_new (context, ZMQ_SUB);

zsocket_connect (frontend, "tcp://*:5557");

void *backend = zsocket_new (context, ZMQ_XPUB);

zsocket_bind (backend, "tcp://*:5558");

// Subscribe to every single topic from publisher

zsocket_set_subscribe (frontend, "");

// Store last instance of each topic in a cache

zhash_t *cache = zhash_new ();

// .split main poll loop

// We route topic updates from frontend to backend, and

// we handle subscriptions by sending whatever we cached,

// if anything:

while (true) {

zmq_pollitem_t items [] = {

{ frontend, 0, ZMQ_POLLIN, 0 },

{ backend, 0, ZMQ_POLLIN, 0 }

};

if (zmq_poll (items, 2, 1000 * ZMQ_POLL_MSEC) == -1)

break; // Interrupted

// Any new topic data we cache and then forward

if (items [0].revents & ZMQ_POLLIN) {

char *topic = zstr_recv (frontend);

char *current = zstr_recv (frontend);

if (!topic)

break;

char *previous = zhash_lookup (cache, topic);

if (previous) {

zhash_delete (cache, topic);

free (previous);

}

zhash_insert (cache, topic, current);

zstr_sendm (backend, topic);

zstr_send (backend, current);

free (topic);

}

// .split handle subscriptions

// When we get a new subscription, we pull data from the cache:

if (items [1].revents & ZMQ_POLLIN) {

zframe_t *frame = zframe_recv (backend);

if (!frame)

break;

// Event is one byte 0=unsub or 1=sub, followed by topic

byte *event = zframe_data (frame);

if (event [0] == 1) {

char *topic = zmalloc (zframe_size (frame));

memcpy (topic, event + 1, zframe_size (frame) - 1);

printf ("Sending cached topic %s\n", topic);

char *previous = zhash_lookup (cache, topic);

if (previous) {

zstr_sendm (backend, topic);

zstr_send (backend, previous);

}

free (topic);

}

zframe_destroy (&frame);

}

}

zctx_destroy (&context);

zhash_destroy (&cache);

return 0;

}

lvcache: C++ 中的最新值缓存代理

// Last value cache

// Uses XPUB subscription messages to re-send data

#include <unordered_map>

#include "zhelpers.hpp"

int main ()

{

zmq::context_t context(1);

zmq::socket_t frontend(context, ZMQ_SUB);

zmq::socket_t backend(context, ZMQ_XPUB);

frontend.connect("tcp://:5557");

backend.bind("tcp://*:5558");

// Subscribe to every single topic from publisher

frontend.set(zmq::sockopt::subscribe, "");

// Store last instance of each topic in a cache

std::unordered_map<std::string, std::string> cache_map;

zmq::pollitem_t items[2] = {

{ static_cast<void*>(frontend), 0, ZMQ_POLLIN, 0 },

{ static_cast<void*>(backend), 0, ZMQ_POLLIN, 0 }

};

// .split main poll loop

// We route topic updates from frontend to backend, and we handle

// subscriptions by sending whatever we cached, if anything:

while (1)

{

if (zmq::poll(items, 2, 1000) == -1)

break; // Interrupted

// Any new topic data we cache and then forward

if (items[0].revents & ZMQ_POLLIN)

{

std::string topic = s_recv(frontend);

std::string data = s_recv(frontend);

if (topic.empty())

break;

cache_map[topic] = data;

s_sendmore(backend, topic);

s_send(backend, data);

}

// .split handle subscriptions

// When we get a new subscription, we pull data from the cache:

if (items[1].revents & ZMQ_POLLIN) {

zmq::message_t msg;

backend.recv(&msg);

if (msg.size() == 0)

break;

// Event is one byte 0=unsub or 1=sub, followed by topic

uint8_t *event = (uint8_t *)msg.data();

if (event[0] == 1) {

std::string topic((char *)(event+1), msg.size()-1);

auto i = cache_map.find(topic);

if (i != cache_map.end())

{

s_sendmore(backend, topic);

s_send(backend, i->second);

}

}

}

}

return 0;

}

lvcache: C# 中的最新值缓存代理

lvcache: CL 中的最新值缓存代理

lvcache: Delphi 中的最新值缓存代理

lvcache: Erlang 中的最新值缓存代理

lvcache: Elixir 中的最新值缓存代理

lvcache: F# 中的最新值缓存代理

lvcache: Felix 中的最新值缓存代理

lvcache: Go 中的最新值缓存代理

lvcache: Haskell 中的最新值缓存代理

lvcache: Haxe 中的最新值缓存代理

lvcache: Java 中的最新值缓存代理

package guide;

import java.util.HashMap;

import java.util.Map;

import org.zeromq.SocketType;

import org.zeromq.ZContext;

import org.zeromq.ZFrame;

import org.zeromq.ZMQ;

import org.zeromq.ZMQ.Poller;

import org.zeromq.ZMQ.Socket;

// Last value cache

// Uses XPUB subscription messages to re-send data

public class lvcache

{

public static void main(String[] args)

{

try (ZContext context = new ZContext()) {

Socket frontend = context.createSocket(SocketType.SUB);

frontend.bind("tcp://*:5557");

Socket backend = context.createSocket(SocketType.XPUB);

backend.bind("tcp://*:5558");

// Subscribe to every single topic from publisher

frontend.subscribe(ZMQ.SUBSCRIPTION_ALL);

// Store last instance of each topic in a cache

Map<String, String> cache = new HashMap<String, String>();

Poller poller = context.createPoller(2);

poller.register(frontend, Poller.POLLIN);

poller.register(backend, Poller.POLLIN);

// .split main poll loop

// We route topic updates from frontend to backend, and we handle

// subscriptions by sending whatever we cached, if anything:

while (true) {

if (poller.poll(1000) == -1)

break; // Interrupted

// Any new topic data we cache and then forward

if (poller.pollin(0)) {

String topic = frontend.recvStr();

String current = frontend.recvStr();

if (topic == null)

break;

cache.put(topic, current);

backend.sendMore(topic);

backend.send(current);

}

// .split handle subscriptions

// When we get a new subscription, we pull data from the cache:

if (poller.pollin(1)) {

ZFrame frame = ZFrame.recvFrame(backend);

if (frame == null)

break;

// Event is one byte 0=unsub or 1=sub, followed by topic

byte[] event = frame.getData();

if (event[0] == 1) {

String topic = new String(event, 1, event.length - 1, ZMQ.CHARSET);

System.out.printf("Sending cached topic %s\n", topic);

String previous = cache.get(topic);

if (previous != null) {

backend.sendMore(topic);

backend.send(previous);

}

}

frame.destroy();

}

}

}

}

}

lvcache: Julia 中的最新值缓存代理

lvcache: Lua 中的最新值缓存代理

lvcache: Node.js 中的最新值缓存代理

// Last value cache

// Uses XPUB subscription messages to re-send data

var zmq = require('zeromq');

var frontEnd = zmq.socket('sub');

var backend = zmq.socket('xpub');

var cache = {};

frontEnd.connect('tcp://127.0.0.1:5557');

frontEnd.subscribe('');

backend.bindSync('tcp://*:5558');

frontEnd.on('message', function(topic, message) {

cache[topic] = message;

backend.send([topic, message]);

});

backend.on('message', function(frame) {

// frame is one byte 0=unsub or 1=sub, followed by topic

if (frame[0] === 1) {

var topic = frame.slice(1);

var previous = cache[topic];

console.log('Sending cached topic ' + topic);

if (typeof previous !== 'undefined') {

backend.send([topic, previous]);

}

}

});

process.on('SIGINT', function() {

frontEnd.close();

backend.close();

console.log('\nClosed')

});

lvcache: Objective-C 中的最新值缓存代理

lvcache: ooc 中的最新值缓存代理

lvcache: Perl 中的最新值缓存代理

lvcache: PHP 中的最新值缓存代理

lvcache: Python 中的最新值缓存代理

#

# Last value cache

# Uses XPUB subscription messages to re-send data

#

import zmq

def main():

ctx = zmq.Context.instance()

frontend = ctx.socket(zmq.SUB)

frontend.connect("tcp://*:5557")

backend = ctx.socket(zmq.XPUB)

backend.bind("tcp://*:5558")

# Subscribe to every single topic from publisher

frontend.setsockopt(zmq.SUBSCRIBE, b"")

# Store last instance of each topic in a cache

cache = {}

# main poll loop

# We route topic updates from frontend to backend, and

# we handle subscriptions by sending whatever we cached,

# if anything:

poller = zmq.Poller()

poller.register(frontend, zmq.POLLIN)

poller.register(backend, zmq.POLLIN)

while True:

try:

events = dict(poller.poll(1000))

except KeyboardInterrupt:

print("interrupted")

break

# Any new topic data we cache and then forward

if frontend in events:

msg = frontend.recv_multipart()

topic, current = msg

cache[topic] = current

backend.send_multipart(msg)

# handle subscriptions

# When we get a new subscription we pull data from the cache:

if backend in events:

event = backend.recv()

# Event is one byte 0=unsub or 1=sub, followed by topic

if event[0] == 1:

topic = event[1:]

if topic in cache:

print ("Sending cached topic %s" % topic)

backend.send_multipart([ topic, cache[topic] ])

if __name__ == '__main__':

main()

lvcache: Q 中的最新值缓存代理

lvcache: Racket 中的最新值缓存代理

lvcache: Ruby 中的最新值缓存代理

#!/usr/bin/env ruby

#

# Last value cache

# Uses XPUB subscription messages to re-send data

#

require 'ffi-rzmq'

context = ZMQ::Context.new

frontend = context.socket(ZMQ::SUB)

frontend.connect("tcp://*:5557")

backend = context.socket(ZMQ::XPUB)

backend.bind("tcp://*:5558")

# Subscribe to every single topic from publisher

frontend.setsockopt(ZMQ::SUBSCRIBE, "")

# Store last instance of each topic in a cache

cache = {}

# We route topic updates from frontend to backend, and we handle subscriptions

# by sending whatever we cached, if anything:

poller = ZMQ::Poller.new

[frontend, backend].each { |sock| poller.register_readable sock }

loop do

poller.poll(1000)

poller.readables.each do |sock|

if sock == frontend

# Any new topic data we cache and then forward

frontend.recv_strings(parts = [])

topic, data = parts

cache[topic] = data

backend.send_strings(parts)

elsif sock == backend

# When we get a new subscription we pull data from the cache:

backend.recv_strings(parts = [])

event, _ = parts

# Event is one byte 0=unsub or 1=sub, followed by topic

if event[0].ord == 1

topic = event[1..-1]

puts "Sending cached topic #{topic}"

previous = cache[topic]

backend.send_strings([topic, previous]) if previous

end

end

end

end

lvcache: Rust 中的最新值缓存代理

lvcache: Scala 中的最新值缓存代理

lvcache: Tcl 中的最新值缓存代理

lvcache: OCaml 中的最新值缓存代理

现在,运行代理,然后再运行发布者

./lvcache &

./pathopub tcp://:5557

现在,运行任意数量的订阅者实例,每次都连接到端口 5558 上的代理

./pathosub tcp://:5558

每个订阅者都开心地报告收到“Save Roger”,而越狱犯 Gregor 则溜回座位享用晚餐和一杯热牛奶,这才是他真正想要的。

注意一点:默认情况下,XPUB 套接字不会报告重复订阅,这是您天真地将 XPUB 连接到 XSUB 时所期望的行为。我们的示例巧妙地绕过了这个问题,通过使用随机主题,这样它不工作的几率只有百万分之一。在真正的 LVC 代理中,您会希望使用ZMQ_XPUB_VERBOSE选项,我们在第 6 章 - ZeroMQ 社区中将其作为一个练习来实现。

慢速订阅者检测 (Suicidal Snail 模式) #

在实际应用中使用发布/订阅模式时,您会遇到的一个常见问题是慢速订阅者。在理想世界中,我们以全速将数据从发布者传输到订阅者。在现实中,订阅者应用程序通常是用解释型语言编写的,或者只是做了大量工作,或者写得很糟糕,以至于它们无法跟上发布者的速度。

我们如何处理慢速订阅者?理想的解决方案是让订阅者更快,但这可能需要工作和时间。一些处理慢速订阅者的经典策略包括:

-

在发布者端排队消息。当我不读邮件几个小时时,Gmail 就是这样做的。但在高流量消息传递中,将队列推向上游会导致发布者内存不足并崩溃,结果既惊心动魄又不划算——特别是当订阅者数量很多且出于性能原因无法刷新到磁盘时。

-

在订阅者端排队消息。这要好得多,如果网络能够跟上,ZeroMQ 默认就是这样做的。如果有人会内存不足并崩溃,那将是订阅者而不是发布者,这是公平的。这对于“峰值”流非常适用,在这种情况下,订阅者可能暂时无法跟上,但在流速变慢时可以赶上。然而,这对于总体上速度过慢的订阅者来说并非解决方案。

-

一段时间后停止新消息排队。当我的邮箱溢出其宝贵的几 GB 空间时,Gmail 就是这样做的。新消息会被拒绝或丢弃。从发布者的角度来看,这是一个很好的策略,也是 ZeroMQ 在发布者设置 HWM 时所做的事情。然而,这仍然无法帮助我们解决慢速订阅者的问题。现在我们的消息流中只会出现空白。

-

通过断开连接惩罚慢速订阅者。这是 Hotmail(还记得吗?)在我两周没有登录时做的事情,这也是当我意识到可能有更好的方法时,我已经在使用我的第十五个 Hotmail 账户的原因。这是一种很好的残酷策略,它迫使订阅者振作精神并集中注意力,这会很理想,但 ZeroMQ 不会这样做,并且没有办法在其之上实现这一层,因为订阅者对于发布者应用程序来说是不可见的。

这些经典策略都不适用,所以我们需要创新。与其断开发布者,不如说服订阅者自杀。这就是 Suicidal Snail 模式。当订阅者检测到自己运行速度过慢(其中“过慢”大概是一个配置选项,实际意思是“慢到如果你到达这里,就大声呼救,因为我需要知道,这样我才能修复它!”)时,它就会“呱”一声然后死去。

订阅者如何检测到这一点?一种方法是给消息编号(按顺序编号),并在发布者端使用 HWM。现在,如果订阅者检测到缺口(即编号不连续),它就知道有问题了。然后我们将 HWM 调整到“如果达到此水平就‘呱’一声死去”的程度。

这个解决方案有两个问题。第一,如果我们有多个发布者,如何给消息编号?解决方案是给每个发布者一个唯一的 ID 并添加到编号中。第二,如果订阅者使用ZMQ_SUBSCRIBE过滤器,他们从定义上就会获得缺口。我们宝贵的编号将毫无用处。

有些用例不使用过滤器,编号对它们有效。但更通用的解决方案是发布者为每条消息加上时间戳。当订阅者收到消息时,它检查时间,如果差值超过(比如)一秒,它就会执行“呱”一声死去的动作,可能会先向操作员控制台发送一声尖叫。

自杀蜗牛模式特别适用于订阅者拥有自己的客户端和服务水平协议,并且需要保证一定的最大延迟的情况。中止一个订阅者可能看起来不是一种建设性的方式来保证最大延迟,但这是一种断言模型。今天就中止,问题就会得到解决。允许延迟的数据向下游流动,问题可能会造成更广泛的损害,并且需要更长时间才能被发现。

这里是一个自杀蜗牛的最小示例

suisnail: 自杀蜗牛 使用 Ada

suisnail: 自杀蜗牛 使用 Basic

suisnail: 自杀蜗牛 使用 C

// Suicidal Snail

#include "czmq.h"

// This is our subscriber. It connects to the publisher and subscribes

// to everything. It sleeps for a short time between messages to

// simulate doing too much work. If a message is more than one second

// late, it croaks.

#define MAX_ALLOWED_DELAY 1000 // msecs

static void

subscriber (void *args, zctx_t *ctx, void *pipe)

{

// Subscribe to everything

void *subscriber = zsocket_new (ctx, ZMQ_SUB);

zsocket_set_subscribe (subscriber, "");

zsocket_connect (subscriber, "tcp://:5556");

// Get and process messages

while (true) {

char *string = zstr_recv (subscriber);

printf("%s\n", string);

int64_t clock;

int terms = sscanf (string, "%" PRId64, &clock);

assert (terms == 1);

free (string);

// Suicide snail logic

if (zclock_time () - clock > MAX_ALLOWED_DELAY) {

fprintf (stderr, "E: subscriber cannot keep up, aborting\n");

break;

}

// Work for 1 msec plus some random additional time

zclock_sleep (1 + randof (2));

}

zstr_send (pipe, "gone and died");

}

// .split publisher task

// This is our publisher task. It publishes a time-stamped message to its

// PUB socket every millisecond:

static void

publisher (void *args, zctx_t *ctx, void *pipe)

{

// Prepare publisher

void *publisher = zsocket_new (ctx, ZMQ_PUB);

zsocket_bind (publisher, "tcp://*:5556");

while (true) {

// Send current clock (msecs) to subscribers

char string [20];

sprintf (string, "%" PRId64, zclock_time ());

zstr_send (publisher, string);

char *signal = zstr_recv_nowait (pipe);

if (signal) {

free (signal);

break;

}

zclock_sleep (1); // 1msec wait

}

}

// .split main task

// The main task simply starts a client and a server, and then

// waits for the client to signal that it has died:

int main (void)

{

zctx_t *ctx = zctx_new ();

void *pubpipe = zthread_fork (ctx, publisher, NULL);

void *subpipe = zthread_fork (ctx, subscriber, NULL);

free (zstr_recv (subpipe));

zstr_send (pubpipe, "break");

zclock_sleep (100);

zctx_destroy (&ctx);

return 0;

}

suisnail: 自杀蜗牛 使用 C++

//

// Suicidal Snail

//

// Andreas Hoelzlwimmer <andreas.hoelzlwimmer@fh-hagenberg.at>

#include "zhelpers.hpp"

#include <thread>

// ---------------------------------------------------------------------

// This is our subscriber

// It connects to the publisher and subscribes to everything. It

// sleeps for a short time between messages to simulate doing too

// much work. If a message is more than 1 second late, it croaks.

#define MAX_ALLOWED_DELAY 1000 // msecs

namespace {

bool Exit = false;

};

static void *

subscriber () {

zmq::context_t context(1);

// Subscribe to everything

zmq::socket_t subscriber(context, ZMQ_SUB);

subscriber.connect("tcp://:5556");

subscriber.set(zmq::sockopt::subscribe, "");

std::stringstream ss;

// Get and process messages

while (1) {

ss.clear();

ss.str(s_recv (subscriber));

int64_t clock;

assert ((ss >> clock));

const auto delay = s_clock () - clock;

// Suicide snail logic

if (delay> MAX_ALLOWED_DELAY) {

std::cerr << "E: subscriber cannot keep up, aborting. Delay=" <<delay<< std::endl;

break;

}

// Work for 1 msec plus some random additional time

s_sleep(1000*(1+within(2)));

}

Exit = true;

return (NULL);

}

// ---------------------------------------------------------------------

// This is our server task

// It publishes a time-stamped message to its pub socket every 1ms.

static void *

publisher () {

zmq::context_t context (1);

// Prepare publisher

zmq::socket_t publisher(context, ZMQ_PUB);

publisher.bind("tcp://*:5556");

std::stringstream ss;

while (!Exit) {

// Send current clock (msecs) to subscribers

ss.str("");

ss << s_clock();

s_send (publisher, ss.str());

s_sleep(1);

}

return 0;

}

// This main thread simply starts a client, and a server, and then

// waits for the client to croak.

//

int main (void)

{

std::thread server_thread(&publisher);

std::thread client_thread(&subscriber);

client_thread.join();

server_thread.join();

return 0;

}

suisnail: 自杀蜗牛 使用 C#

suisnail: 自杀蜗牛 使用 CL

suisnail: 自杀蜗牛 使用 Delphi

suisnail: 自杀蜗牛 使用 Erlang

suisnail: 自杀蜗牛 使用 Elixir

suisnail: 自杀蜗牛 使用 F#

suisnail: 自杀蜗牛 使用 Felix

suisnail: 自杀蜗牛 使用 Go

suisnail: 自杀蜗牛 使用 Haskell

suisnail: 自杀蜗牛 使用 Haxe

suisnail: 自杀蜗牛 使用 Java

package guide;

import java.util.Random;

// Suicidal Snail

import org.zeromq.SocketType;

import org.zeromq.ZContext;

import org.zeromq.ZMQ;

import org.zeromq.ZMQ.Socket;

import org.zeromq.ZThread;

import org.zeromq.ZThread.IAttachedRunnable;

public class suisnail

{

private static final long MAX_ALLOWED_DELAY = 1000; // msecs

private static Random rand = new Random(System.currentTimeMillis());

// This is our subscriber. It connects to the publisher and subscribes to

// everything. It sleeps for a short time between messages to simulate

// doing too much work. If a message is more than one second late, it

// croaks.

private static class Subscriber implements IAttachedRunnable

{

@Override

public void run(Object[] args, ZContext ctx, Socket pipe)

{

// Subscribe to everything

Socket subscriber = ctx.createSocket(SocketType.SUB);

subscriber.subscribe(ZMQ.SUBSCRIPTION_ALL);

subscriber.connect("tcp://:5556");

// Get and process messages

while (true) {

String string = subscriber.recvStr();

System.out.printf("%s\n", string);

long clock = Long.parseLong(string);

// Suicide snail logic

if (System.currentTimeMillis() - clock > MAX_ALLOWED_DELAY) {

System.err.println(

"E: subscriber cannot keep up, aborting"

);

break;

}

// Work for 1 msec plus some random additional time

try {

Thread.sleep(1000 + rand.nextInt(2000));

}

catch (InterruptedException e) {

break;

}

}

pipe.send("gone and died");

}

}

// .split publisher task

// This is our publisher task. It publishes a time-stamped message to its

// PUB socket every millisecond:

private static class Publisher implements IAttachedRunnable

{

@Override

public void run(Object[] args, ZContext ctx, Socket pipe)

{

// Prepare publisher

Socket publisher = ctx.createSocket(SocketType.PUB);

publisher.bind("tcp://*:5556");

while (true) {

// Send current clock (msecs) to subscribers

String string = String.format("%d", System.currentTimeMillis());

publisher.send(string);

String signal = pipe.recvStr(ZMQ.DONTWAIT);

if (signal != null) {

break;

}

try {

Thread.sleep(1);

}

catch (InterruptedException e) {

}

}

}

}

// .split main task

// The main task simply starts a client and a server, and then waits for

// the client to signal that it has died:

public static void main(String[] args) throws Exception

{

try (ZContext ctx = new ZContext()) {

Socket pubpipe = ZThread.fork(ctx, new Publisher());

Socket subpipe = ZThread.fork(ctx, new Subscriber());

subpipe.recvStr();

pubpipe.send("break");

Thread.sleep(100);

}

}

}

suisnail: 自杀蜗牛 使用 Julia

suisnail: 自杀蜗牛 使用 Lua

--

-- Suicidal Snail

--

-- Author: Robert G. Jakabosky <bobby@sharedrealm.com>

--

require"zmq"

require"zmq.threads"

require"zhelpers"

-- ---------------------------------------------------------------------

-- This is our subscriber

-- It connects to the publisher and subscribes to everything. It

-- sleeps for a short time between messages to simulate doing too

-- much work. If a message is more than 1 second late, it croaks.

local subscriber = [[

require"zmq"

require"zhelpers"

local MAX_ALLOWED_DELAY = 1000 -- msecs

local context = zmq.init(1)

-- Subscribe to everything

local subscriber = context:socket(zmq.SUB)

subscriber:connect("tcp://:5556")

subscriber:setopt(zmq.SUBSCRIBE, "", 0)

-- Get and process messages

while true do

local msg = subscriber:recv()

local clock = tonumber(msg)

-- Suicide snail logic

if (s_clock () - clock > MAX_ALLOWED_DELAY) then

fprintf (io.stderr, "E: subscriber cannot keep up, aborting\n")

break

end

-- Work for 1 msec plus some random additional time

s_sleep (1 + randof (2))

end

subscriber:close()

context:term()

]]

-- ---------------------------------------------------------------------

-- This is our server task

-- It publishes a time-stamped message to its pub socket every 1ms.

local publisher = [[

require"zmq"

require"zhelpers"

local context = zmq.init(1)

-- Prepare publisher

local publisher = context:socket(zmq.PUB)

publisher:bind("tcp://*:5556")

while true do

-- Send current clock (msecs) to subscribers

publisher:send(tostring(s_clock()))

s_sleep (1); -- 1msec wait

end

publisher:close()

context:term()

]]

-- This main thread simply starts a client, and a server, and then

-- waits for the client to croak.

--

local server_thread = zmq.threads.runstring(nil, publisher)

server_thread:start(true)

local client_thread = zmq.threads.runstring(nil, subscriber)

client_thread:start()

client_thread:join()

suisnail: 自杀蜗牛 使用 Node.js

suisnail: 自杀蜗牛 使用 Objective-C

suisnail: 自杀蜗牛 使用 ooc

suisnail: 自杀蜗牛 使用 Perl

suisnail: 自杀蜗牛 使用 PHP

<?php

/* Suicidal Snail

*

* @author Ian Barber <ian(dot)barber(at)gmail(dot)com>

*/

/* ---------------------------------------------------------------------

* This is our subscriber

* It connects to the publisher and subscribes to everything. It

* sleeps for a short time between messages to simulate doing too

* much work. If a message is more than 1 second late, it croaks.

*/

define("MAX_ALLOWED_DELAY", 100); // msecs

function subscriber()

{

$context = new ZMQContext();

// Subscribe to everything

$subscriber = new ZMQSocket($context, ZMQ::SOCKET_SUB);

$subscriber->connect("tcp://:5556");

$subscriber->setSockOpt(ZMQ::SOCKOPT_SUBSCRIBE, "");

// Get and process messages

while (true) {

$clock = $subscriber->recv();

// Suicide snail logic

if (microtime(true)*100 - $clock*100 > MAX_ALLOWED_DELAY) {

echo "E: subscriber cannot keep up, aborting", PHP_EOL;

break;

}

// Work for 1 msec plus some random additional time

usleep(1000 + rand(0, 1000));

}

}

/* ---------------------------------------------------------------------

* This is our server task

* It publishes a time-stamped message to its pub socket every 1ms.

*/

function publisher()

{

$context = new ZMQContext();

// Prepare publisher

$publisher = new ZMQSocket($context, ZMQ::SOCKET_PUB);

$publisher->bind("tcp://*:5556");

while (true) {

// Send current clock (msecs) to subscribers

$publisher->send(microtime(true));

usleep(1000); // 1msec wait

}

}

/*

* This main thread simply starts a client, and a server, and then

* waits for the client to croak.

*/

$pid = pcntl_fork();

if ($pid == 0) {

publisher();

exit();

}

$pid = pcntl_fork();

if ($pid == 0) {

subscriber();

exit();

}

suisnail: 自杀蜗牛 使用 Python

"""

Suicidal Snail

Author: Min RK <benjaminrk@gmail.com>

"""

from __future__ import print_function

import sys

import threading

import time

from pickle import dumps, loads

import random

import zmq

from zhelpers import zpipe

# ---------------------------------------------------------------------

# This is our subscriber

# It connects to the publisher and subscribes to everything. It

# sleeps for a short time between messages to simulate doing too

# much work. If a message is more than 1 second late, it croaks.

MAX_ALLOWED_DELAY = 1.0 # secs

def subscriber(pipe):

# Subscribe to everything

ctx = zmq.Context.instance()

sub = ctx.socket(zmq.SUB)

sub.setsockopt(zmq.SUBSCRIBE, b'')

sub.connect("tcp://:5556")

# Get and process messages

while True:

clock = loads(sub.recv())

# Suicide snail logic

if (time.time() - clock > MAX_ALLOWED_DELAY):

print("E: subscriber cannot keep up, aborting", file=sys.stderr)

break

# Work for 1 msec plus some random additional time

time.sleep(1e-3 * (1+2*random.random()))

pipe.send(b"gone and died")

# ---------------------------------------------------------------------

# This is our server task

# It publishes a time-stamped message to its pub socket every 1ms.

def publisher(pipe):

# Prepare publisher

ctx = zmq.Context.instance()

pub = ctx.socket(zmq.PUB)

pub.bind("tcp://*:5556")

while True:

# Send current clock (secs) to subscribers

pub.send(dumps(time.time()))

try:

signal = pipe.recv(zmq.DONTWAIT)

except zmq.ZMQError as e:

if e.errno == zmq.EAGAIN:

# nothing to recv

pass

else:

raise

else:

# received break message

break

time.sleep(1e-3) # 1msec wait

# This main thread simply starts a client, and a server, and then

# waits for the client to signal it's died.

def main():

ctx = zmq.Context.instance()

pub_pipe, pub_peer = zpipe(ctx)

sub_pipe, sub_peer = zpipe(ctx)

pub_thread = threading.Thread(target=publisher, args=(pub_peer,))

pub_thread.daemon=True

pub_thread.start()

sub_thread = threading.Thread(target=subscriber, args=(sub_peer,))

sub_thread.daemon=True

sub_thread.start()

# wait for sub to finish

sub_pipe.recv()

# tell pub to halt

pub_pipe.send(b"break")

time.sleep(0.1)

if __name__ == '__main__':

main()

suisnail: 自杀蜗牛 使用 Q

suisnail: 自杀蜗牛 使用 Racket

suisnail: 自杀蜗牛 使用 Ruby

suisnail: 自杀蜗牛 使用 Rust

suisnail: 自杀蜗牛 使用 Scala

suisnail: 自杀蜗牛 使用 Tcl

#

# Suicidal Snail

#

package require zmq

if {[llength $argv] == 0} {

set argv [list driver]

} elseif {[llength $argv] != 1} {

puts "Usage: suisnail.tcl <driver|sub|pub>"

exit 1

}

lassign $argv what

set MAX_ALLOWED_DELAY 1000 ;# msecs

set tclsh [info nameofexecutable]

expr {srand([pid])}

switch -exact -- $what {

sub {

# This is our subscriber

# It connects to the publisher and subscribes to everything. It

# sleeps for a short time between messages to simulate doing too

# much work. If a message is more than 1 second late, it croaks.

zmq context context

zmq socket subpipe context PAIR

subpipe connect "ipc://subpipe.ipc"

# Subscribe to everything

zmq socket subscriber context SUB

subscriber setsockopt SUBSCRIBE ""

subscriber connect "tcp://:5556"

# Get and process messages

while {1} {

set string [subscriber recv]

puts "$string (delay = [expr {[clock milliseconds] - $string}])"

if {[clock milliseconds] - $string > $::MAX_ALLOWED_DELAY} {

puts stderr "E: subscriber cannot keep up, aborting"

break

}

after [expr {1+int(rand()*2)}]

}

subpipe send "gone and died"

subscriber close

subpipe close

context term

}

pub {

# This is our server task

# It publishes a time-stamped message to its pub socket every 1ms.

zmq context context

zmq socket pubpipe context PAIR

pubpipe connect "ipc://pubpipe.ipc"

# Prepare publisher

zmq socket publisher context PUB

publisher bind "tcp://*:5556"

while {1} {

# Send current clock (msecs) to subscribers

publisher send [clock milliseconds]

if {"POLLIN" in [pubpipe getsockopt EVENTS]} {

break

}

after 1 ;# 1msec wait

}

publisher close

pubpipe close

context term

}

driver {

zmq context context

zmq socket pubpipe context PAIR

pubpipe bind "ipc://pubpipe.ipc"

zmq socket subpipe context PAIR

subpipe bind "ipc://subpipe.ipc"

puts "Start publisher, output redirected to publisher.log"

exec $tclsh suisnail.tcl pub > publisher.log 2>@1 &

puts "Start subscriber, output redirected to subscriber.log"

exec $tclsh suisnail.tcl sub > subscriber.log 2>@1 &

subpipe recv

pubpipe send "break"

after 100

pubpipe close

subpipe close

context term

}

}

suisnail: 自杀蜗牛 使用 OCaml

关于自杀蜗牛示例的一些注意事项如下:

-

此处的消息仅包含当前系统时钟(表示为毫秒数)。在实际应用中,消息至少应包含带时间戳的消息头和带数据的消息体。

-

示例将订阅者和发布者作为两个线程放在同一个进程中。实际上,它们应该是独立的进程。使用线程只是为了方便演示。

高速订阅者(黑盒模式) #

现在我们来看一种方法来加快订阅者的速度。发布-订阅的一种常见用例是分发大型数据流,例如来自证券交易所的市场数据。典型的设置是发布者连接到证券交易所,获取报价,然后将其发送给许多订阅者。如果只有少数订阅者,我们可以使用 TCP。如果订阅者数量较多,我们可能会使用可靠的多播,例如 PGM。

假设我们的数据源平均每秒有 100,000 条 100 字节的消息。这是过滤掉不需要发送给订阅者的市场数据后的典型速率。现在我们决定记录一整天的数据(8 小时大约 250 GB),然后将其回放到一个模拟网络,即一小组订阅者。虽然每秒 10 万条消息对于 ZeroMQ 应用来说很容易,但我们想以快得多的速度回放它。

因此,我们建立了一个包含许多盒子的架构——一个用于发布者,每个订阅者一个。这些是配置良好的盒子——八核,发布者为十二核。

当我们将数据泵入订阅者时,我们注意到两件事

-

当我们对消息进行哪怕最少量的工作时,都会让我们的订阅者慢下来,以至于无法再次赶上发布者。

-

我们达到了一个上限,无论是在发布者还是订阅者端,大约是每秒 600 万条消息,即使经过仔细优化和 TCP 调优之后也是如此。

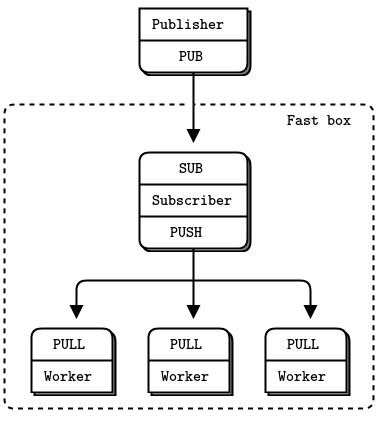

我们首先要做的是将订阅者拆分成多线程设计,这样我们就可以在一组线程中处理消息,同时在另一组线程中读取消息。通常,我们不想以相同的方式处理每条消息。相反,订阅者会过滤一些消息,可能通过前缀键进行过滤。当消息匹配某些条件时,订阅者将调用一个 worker 来处理它。用 ZeroMQ 的术语来说,这意味着将消息发送到 worker 线程。

因此,订阅者看起来像是一个队列设备。我们可以使用各种套接字来连接订阅者和 worker。如果我们假定是单向流量且所有 worker 都相同,我们可以使用 PUSH 和 PULL 并将所有路由工作委托给 ZeroMQ。这是最简单快捷的方法。

订阅者通过 TCP 或 PGM 与发布者通信。订阅者通过与位于同一进程中的 worker 通信,通过inproc:@<//>@.

现在来打破这个上限。订阅者线程会占用 100% 的 CPU,而且由于它是一个线程,所以无法使用超过一个核心。单个线程总是会达到上限,无论是在每秒 200 万、600 万还是更多消息时。我们希望将工作分配到多个可以并行运行的线程中。

许多高性能产品使用的方法(在此处也适用)是分片(sharding)。使用分片,我们将工作分解为并行且独立的流,例如将一半的主题键分到一个流中,另一半分到另一个流中。我们可以使用许多流,但除非有空闲核心,否则性能不会扩展。那么让我们看看如何分片成两个流。

使用两个流,以全速工作时,我们将按如下方式配置 ZeroMQ

- 两个 I/O 线程,而不是一个。

- 两个网络接口(NIC),每个订阅者一个。

- 每个 I/O 线程绑定到一个特定的 NIC。

- 两个订阅者线程,绑定到特定的核心。

- 两个 SUB 套接字,每个订阅者线程一个。

- 剩余的核心分配给 worker 线程。

- Worker 线程连接到两个订阅者 PUSH 套接字。

理想情况下,我们希望架构中满载线程的数量与核心数量相匹配。当线程开始争夺核心和 CPU 周期时,增加更多线程的成本将大于收益。例如,创建更多的 I/O 线程不会带来任何好处。

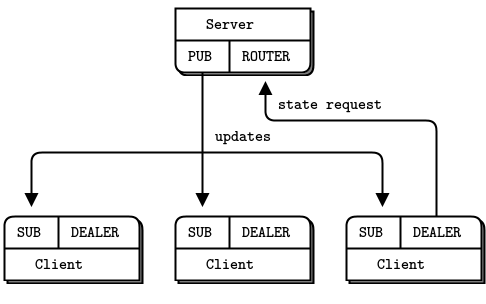

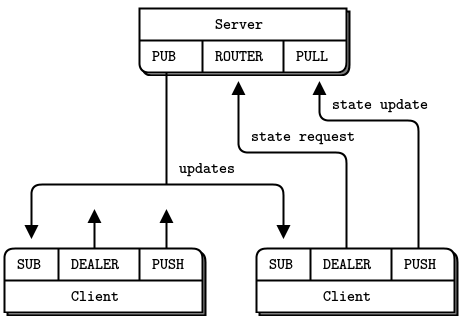

可靠的发布-订阅(克隆模式) #

作为一个更详细的示例,我们将探讨如何构建一个可靠的发布-订阅架构的问题。我们将分阶段开发它。目标是允许一组应用程序共享一些共同的状态。以下是我们的技术挑战

- 我们有大量的客户端应用程序,例如数千或数万个。

- 它们将随意加入和离开网络。

- 这些应用程序必须共享一个最终一致的状态。

- 任何应用程序都可以在任何时候更新状态。

假设更新量相对较低。我们没有实时性要求。整个状态可以放入内存。一些可能的用例包括

- 一组云服务器共享的配置。

- 一组玩家共享的游戏状态。

- 实时更新并可供应用程序使用的汇率数据。

中心化 vs 去中心化 #

我们首先需要决定的问题是是否使用中心服务器。这对最终的设计会产生很大影响。权衡如下

-

从概念上讲,中心服务器更容易理解,因为网络并非天然对称。使用中心服务器,我们可以避免所有关于服务发现、绑定(bind)与连接(connect)等问题。

-

通常,完全分布式的架构在技术上更具挑战性,但最终得到的协议更简单。也就是说,每个节点必须以正确的方式扮演服务器和客户端的角色,这很精妙。如果处理得当,结果会比使用中心服务器更简单。我们在第 4 章 - 可靠的请求-回复模式中的 Freelance 模式中看到了这一点。

-

中心服务器在高容量用例中会成为瓶颈。如果需要处理每秒数百万条消息的规模,我们应该立即考虑去中心化。

-

具有讽刺意味的是,中心化架构更容易扩展到更多节点,而去中心化架构则不然。也就是说,将 10,000 个节点连接到一个服务器比将它们相互连接更容易。

因此,对于克隆模式,我们将使用一个发布状态更新的服务器和一组代表应用程序的客户端。

将状态表示为键值对 #

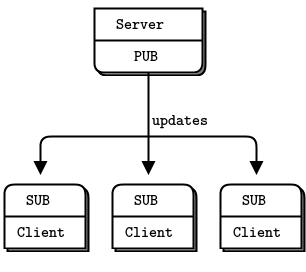

我们将分阶段开发克隆模式,一次解决一个问题。首先,让我们看看如何在一组客户端之间更新共享状态。我们需要决定如何表示我们的状态以及更新。最简单的可行格式是键值存储,其中一个键值对代表共享状态中的一个原子变化单元。

我们在第 1 章 - 基础知识中有一个简单的发布-订阅示例,即天气服务器和客户端。让我们修改服务器来发送键值对,并让客户端将其存储在哈希表中。这使我们可以使用经典的发布-订阅模型从一个服务器向一组客户端发送更新。

更新可以是新的键值对、现有键的修改值或已删除的键。现在我们可以假设整个存储适合内存,并且应用程序通过键访问它,例如使用哈希表或字典。对于更大的存储和某种持久化需求,我们可能会将状态存储在数据库中,但这与此处无关。

这是服务器端

clonesrv1: 克隆服务器,模型一 使用 Ada

clonesrv1: 克隆服务器,模型一 使用 Basic

clonesrv1: 克隆服务器,模型一 使用 C

// Clone server Model One

#include "kvsimple.c"

int main (void)

{

// Prepare our context and publisher socket

zctx_t *ctx = zctx_new ();

void *publisher = zsocket_new (ctx, ZMQ_PUB);

zsocket_bind (publisher, "tcp://*:5556");

zclock_sleep (200);

zhash_t *kvmap = zhash_new ();

int64_t sequence = 0;

srandom ((unsigned) time (NULL));

while (!zctx_interrupted) {

// Distribute as key-value message

kvmsg_t *kvmsg = kvmsg_new (++sequence);

kvmsg_fmt_key (kvmsg, "%d", randof (10000));

kvmsg_fmt_body (kvmsg, "%d", randof (1000000));

kvmsg_send (kvmsg, publisher);

kvmsg_store (&kvmsg, kvmap);

}

printf (" Interrupted\n%d messages out\n", (int) sequence);

zhash_destroy (&kvmap);

zctx_destroy (&ctx);

return 0;

}

clonesrv1: 克隆服务器,模型一 使用 C++

#include <iostream>

#include <unordered_map>

#include "kvsimple.hpp"

using namespace std;

int main() {

// Prepare our context and publisher socket

zmq::context_t ctx(1);

zmq::socket_t publisher(ctx, ZMQ_PUB);

publisher.bind("tcp://*:5555");

s_sleep(5000); // Sleep for a short while to allow connections to be established

// Initialize key-value map and sequence

unordered_map<string,string> kvmap;

int64_t sequence = 0;

srand(time(NULL));

s_catch_signals();

while (!s_interrupted) {

// Distribute as key-value message

string key = to_string(within(10000));

string body = to_string(within(1000000));

kvmsg kv(key, sequence, (unsigned char *)body.c_str());

kv.send(publisher); // Send key-value message

// Store key-value pair in map

kvmap[key] = body;

sequence++;

// Sleep for a short while before sending the next message

s_sleep(1000);

}

cout << "Interrupted" << endl;

cout << sequence << " messages out" << endl;

return 0;

}

clonesrv1: 克隆服务器,模型一 使用 C#

clonesrv1: 克隆服务器,模型一 使用 CL

clonesrv1: 克隆服务器,模型一 使用 Delphi

clonesrv1: 克隆服务器,模型一 使用 Erlang

clonesrv1: 克隆服务器,模型一 使用 Elixir

clonesrv1: 克隆服务器,模型一 使用 F#

clonesrv1: 克隆服务器,模型一 使用 Felix

clonesrv1: 克隆服务器,模型一 使用 Go

clonesrv1: 克隆服务器,模型一 使用 Haskell

clonesrv1: 克隆服务器,模型一 使用 Haxe

clonesrv1: 克隆服务器,模型一 使用 Java

package guide;

import java.nio.ByteBuffer;

import java.util.Random;

import java.util.concurrent.atomic.AtomicLong;

import org.zeromq.SocketType;

import org.zeromq.ZMQ;

import org.zeromq.ZMQ.Socket;

import org.zeromq.ZContext;

/**

*

* Clone server model 1

* @author Danish Shrestha <dshrestha06@gmail.com>

*

*/

public class clonesrv1

{

private static AtomicLong sequence = new AtomicLong();

public void run()

{

try (ZContext ctx = new ZContext()) {

Socket publisher = ctx.createSocket(SocketType.PUB);

publisher.bind("tcp://*:5556");

try {

Thread.sleep(200);

}

catch (InterruptedException e) {

e.printStackTrace();

}

Random random = new Random();

while (true) {

long currentSequenceNumber = sequence.incrementAndGet();

int key = random.nextInt(10000);

int body = random.nextInt(1000000);

ByteBuffer b = ByteBuffer.allocate(4);

b.asIntBuffer().put(body);

kvsimple kvMsg = new kvsimple(

key + "", currentSequenceNumber, b.array()

);

kvMsg.send(publisher);

System.out.println("sending " + kvMsg);

}

}

}

public static void main(String[] args)

{

new clonesrv1().run();

}

}

clonesrv1: 克隆服务器,模型一 使用 Julia

clonesrv1: 克隆服务器,模型一 使用 Lua

clonesrv1: 克隆服务器,模型一 使用 Node.js

clonesrv1: 克隆服务器,模型一 使用 Objective-C

clonesrv1: 克隆服务器,模型一 使用 ooc

clonesrv1: 克隆服务器,模型一 使用 Perl

clonesrv1: 克隆服务器,模型一 使用 PHP

clonesrv1: 克隆服务器,模型一 使用 Python

"""

Clone server Model One

"""

import random

import time

import zmq

from kvsimple import KVMsg

def main():

# Prepare our context and publisher socket

ctx = zmq.Context()

publisher = ctx.socket(zmq.PUB)

publisher.bind("tcp://*:5556")

time.sleep(0.2)

sequence = 0

random.seed(time.time())

kvmap = {}

try:

while True:

# Distribute as key-value message

sequence += 1

kvmsg = KVMsg(sequence)

kvmsg.key = "%d" % random.randint(1,10000)

kvmsg.body = "%d" % random.randint(1,1000000)

kvmsg.send(publisher)

kvmsg.store(kvmap)

except KeyboardInterrupt:

print " Interrupted\n%d messages out" % sequence

if __name__ == '__main__':

main()

clonesrv1: 克隆服务器,模型一 使用 Q

clonesrv1: 克隆服务器,模型一 使用 Racket

clonesrv1: 克隆服务器,模型一 使用 Ruby

clonesrv1: 克隆服务器,模型一 使用 Rust

clonesrv1: 克隆服务器,模型一 使用 Scala

clonesrv1: 克隆服务器,模型一 使用 Tcl

#

# Clone server Model One

#

lappend auto_path .

package require KVSimple

# Prepare our context and publisher socket

zmq context context

set pub [zmq socket publisher context PUB]

$pub bind "tcp://*:5556"

after 200

set sequence 0

expr srand([pid])

while {1} {

# Distribute as key-value message

set kvmsg [KVSimple new [incr sequence]]

$kvmsg set_key [expr {int(rand()*10000)}]

$kvmsg set_body [expr {int(rand()*1000000)}]

$kvmsg send $pub

$kvmsg store kvmap

puts [$kvmsg dump]

after 500

}

$pub close

context term

clonesrv1: 克隆服务器,模型一 使用 OCaml

这是客户端

clonecli1: 克隆客户端,模型一 使用 Ada

clonecli1: 克隆客户端,模型一 使用 Basic

clonecli1: 克隆客户端,模型一 使用 C

// Clone client Model One

#include "kvsimple.c"

int main (void)

{

// Prepare our context and updates socket

zctx_t *ctx = zctx_new ();

void *updates = zsocket_new (ctx, ZMQ_SUB);

zsocket_set_subscribe (updates, "");

zsocket_connect (updates, "tcp://:5556");

zhash_t *kvmap = zhash_new ();

int64_t sequence = 0;

while (true) {

kvmsg_t *kvmsg = kvmsg_recv (updates);

if (!kvmsg)

break; // Interrupted

kvmsg_store (&kvmsg, kvmap);

sequence++;

}

printf (" Interrupted\n%d messages in\n", (int) sequence);

zhash_destroy (&kvmap);

zctx_destroy (&ctx);

return 0;

}

clonecli1: 克隆客户端,模型一 使用 C++

#include <iostream>

#include <unordered_map>

#include "kvsimple.hpp"

using namespace std;

int main() {

// Prepare our context and updates socket

zmq::context_t ctx(1);

zmq::socket_t updates(ctx, ZMQ_SUB);

updates.set(zmq::sockopt::subscribe, ""); // Subscribe to all messages

updates.connect("tcp://:5555");

// Initialize key-value map and sequence

unordered_map<string, string> kvmap;

int64_t sequence = 0;

while (true) {

// Receive key-value message

auto update_kv_msg = kvmsg::recv(updates);

if (!update_kv_msg) {

cout << "Interrupted" << endl;

return 0;

}

// Convert message to string and extract key-value pair

string key = update_kv_msg->key();

string value = (char *)update_kv_msg->body().c_str();

cout << key << " --- " << value << endl;

// Store key-value pair in map

kvmap[key] = value;

sequence++;

}

return 0;

}

clonecli1: 克隆客户端,模型一 使用 C#

clonecli1: 克隆客户端,模型一 使用 CL

clonecli1: 克隆客户端,模型一 使用 Delphi

clonecli1: 克隆客户端,模型一 使用 Erlang

clonecli1: 克隆客户端,模型一 使用 Elixir

clonecli1: 克隆客户端,模型一 使用 F#

clonecli1: 克隆客户端,模型一 使用 Felix

clonecli1: 克隆客户端,模型一 使用 Go

clonecli1: 克隆客户端,模型一 使用 Haskell

clonecli1: 克隆客户端,模型一 使用 Haxe

clonecli1: 克隆客户端,模型一 使用 Java

package guide;

import java.util.HashMap;

import java.util.Map;

import java.util.concurrent.atomic.AtomicLong;

import org.zeromq.SocketType;

import org.zeromq.ZContext;

import org.zeromq.ZMQ;

import org.zeromq.ZMQ.Socket;

/**

* Clone client model 1

* @author Danish Shrestha <dshrestha06@gmail.com>

*

*/

public class clonecli1

{

private static Map<String, kvsimple> kvMap = new HashMap<String, kvsimple>();

private static AtomicLong sequence = new AtomicLong();

public void run()

{

try (ZContext ctx = new ZContext()) {

Socket subscriber = ctx.createSocket(SocketType.SUB);

subscriber.connect("tcp://:5556");

subscriber.subscribe(ZMQ.SUBSCRIPTION_ALL);

while (true) {

kvsimple kvMsg = kvsimple.recv(subscriber);

if (kvMsg == null)

break;

clonecli1.kvMap.put(kvMsg.getKey(), kvMsg);

System.out.println("receiving " + kvMsg);

sequence.incrementAndGet();

}

}

}

public static void main(String[] args)

{

new clonecli1().run();

}

}

clonecli1: 克隆客户端,模型一 使用 Julia

clonecli1: 克隆客户端,模型一 使用 Lua

clonecli1: 克隆客户端,模型一 使用 Node.js

clonecli1: 克隆客户端,模型一 使用 Objective-C

clonecli1: 克隆客户端,模型一 使用 ooc

clonecli1: 克隆客户端,模型一 使用 Perl

clonecli1: 克隆客户端,模型一 使用 PHP

clonecli1: 克隆客户端,模型一 使用 Python

"""

Clone Client Model One

Author: Min RK <benjaminrk@gmail.com>

"""

import random

import time

import zmq

from kvsimple import KVMsg

def main():

# Prepare our context and publisher socket

ctx = zmq.Context()

updates = ctx.socket(zmq.SUB)

updates.linger = 0

updates.setsockopt(zmq.SUBSCRIBE, '')

updates.connect("tcp://:5556")

kvmap = {}

sequence = 0

while True:

try:

kvmsg = KVMsg.recv(updates)

except:

break # Interrupted

kvmsg.store(kvmap)

sequence += 1

print "Interrupted\n%d messages in" % sequence

if __name__ == '__main__':

main()

clonecli1: 克隆客户端,模型一 使用 Q

clonecli1: 克隆客户端,模型一 使用 Racket

clonecli1: 克隆客户端,模型一 使用 Ruby

clonecli1: 克隆客户端,模型一 使用 Rust

clonecli1: 克隆客户端,模型一 使用 Scala

clonecli1: 克隆客户端,模型一 使用 Tcl

#

# Clone client Model One

#

lappend auto_path .

package require KVSimple

zmq context context

set upd [zmq socket updates context SUB]

$upd setsockopt SUBSCRIBE ""

$upd connect "tcp://:5556"

after 200

while {1} {

set kvmsg [KVSimple new]

$kvmsg recv $upd

$kvmsg store kvmap

puts [$kvmsg dump]

}

$upd close

context term

clonecli1: 克隆客户端,模型一 使用 OCaml

关于这个第一个模型的一些注意事项如下:

-

所有繁重的工作都在一个kvmsg类中完成。这个类处理键值消息对象,它们是多部分 ZeroMQ 消息,其结构包含三个帧:一个键(ZeroMQ 字符串)、一个序列号(64 位值,网络字节序)以及一个二进制消息体(包含其他所有内容)。

-

服务器生成带有随机 4 位数字键的消息,这使我们可以模拟一个大型但不巨大的哈希表(10K 条目)。

-

此版本中未实现删除功能:所有消息都是插入或更新。

-

服务器在绑定其套接字后暂停 200 毫秒。这是为了防止慢连接综合征(slow joiner syndrome),指订阅者在连接到服务器套接字时丢失消息的情况。我们会在后续版本的克隆代码中移除此暂停。

-

我们将在代码中使用术语发布者和订阅者来指代套接字。这将在稍后处理多个执行不同任务的套接字时有所帮助。

这是kvmsg类,这是目前可用的最简单形式

kvsimple: 键值消息类 使用 Ada

kvsimple: 键值消息类 使用 Basic

kvsimple: 键值消息类 使用 C

// kvsimple class - key-value message class for example applications

#include "kvsimple.h"

#include "zlist.h"

// Keys are short strings

#define KVMSG_KEY_MAX 255

// Message is formatted on wire as 3 frames:

// frame 0: key (0MQ string)

// frame 1: sequence (8 bytes, network order)

// frame 2: body (blob)

#define FRAME_KEY 0

#define FRAME_SEQ 1

#define FRAME_BODY 2

#define KVMSG_FRAMES 3

// The kvmsg class holds a single key-value message consisting of a

// list of 0 or more frames:

struct _kvmsg {

// Presence indicators for each frame

int present [KVMSG_FRAMES];

// Corresponding 0MQ message frames, if any

zmq_msg_t frame [KVMSG_FRAMES];

// Key, copied into safe C string

char key [KVMSG_KEY_MAX + 1];

};

// .split constructor and destructor

// Here are the constructor and destructor for the class:

// Constructor, takes a sequence number for the new kvmsg instance:

kvmsg_t *

kvmsg_new (int64_t sequence)

{

kvmsg_t

*self;

self = (kvmsg_t *) zmalloc (sizeof (kvmsg_t));

kvmsg_set_sequence (self, sequence);

return self;

}

// zhash_free_fn callback helper that does the low level destruction:

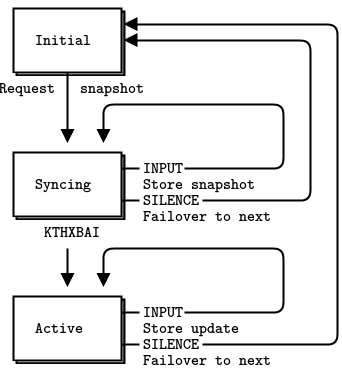

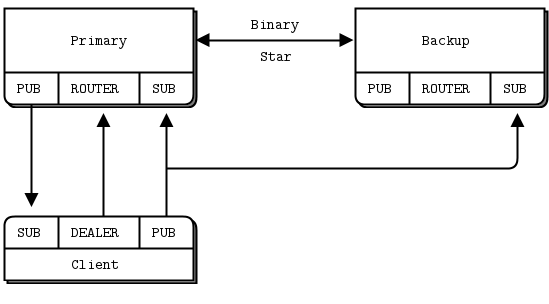

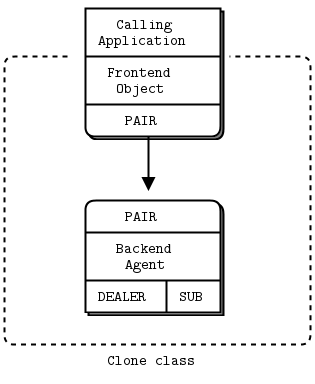

void